Software Detects Glass Walls With 96% Accuracy Without Using Costly Sensors

In today’s world of gleaming skyscrapers, modern offices, and high-tech homes, glass walls and partitions are everywhere. Their transparency and elegance make spaces look open and inviting, but they also pose a unique challenge: how can robots and automated systems detect glass walls safely and affordably? Until recently, the answer was to rely on expensive sensors or risk costly accidents. Now, software that detects glass walls with 96% accuracy using only ordinary cameras is changing that, making environments safer and smarter for everyone.

Let’s break down what this technology means, how it works, and why it matters—whether you’re a curious student, a robotics engineer, or a building manager looking to future-proof your property.



| Feature/Stat | Details |
|---|---|
| Detection Accuracy | 96.77% |
| Hardware Requirements | Standard RGB camera; no costly sensors required |
| Technology | Advanced computer vision and deep learning algorithms |
| Real-world Dataset | 3,916 diverse images (GDD Dataset) |
| Main Applications | Robotics, smart buildings, public safety, drone navigation |
| Official Reference | DGIST Official Website |

Software that detects glass walls with 96% accuracy, without costly sensors, is a notable advance for robotics, smart buildings, and public safety. By using computer vision and deep learning, it makes otherwise invisible barriers visible to machines, reducing the risk of accidents and opening new possibilities for automation. Because it runs on existing cameras, integration is comparatively easy and cheap, and if its reliability holds up outside the lab, it could become a standard feature of the next generation of intelligent environments.

Why Is Glass Wall Detection So Difficult?

Glass is a unique material. It’s transparent, sometimes reflective, and often nearly invisible to the naked eye and to machines. Traditional sensors—like LiDAR, infrared, or ultrasonic—can struggle to detect glass because:

- Transparency: Glass lets light pass through, making it blend into the background.

- Reflections: Changes in lighting and reflections can confuse both humans and machines.

- Lack of Texture: Glass often lacks the texture or color contrast that sensors and cameras use to identify objects.

For robots and automated systems, failing to detect glass can mean accidents, damage, or even safety hazards in public spaces. Imagine a cleaning robot in a shopping mall, a delivery bot in an office, or a drone navigating a glass-walled atrium—without reliable detection, these machines are at risk.

The Traditional Approach: Sensors and Their Limitations

Historically, the solution has been to use specialized sensors:

- LiDAR (Light Detection and Ranging): Uses laser pulses to measure distances. While powerful, LiDAR can misread glass or pass right through it, missing the barrier entirely.

- Ultrasonic Sensors: Send sound waves to detect obstacles. These can sometimes pick up glass but are often unreliable due to the smooth surface and angle of incidence.

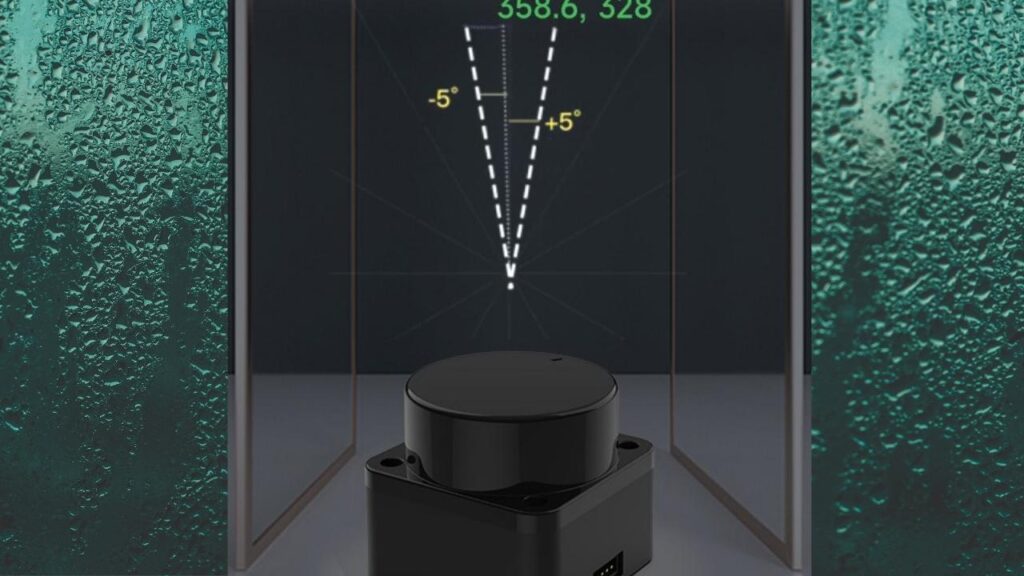

- mmWave Radar: Recent advances in millimeter-wave radar sensors have improved glass detection, offering advantages like immunity to environmental conditions (rain, dust, fog) and the ability to detect both glass and objects behind it. However, these sensors still add cost and complexity to robotic systems.

While these technologies are effective in some scenarios, they come with significant drawbacks:

- High Cost: Advanced sensors are expensive, making them impractical for widespread deployment.

- Complex Integration: Adding new sensors often requires redesigning hardware and software.

- Environmental Sensitivity: Some sensors are affected by lighting, weather, or surface cleanliness.

The Game-Changer: Software-Based Glass Wall Detection

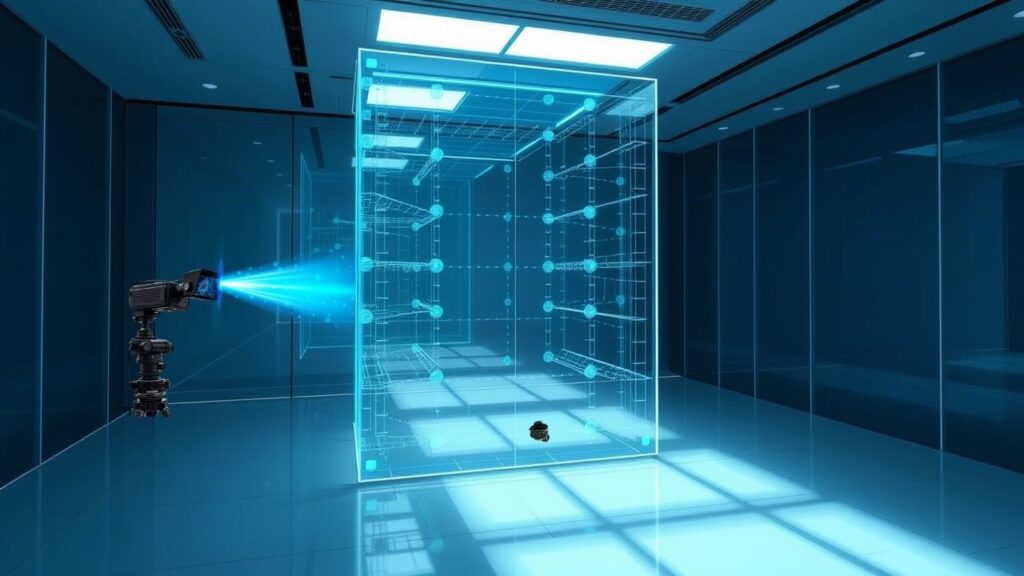

The breakthrough is software that uses standard cameras, like those already found on most robots, security systems, and smartphones, to detect glass walls with up to 96.77% accuracy [1]. It builds on advances in computer vision and deep learning that let machines “see” glass much as a human would.

How Does the Software Work?

Let’s break it down into simple steps:

1. Image Capture

- The system uses a regular RGB camera to take pictures of the environment.

- No need for expensive or specialized hardware.

2. Feature Extraction

- The software analyzes each image for subtle clues: reflections, changes in brightness, faint outlines, and context (like door handles or frames).

- It looks for patterns that typically indicate the presence of glass.
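As a rough illustration of the kind of low-level cues involved, the sketch below measures edge strength and average brightness in a grayscale image stored as nested lists. It is a hypothetical, hand-crafted stand-in: the system described here learns its features with a neural network rather than using fixed formulas like these.

```python
from typing import List

# Hypothetical helpers for illustration only; a trained network learns its own features.
Image = List[List[float]]  # grayscale intensities in [0, 1], indexed [row][col]


def gradient_magnitude(img: Image) -> Image:
    """Approximate edge strength with central differences; border pixels stay at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y][x + 1] - img[y][x - 1]) / 2.0  # horizontal change
            gy = (img[y + 1][x] - img[y - 1][x]) / 2.0  # vertical change
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def mean_brightness(img: Image) -> float:
    """Average intensity of a region, a simple brightness cue."""
    return sum(sum(row) for row in img) / (len(img) * len(img[0]))


# A faint vertical outline (such as the frame of a glass pane) between columns 1 and 2.
frame = [[0.5, 0.5, 0.6, 0.6, 0.6] for _ in range(4)]
print([round(v, 2) for v in gradient_magnitude(frame)[1]])
```

The faint outline produces a small but non-zero edge response on the pixels either side of it; a real detector would combine many such weak signals.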

3. Contextual Analysis

- The algorithms weigh each region against its surroundings, loosely mirroring how a person reasons about the relationship between objects and their context.

- For example, if a chair appears “cut off” or distorted, it might be behind glass.
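The “cut off chair” intuition amounts to comparing what is at a location with what surrounds it. A minimal sketch of that comparison on a grayscale image stored as nested lists: an integral image (summed-area table) gives box averages in constant time, and the cue is the difference between a small local window and a much larger one. Function names and window radii are hypothetical; a trained network learns far richer context than this single number.

```python
from typing import List

# Hypothetical context cue for illustration; not the described system's method.
Grid = List[List[float]]


def integral_image(img: Grid) -> Grid:
    """Summed-area table with zero padding: s[y][x] = sum of img over rows < y, cols < x."""
    h, w = len(img), len(img[0])
    s = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        for x in range(w):
            s[y + 1][x + 1] = img[y][x] + s[y][x + 1] + s[y + 1][x] - s[y][x]
    return s


def box_mean(s: Grid, y: int, x: int, r: int) -> float:
    """Mean of the (2r+1) x (2r+1) window centred on (y, x), clipped to the image."""
    h, w = len(s) - 1, len(s[0]) - 1
    y0, y1 = max(0, y - r), min(h, y + r + 1)
    x0, x1 = max(0, x - r), min(w, x + r + 1)
    total = s[y1][x1] - s[y0][x1] - s[y1][x0] + s[y0][x0]
    return total / ((y1 - y0) * (x1 - x0))


def context_contrast(img: Grid, y: int, x: int, small: int = 1, large: int = 8) -> float:
    """Local mean minus large-neighbourhood mean: does this spot fit its surroundings?"""
    s = integral_image(img)  # recomputed per call for simplicity
    return box_mean(s, y, x, small) - box_mean(s, y, x, large)
```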

4. Deep Learning Model

- The core of the system is a neural network trained on thousands of real-world images (3,916 in the GDD dataset).

- The model learns to recognize glass in a variety of settings: offices, malls, homes, and outdoor scenes.
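Training a model like this typically means predicting a glass probability for every pixel and penalising disagreement with a hand-labelled mask. The sketch below shows pixel-wise binary cross-entropy, a common loss for binary segmentation, in plain Python. It is an assumption for illustration, since the text does not say which loss the described system uses, and the function name is hypothetical.

```python
import math
from typing import List


def pixel_bce(pred: List[List[float]], mask: List[List[int]], eps: float = 1e-7) -> float:
    """Hypothetical helper: mean binary cross-entropy between glass probabilities and a 0/1 mask."""
    total, count = 0.0, 0
    for prow, mrow in zip(pred, mask):
        for p, m in zip(prow, mrow):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total += -(m * math.log(p) + (1 - m) * math.log(1.0 - p))
            count += 1
    return total / count
```

A framework such as PyTorch would compute the same quantity over whole batches and back-propagate it; the plain loop only makes visible what is being averaged.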

5. Detection and Output

- The software marks glass regions in the image, allowing robots or building systems to avoid them.

- In tests, the system achieved a 96.77% accuracy rate, outperforming many traditional methods.
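The text does not say how the 96.77% figure was computed, so it helps to see how two common segmentation metrics behave. This hypothetical sketch thresholds a probability map at an assumed cut-off of 0.5, then reports pixel accuracy and the intersection-over-union (IoU) of the glass class.

```python
from typing import List, Tuple

# Hypothetical evaluation helpers; the 0.5 threshold is an assumption.


def to_mask(prob: List[List[float]], threshold: float = 0.5) -> List[List[int]]:
    """Binarise a per-pixel glass probability map (1 = glass)."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob]


def accuracy_and_iou(pred: List[List[int]], truth: List[List[int]]) -> Tuple[float, float]:
    """Return (pixel accuracy, IoU of the glass class)."""
    correct = inter = union = total = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            correct += int(p == t)
            inter += int(p == 1 and t == 1)
            union += int(p == 1 or t == 1)
            total += 1
    iou = inter / union if union else 1.0  # no glass predicted or present: treat as perfect
    return correct / total, iou
```

If glass covers 1% of a 10×10 image and the model predicts no glass at all, pixel accuracy is still 0.99 while IoU is 0, so it matters which metric a headline figure refers to.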

Real-World Applications and Benefits

Robotics

Robots that clean, deliver, or patrol in buildings can now navigate safely around glass walls, reducing the risk of collisions and damage. For example, a cleaning robot equipped with this software can work close to glass partitions without bumping into them, improving both safety and efficiency.

Smart Buildings

Building management systems can map out glass partitions, improving emergency response, cleaning schedules, and space utilization. Security cameras can also use this technology to better monitor glass doors and windows for break-ins or accidents.

Public Safety

In airports, malls, and hospitals, preventing accidents with glass walls is crucial. This software can help keep both humans and machines safe by making invisible barriers visible.

Drones and Autonomous Vehicles

Drones flying indoors or in urban environments can avoid crashing into glass walls or windows, expanding their usefulness in inspection, delivery, and surveillance.

How to Implement Glass Wall Detection Software

For Building Managers

- Upgrade Security and Cleaning Systems: Integrate the software with existing CCTV or cleaning robots to enhance safety and efficiency.

- Emergency Planning: Use glass detection to improve evacuation routes and emergency response plans.

For Robotics Engineers

- Enhance Navigation: Add glass detection to the robot’s perception stack, reducing the need for costly hardware upgrades.

- Open-Source Tools: Detectors that work on standard camera images can be wrapped as Robot Operating System (ROS) nodes, which can make integration straightforward for developers.
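One way to add glass detection to a perception stack is to mark cells flagged as glass as occupied even when the range sensor reported them free. This minimal sketch assumes the glass mask has already been projected into the same 2D occupancy grid as the range data (that step needs camera calibration and is omitted); the grid encoding and function names are hypothetical.

```python
from typing import List, Tuple

# Hypothetical grid encoding for illustration.
FREE, OCCUPIED = 0, 1


def fuse_glass(occupancy: List[List[int]], glass: List[List[bool]]) -> List[List[int]]:
    """Mark a cell occupied if the range sensor OR the glass detector says so."""
    return [
        [OCCUPIED if (occ == OCCUPIED or g) else FREE for occ, g in zip(orow, grow)]
        for orow, grow in zip(occupancy, glass)
    ]


def path_is_clear(grid: List[List[int]], cells: List[Tuple[int, int]]) -> bool:
    """True if none of the (row, col) cells on a planned path is occupied."""
    return all(grid[r][c] == FREE for r, c in cells)
```

Taking the union is deliberately conservative: a false glass detection costs a detour, while a missed pane costs a collision.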

For Developers

- Leverage Public Datasets: Use datasets like the GDD to train and test your own models.

- Customize for Your Environment: Fine-tune the software for specific lighting, architecture, or use cases.
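Working with a public dataset such as GDD usually starts by pairing each image with its ground-truth mask and holding out a test split. The sketch assumes a hypothetical layout in which an image and its mask share a filename stem (for example `0001.jpg` and `0001.png`); check the dataset’s own documentation, since its actual folder structure may differ.

```python
import random
from pathlib import Path
from typing import List, Tuple

# Hypothetical naming convention: image and mask share a filename stem.
Pair = Tuple[str, str]


def pair_by_stem(image_names: List[str], mask_names: List[str]) -> List[Pair]:
    """Match image and mask files that share a stem; unmatched files are skipped."""
    masks = {Path(m).stem: m for m in mask_names}
    return sorted((img, masks[Path(img).stem]) for img in image_names if Path(img).stem in masks)


def split(pairs: List[Pair], test_fraction: float = 0.2, seed: int = 0) -> Tuple[List[Pair], List[Pair]]:
    """Seeded shuffle-and-split so the train/test sets are reproducible."""
    shuffled = pairs[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]
```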

The Science: Why This Software Works So Well

Large-Field Contextual Feature Integration

The key innovation is large-field contextual feature integration. Rather than judging a single pixel or edge in isolation, the software aggregates information from a wide area around each location, learning how glass interacts with its environment. This is similar to how a person might notice a faint reflection or the way light bends behind a glass partition.
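“Large-field” refers to how much of the image each output unit can see: its receptive field. For a stack of convolutions, the receptive field is 1 plus the sum over layers of (kernel size − 1) × dilation × (product of the strides of all earlier layers). The sketch computes it; the layer stacks in the usage note are illustrative, and the text does not say which configuration the described system uses.

```python
from typing import List, Tuple

# Each layer: (kernel_size, stride, dilation). Illustrative configurations only.
Layer = Tuple[int, int, int]


def receptive_field(layers: List[Layer]) -> int:
    """Receptive field (in input pixels) of one output unit after a stack of conv layers."""
    rf, jump = 1, 1  # jump: input-pixel distance between adjacent units at the current depth
    for k, s, d in layers:
        rf += (k - 1) * d * jump
        jump *= s
    return rf
```

Three plain 3×3 layers give `receptive_field([(3, 1, 1)] * 3) == 7`, while the same three layers with dilations 1, 2 and 4 give 15 with no extra parameters, which is one common way to widen context cheaply.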

Deep Learning and Real-World Data

By training on a wide variety of images, the neural network learns to spot glass even in tricky situations: dark rooms, bright sunlight, cluttered backgrounds, or unusual angles. This makes the system more robust and reliable in real-world settings.
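Covering conditions such as dark rooms and bright sunlight usually also involves data augmentation, that is, randomly altering training images. This is a swapped-in illustration, since the text does not say which augmentations were used: a hypothetical helper that randomly flips an image and its mask together (so the labels stay aligned) and rescales brightness in the image only.

```python
import random
from typing import List, Tuple

# Hypothetical augmentation helper; the parameter ranges are assumptions.
Grid = List[List[float]]
Mask = List[List[int]]


def augment(img: Grid, mask: Mask, rng: random.Random,
            brightness_range: Tuple[float, float] = (0.6, 1.4)) -> Tuple[Grid, Mask]:
    """Random horizontal flip (image and mask together) plus brightness scaling (image only)."""
    if rng.random() < 0.5:
        img = [row[::-1] for row in img]
        mask = [row[::-1] for row in mask]  # flip labels identically
    factor = rng.uniform(*brightness_range)
    img = [[min(1.0, v * factor) for v in row] for row in img]  # keep intensities in [0, 1]
    return img, mask
```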

Comparisons: Software vs. Sensor-Based Detection

| Feature | Software-Based (Camera + AI) | Sensor-Based (LiDAR, mmWave, Ultrasonic) |
|---|---|---|
| Cost | Low (uses existing cameras) | High (specialized hardware required) |
| Integration | Easy (software update) | Complex (hardware and software changes) |
| Accuracy (Glass) | 96.77% (in tests) | Variable; often lower for glass |
| Environmental Robustness | Dependent on lighting, but improving | mmWave: robust; others: variable |
| Scalability | High | Limited by hardware cost |
| Maintenance | Low | Moderate to high |


FAQs About Software That Detects Glass Walls With 96% Accuracy Without Costly Sensors

Q: How accurate is the software in real-world conditions?

A: In peer-reviewed experiments, the software achieved 96.77% detection accuracy for glass walls using only standard cameras [1]. Results may vary with lighting and scene complexity, but in those tests it outperformed traditional camera-only methods.

Q: Does this replace all other sensors?

A: While the software is highly effective for glass detection, other sensors (like mmWave radar) may still be needed for full environmental awareness, especially in challenging conditions like darkness or heavy fog.

Q: Is the software available for commercial use?

A: The technology has been demonstrated in research and is moving toward commercial deployment. For updates and licensing, check with institutions like DGIST or explore open-source projects on platforms like GitHub.

Q: Can I use this with existing robots or cameras?

A: Generally, yes. The main advantage is compatibility with standard RGB cameras, so existing systems can often be retrofitted without major hardware changes, as long as the onboard computer can run the model fast enough.

Q: What if my building has unusual lighting or architecture?

A: The software is trained on a diverse dataset, but for best results, you can fine-tune the model with images from your specific environment.

Practical Advice for Professionals

- Test Before Deploying: Run pilot tests in your specific environment to validate detection accuracy.

- Combine with Other Sensors: For critical safety applications, consider combining software-based detection with mmWave radar or ultrasonic sensors for redundancy.

- Stay Updated: Follow research from leading institutions and industry groups to access the latest improvements and datasets.

- Educate Your Team: Train staff on how the system works and how to respond to alerts or detections.
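A pilot test with a few dozen frames gives only a rough accuracy estimate. This sketch uses the Wilson score interval, a standard choice picked here for illustration, to show the uncertainty around an accuracy measured on n test cases; the function name and example counts are hypothetical.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate confidence interval for a success rate (z = 1.96 gives about 95%)."""
    if n <= 0:
        raise ValueError("need at least one trial")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half
```

For example, 29 correct detections out of 30 frames (about 96.7%) gives an interval of roughly 83% to 99%, so a pilot of that size cannot by itself confirm a figure like 96.77%.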