Method for Training AI to Handle Complex Tasks

Researchers have developed a better method for training AI to handle complex tasks. First introduced by MIT researchers in November 2024, the approach teaches artificial intelligence systems to learn smarter, not harder: instead of training on hundreds of scenarios, the AI focuses on a few key tasks and then applies what it has learned broadly. By combining curriculum learning with Model-Based Transfer Learning (MBTL), the method achieves up to 50× faster training while maintaining performance across varied challenges.

Imagine teaching a child multiplication by starting with easy problems and then scaling up to harder ones. That is exactly the structured learning strategy researchers are now using for AI.

Method for Training AI to Handle Complex Tasks

| Key Highlights | Details |

|---|---|

| Efficiency Gain | Up to 50× faster training on simulated tasks, including traffic control and speed advisories |

| Curriculum Learning | Trains on easier tasks first (akin to kindergarten) before moving to harder tasks |

| Task Selection via MBTL | Chooses a small subset of core tasks using predictive modeling for optimal generalization |

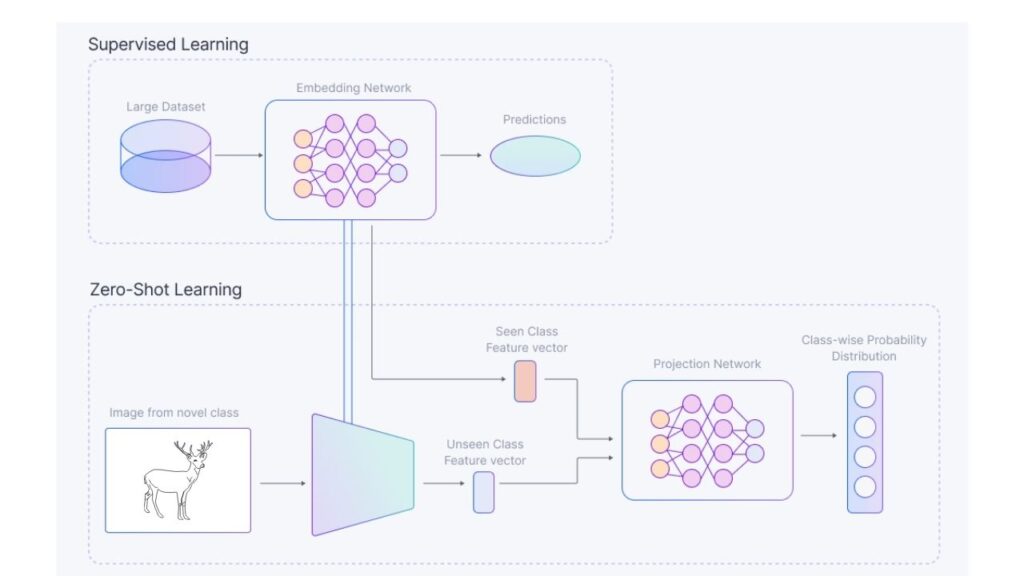

| Zero-Shot Transfer | Applies trained models effectively to new, unseen tasks without additional training |

| Practical Applications | Proven in traffic systems, robotics (e.g., Mini Cheetah), and warehouse automation |

| Professional Takeaway | Lower costs, faster deployment, and better generalization for AI systems in industry and research |

The new method, which combines curriculum learning, MBTL task selection, and zero-shot generalization, marks a significant shift away from brute-force AI training. It delivers up to 50× speed improvements in simulation, strong adaptability to new tasks, and substantial cost reductions. As the technique gains traction, domains such as robotics, urban planning, automation, and AI-driven services stand to benefit from faster, cheaper development. Adopting this structured learning framework helps professionals build AI systems that are efficient, generalizable, and ready for real-world complexity.

Why This Matters

Training AI through brute force, by feeding it massive datasets or hundreds of scenarios, is slow, expensive, and often inflexible. When environments or tasks change even slightly, such models tend to perform poorly, because traditional reinforcement learning (RL) struggles with variability and generalization. In contrast, combining curriculum learning with MBTL mimics how humans build knowledge: step by step, from easy to complex.

For instance, MIT tested MBTL in simulations of traffic signal control and speed advisories. By training on only a few representative intersections, the AI performed as well as or better than standard training approaches across all test scenarios while using just 2–20% of the training data. That is a 5–50× reduction in resource usage.
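The 2–20% and 5–50× figures are two views of the same ratio. If training cost scales roughly linearly with the amount of training data (a simplifying assumption, not a claim from the MIT study), the reduction factor is just the reciprocal of the fraction used. A minimal Python check, with a hypothetical helper name:

```python
def speedup_from_fraction(fraction_used: float) -> float:
    """Reduction factor when only `fraction_used` of the training data is needed.

    Assumes (hypothetically) that training cost scales linearly with data used.
    """
    if not 0 < fraction_used <= 1:
        raise ValueError("fraction_used must be in (0, 1]")
    return 1.0 / fraction_used


for pct in (2, 20):
    factor = speedup_from_fraction(pct / 100)
    print(f"{pct}% of the training data -> {factor:.0f}x reduction")
# 2% of the training data -> 50x reduction
# 20% of the training data -> 5x reduction
```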

How It Works: The Methodology

1. Curriculum Learning

Curriculum learning organizes training examples by difficulty. The idea was formalized for machine learning by Bengio et al. (2009) and later reviewed in surveys published in IEEE and JMLR venues; the technique can help models converge faster and generalize better.

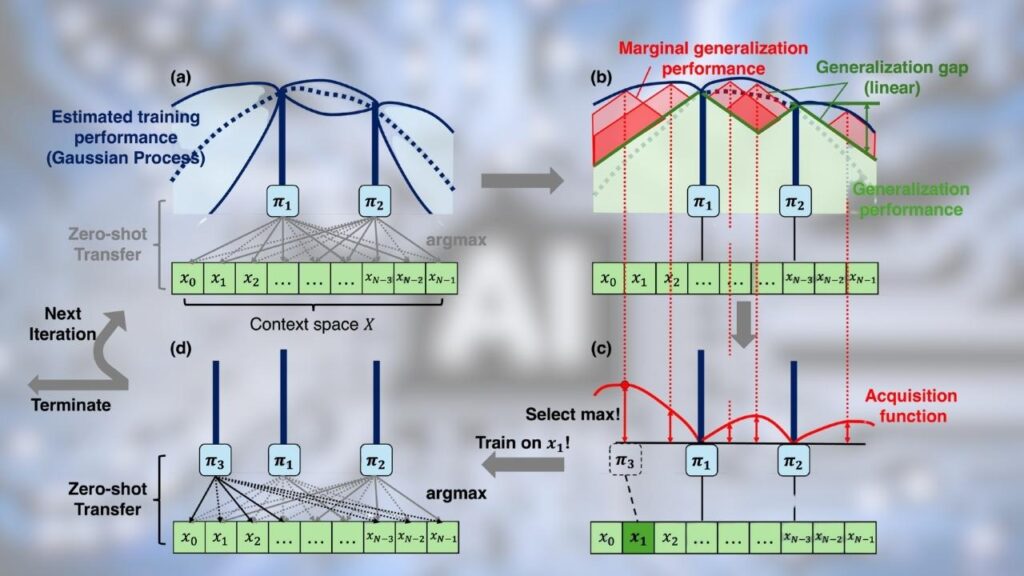
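As a rough illustration, one simple way to build a curriculum is to sort examples by a difficulty score and train on progressively larger pools, easiest first. The sketch below assumes you already have a scalar difficulty function; `curriculum_stages` and the lane-count example are hypothetical, and this is only one of many curriculum schedules, not the specific schedule used in the MIT work.

```python
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def curriculum_stages(
    examples: Sequence[T],
    difficulty: Callable[[T], float],
    num_stages: int,
) -> Iterator[List[T]]:
    """Yield progressively larger training pools, easiest examples first.

    Stage k (1-based) holds the easiest k/num_stages share of the data, so
    each stage keeps the earlier (easier) material and adds harder material.
    """
    if num_stages < 1:
        raise ValueError("num_stages must be >= 1")
    ordered = sorted(examples, key=difficulty)  # easy -> hard
    n = len(ordered)
    for stage in range(1, num_stages + 1):
        cutoff = max(1, round(n * stage / num_stages))
        yield ordered[:cutoff]


# Hypothetical tasks: intersections described by their number of lanes,
# treating more lanes as "harder".
tasks = [4, 1, 3, 2]
for pool in curriculum_stages(tasks, difficulty=lambda lanes: lanes, num_stages=2):
    print(pool)  # a real project would train on `pool` here before moving on
# [1, 2]
# [1, 2, 3, 4]
```

Keeping the easier examples in later stages (rather than swapping them out) is a deliberate choice here: it reduces the risk of the model forgetting foundational skills as harder tasks arrive.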

2. Model-Based Transfer Learning (MBTL)

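MBTL predicts which specific tasks will contribute most to generalization. It uses a two-part model:

- Predict how well a model trained on a single task performs on that task.

- Predict how much that performance degrades when the model is transferred to other tasks.

This lets MBTL select training tasks sequentially, each time adding the task expected to produce the largest improvement in overall performance.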
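The two-part model and sequential selection can be sketched in a few lines of Python. This is a simplified illustration in the spirit of MBTL, not the authors' implementation: it assumes each task is described by a single number (its context, such as a speed limit or intersection index), that an estimated training performance per task is already available, and that the generalization gap grows linearly with the distance between contexts. The function names and the `slope` parameter are hypothetical.

```python
from typing import Dict, List, Sequence


def predicted_transfer(train_perf: float, slope: float, src: float, tgt: float) -> float:
    """Part 2 of the model: training performance on `src` minus an assumed
    generalization gap that grows linearly with context distance."""
    return train_perf - slope * abs(src - tgt)


def greedy_task_selection(
    contexts: Sequence[float],
    train_perf: Dict[float, float],  # part 1: estimated performance per task
    slope: float,
    budget: int,
) -> List[float]:
    """Sequentially pick up to `budget` training tasks that maximize the average
    predicted zero-shot performance over all tasks."""
    selected: List[float] = []
    # best[t]: best predicted performance on task t using the tasks chosen so far.
    best = {t: float("-inf") for t in contexts}

    def avg_with(candidate: float) -> float:
        # Each target task uses whichever selected task transfers to it best.
        total = sum(
            max(best[t], predicted_transfer(train_perf[candidate], slope, candidate, t))
            for t in contexts
        )
        return total / len(contexts)

    for _ in range(min(budget, len(contexts))):
        remaining = [c for c in contexts if c not in selected]
        choice = max(remaining, key=avg_with)
        selected.append(choice)
        for t in contexts:
            best[t] = max(best[t], predicted_transfer(train_perf[choice], slope, choice, t))
    return selected


# Hypothetical example: seven intersections indexed 0..6, equal training performance.
contexts = [0, 1, 2, 3, 4, 5, 6]
perf = {c: 10.0 for c in contexts}
print(greedy_task_selection(contexts, perf, slope=1.0, budget=2))
```

With equal training-performance estimates, the first pick is the middle intersection (3), which is closest to every other task on average. Several tasks tie for the second pick in this symmetric toy setup, and Python's `max` keeps the first one it encounters; real estimates would usually break such ties.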

3. Zero-Shot Transfer

After training on the curated set, the AI applies learned strategies directly to unseen scenarios—no further training needed. This helps ensure scalability and efficiency.
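At deployment time, zero-shot transfer can be as simple as choosing, for each unseen task, whichever already-trained model is predicted to perform best on it, with no further training. The sketch below assumes a linear transfer model in which predicted performance equals a policy's training performance minus `slope` times the context distance; the policy type, function name, and numbers are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical policy type: maps an observation to an action label.
Policy = Callable[[float], str]


def pick_zero_shot_policy(
    trained: Dict[float, Policy],
    train_perf: Dict[float, float],
    slope: float,
    new_context: float,
) -> Policy:
    """Return the already-trained policy predicted to do best on `new_context`.

    No retraining happens: existing policies are only ranked using the assumed
    linear transfer model (training performance minus slope * context distance).
    """
    def predicted(src: float) -> float:
        return train_perf[src] - slope * abs(src - new_context)

    best_src = max(trained, key=predicted)
    return trained[best_src]


# Policies trained on two speed-limit contexts (values are hypothetical).
trained = {30.0: lambda obs: "policy@30", 50.0: lambda obs: "policy@50"}
perf = {30.0: 0.9, 50.0: 0.9}

policy = pick_zero_shot_policy(trained, perf, slope=0.01, new_context=45.0)
print(policy(0.0))  # policy@50
```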

Real-World Success Stories

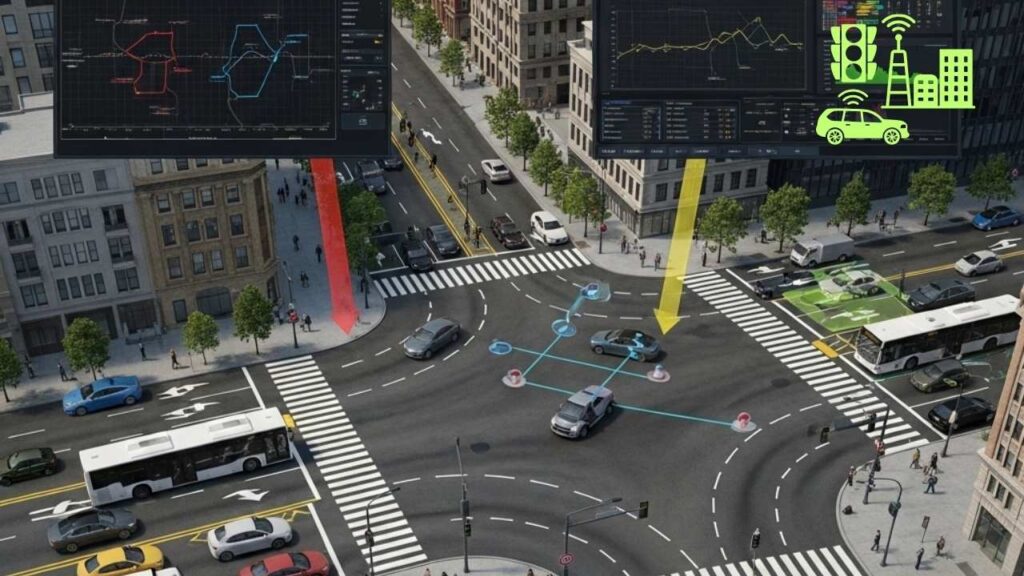

Traffic Management

MIT’s experiments involved simulated city intersections with varying vehicle types, lanes, and regulations.

Training on just 2 representative intersections, MBTL-enabled AI managed all others effectively—using a fraction of traditional compute time.

Robotics: Mini Cheetah

MIT researchers trained the four-legged Mini Cheetah robot through simulation on multiple terrains, achieving stable running at 9 mph. The AI not only learned efficiently but also adapted to real-world environments without vision-based feedback.

Warehouse Automation

Covariant’s warehouse robots use imitation learning and reinforcement learning to pick a wide variety of items, illustrating how real-world robot learning can benefit from structured training frameworks.

Contact-Rich Industrial Tasks

An arXiv study reported that curriculum-based training on simulated robotic insertion tasks achieved an 86% real-world success rate while using just one fifth of the training samples required by less structured techniques.

Step-by-Step Guide for Implementing in Projects

1. Define Task Space

Break down your overall goal into related task variations (e.g., types of routes, assembly tasks).

2. Design Curriculum Order

Start with easy, low-variation tasks. Progressively introduce complexity to foster foundational learning.

3. Apply MBTL Task Selection

Use predictive models to estimate which tasks best enhance generalizable performance, then choose a small, representative set.

4. Train Your Model

Use reinforcement or supervised learning on the selected tasks. Track performance metrics continuously.

5. Test Zero-Shot

Deploy the model on unseen tasks. Evaluate success rates, error patterns, and adaptability.

6. Refine Iteratively

If some scenarios underperform, revisit curriculum steps or add pivotal tasks. Continuous monitoring is key.
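The final refinement step can be read as a loop: evaluate zero-shot performance on every task, and if any task falls below a target, add the worst one to the training set and repeat. In the sketch below, `evaluate_zero_shot` is a hypothetical callback that, in a real project, would train on the selected tasks and score the result on one target task; the toy evaluator, task IDs, and threshold are made up for illustration.

```python
from typing import Callable, Dict, List, Sequence


def refine_training_set(
    all_tasks: Sequence[int],
    selected: Sequence[int],
    evaluate_zero_shot: Callable[[List[int], int], float],
    threshold: float,
    max_rounds: int = 5,
) -> List[int]:
    """Grow the training set until every task meets `threshold` or rounds run out."""
    chosen = list(selected)
    for _ in range(max_rounds):
        # Score every task zero-shot using models trained only on `chosen`.
        scores: Dict[int, float] = {t: evaluate_zero_shot(chosen, t) for t in all_tasks}
        failing = [t for t in all_tasks if scores[t] < threshold and t not in chosen]
        if not failing:
            break  # every task is good enough: stop refining
        # Add the single worst-performing task as a new "pivotal" training task.
        chosen.append(min(failing, key=lambda t: scores[t]))
    return chosen


# Toy evaluator (hypothetical): performance drops by 0.2 per unit of distance
# to the nearest training task, e.g. tasks indexed by lane count.
def toy_evaluator(train_tasks: List[int], target: int) -> float:
    return 1.0 - 0.2 * min(abs(s - target) for s in train_tasks)


print(refine_training_set([1, 2, 3, 4, 5, 6], [1], toy_evaluator, threshold=0.7))
# [1, 6, 3]
```

Adding only one task per round keeps each retraining cycle small; `max_rounds` caps the total cost if some tasks never reach the threshold.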

Why Professionals Should Adopt This

- Cost efficiency: Drastically reduce compute and energy consumption.

- Faster iteration: Move from prototype to real-world testing more quickly.

- Robust scalability: Easily adapt models to new but related environments.

- Competitive edge: Be at the forefront of deploying intelligent systems in varied fields.

This approach is relevant across industries—smart cities, manufacturing, healthcare, autonomous transport, and customer robotics.


FAQs About Method for Training AI to Handle Complex Tasks

What is curriculum learning?

A method where training data is structured from easy to hard, similar to human education.

What is transfer learning?

Reusing a pre-trained model for new tasks to save time, data, and compute resources.

How does MBTL improve efficiency?

By modeling and selecting tasks that best support generalization, MBTL reduces wasted training on redundant scenarios.

Is this method applicable beyond simulations?

Yes—robotics and industrial automation projects have demonstrated real-world benefits.

What are the limitations?

Curriculum design requires domain knowledge; MBTL's task selection is only as good as its performance predictions; and zero-shot transfer may fail on tasks that differ substantially from those seen during training.

Practical Tips

- Use simulators for controlled, cost-effective training environments.

- Monitor transfer performance after each curriculum stage.

- Vary your task sets to avoid overfitting.

- Log and compare results in both simulation and physical deployments.