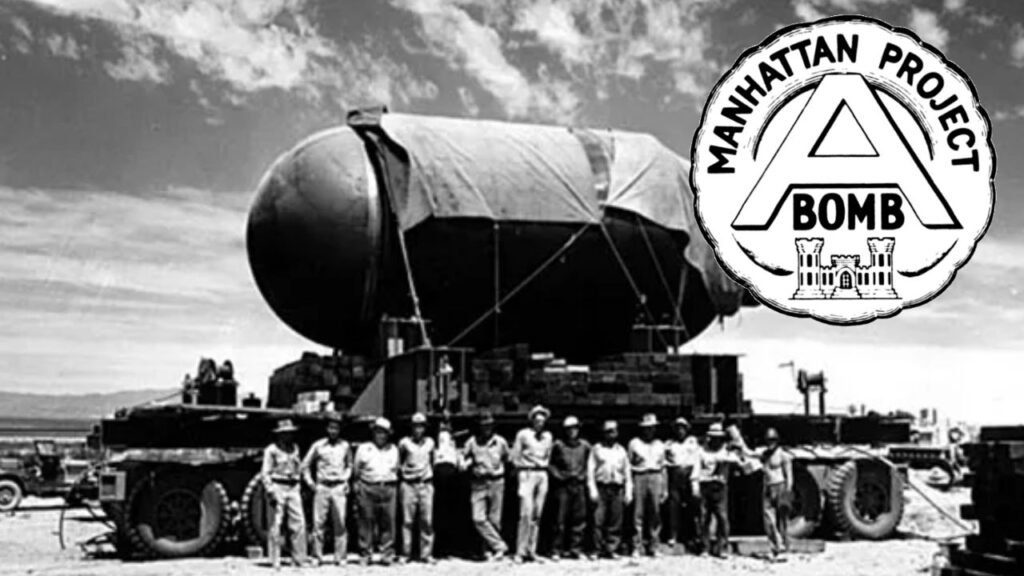

Artificial intelligence (AI) is reshaping the world at an extraordinary pace. At the forefront is Sam Altman, CEO of OpenAI, the company behind ChatGPT and the GPT-4 model. Altman has drawn a striking comparison between building an AI startup focused on artificial general intelligence (AGI) and the Manhattan Project, the World War II effort that produced the first nuclear bomb. The analogy highlights the enormous scale, ambition, and ethical dilemmas that AI developers face today.

Understanding why a tech leader would liken AI development to one of the most consequential scientific efforts in history helps clarify both AI’s potential impact and its challenges. This article unpacks that comparison: its context, the stakes involved, practical takeaways, and common questions about AI’s future.

What Was the Manhattan Project and Why Does Sam Altman Refer to It?

The Manhattan Project was a secret U.S. government program during World War II to develop the first atomic bomb. It brought together some of the brightest scientists of the era, with physicist J. Robert Oppenheimer directing its Los Alamos laboratory, and produced a weapon that permanently changed warfare, politics, and global power. The bombings of Hiroshima and Nagasaki in August 1945 opened a new era in which immense power came paired with existential threat.

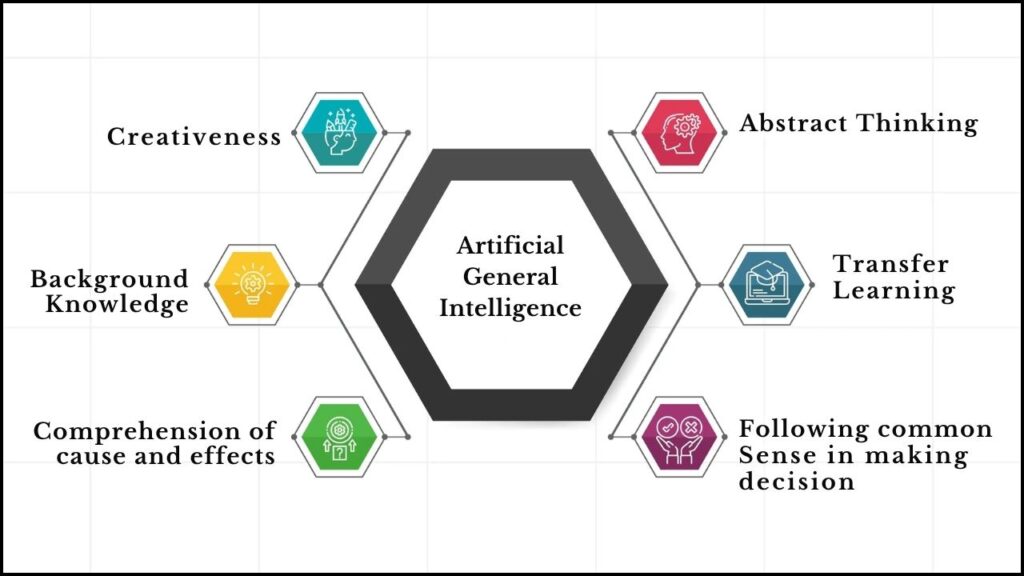

Sam Altman compares OpenAI’s mission to this project because both involve unlocking technology that could transform humanity in profound ways. OpenAI is striving to create AGI: AI capable of performing any intellectual task a human can. Like the atomic bomb, AGI could bring enormous benefits but also deep risks.

Sam Altman Compares Building an AI Startup to Creating a Nuclear Bomb

| Topic | Details |
|---|---|
| Scale & Ambition | OpenAI’s goals are likened to the Manhattan Project, emphasizing huge impact and risk. |
| Ethical Challenges | Development involves questions about societal good vs. harm and moral responsibility. |
| Global Competition | AI represents a worldwide race like nuclear arms, necessitating international cooperation. |
| Personal Symbolism | Altman shares a birthday with Oppenheimer, deepening his connection to the analogy. |

Sam Altman’s comparison of building OpenAI to the Manhattan Project highlights the dual nature of AI technology: immense potential benefits paired with serious risks. His analogy serves as a powerful reminder that AI innovation demands responsible governance, international cooperation, and deep ethical reflection. As AI continues to evolve rapidly, the historical lessons of nuclear technology urge caution to ensure this transformative power builds a better, safer future for all.

Breaking Down Altman’s Nuclear Bomb Analogy: What It Means

1. Scale and Ambition: Enormous Risks and Rewards

Altman compares AI development to the Manhattan Project because both represent scientific breakthroughs of enormous scale and consequence. The atomic bomb irrevocably changed global power dynamics. AGI could similarly transform fields from medicine to education and the economy, but it carries risks ranging from misinformation and automation-driven job losses to, in the worst case, existential danger.

Companies and nations are now racing to build ever more advanced AI. Altman warns that it’s a “race against time and regulation” to ensure this powerful technology is developed safely.

2. Ethical Responsibility and Moral Dilemmas

Scientists who built the atomic bomb famously wrestled with the moral weight of their creation. Oppenheimer later recalled a line from the Bhagavad Gita: “Now I am become Death, the destroyer of worlds.” Altman has spoken openly about a similar tension, wondering whether what he is building will ultimately help or harm humanity.

This analogy highlights the importance of ethical stewardship in AI development, where innovation must be balanced with caution and responsibility.

3. Global Competition and the Need for Strong Oversight

Much like the Cold War nuclear arms race, AI development is now a global competition involving companies and nations. This competitive dynamic raises concerns about rushed development without sufficient safety measures.

Altman advocates for international regulation and cooperation, similar to nuclear non-proliferation treaties, to ensure AI does not become a source of catastrophic harm. This includes transparent frameworks for research, deployment, and risk mitigation.

4. Personal and Symbolic Connection

Altman has noted that he shares a birthday with Oppenheimer: April 22. For him, the coincidence adds a layer of personal symbolism to the comparison and underscores the historical weight he attaches to this kind of technological work.

Practical Advice for Professionals and Enthusiasts Engaging with AI Today

- Educate Yourself About AI: Learn the basics of AI and its current capabilities and limitations. Trusted sources include OpenAI’s own blog and organizations dedicated to AI safety and ethics.

- Support Responsible AI Governance: Encourage governments, companies, and global bodies to create clear, effective regulations that evolve with AI technology.

- Consider Ethics in AI Development: For those working in AI, always balance innovation with reflection on societal impacts and long-term consequences.

- Stay Informed on AI Safety Efforts: Follow expert initiatives urging caution, such as calls to pause the training of extremely powerful AI models until safety protocols mature.

FAQs About Sam Altman’s Comparison of AI to the Nuclear Bomb

What is Artificial General Intelligence (AGI)?

AGI refers to hypothetical AI systems with human-level intelligence, capable of understanding, learning, and applying knowledge across a wide range of tasks. Current AI systems, by contrast, are built for narrower tasks such as language or image recognition.

Why does Altman compare AI development to the Manhattan Project?

The analogy emphasizes that AI is not just a technological breakthrough, but a development with potentially historic impact—both positive and negative—requiring serious ethical reflection and governance, much like the development of nuclear weapons.

How safe is AI today?

Current AI technologies are generally safe for many applications, but rapidly improving capabilities also introduce new risks. Experts warn that without adequate safety measures and oversight, AI could be misused or cause unintended harm.

What role should governments play in AI?

Governments should develop and enforce laws, standards, and international agreements to make sure AI is developed responsibly and safely, protecting societies from misuse or dangerous outcomes.

How can individuals contribute to AI safety?

Individuals can stay informed, participate in discussions about AI ethics and policy, support responsible AI companies, and advocate for transparency and accountability in AI development.