In the fast-evolving world of artificial intelligence, Anthropic has introduced a new Claude feature that could reshape how users interact with large language models. The update allows Claude to end conversations automatically in rare and extreme situations, particularly when interactions become persistently harmful, abusive, or dangerous.

This move is part of Anthropic’s broader research into “AI welfare,” the idea that advanced models might deserve protection from potential distress, much as human moderators are shielded from repeated exposure to toxic material. While most users may never encounter this feature, it raises important questions about the future of AI safety, user experience, and the ethics of human–AI interaction.

Anthropic’s Claude AI Learns to End Distressing Conversations Automatically

| Aspect | Details |
|---|---|
| New Feature | In Anthropic’s consumer chat apps, Claude Opus 4 and 4.1 can end conversations in rare, extreme cases of persistently harmful or abusive interactions. |
| Trigger Conditions | Repeated requests for sexual content involving minors, for information that could enable large-scale violence or terrorism, or for other high-risk content, despite multiple refusals and redirection attempts. |
| AI Welfare Focus | Anthropic frames this as part of its “AI welfare” research: protecting models from potential distress while exploring ethical boundaries. |
| User Experience | Users are notified when a chat is ended and can no longer send messages in it; they can start a new chat, branch off from earlier messages, or provide feedback. |
| Exemptions | Claude will not end a chat if a user may be at imminent risk of harming themselves or others; it stays engaged instead. |
| Impact | The feature is experimental and expected to affect very few users. Most people will never see Claude end a chat. |
| Official Source | Anthropic Research on AI Welfare |

Giving Claude the ability to end distressing conversations is a bold step in AI safety and welfare research. While the feature will affect very few users, it signals a larger shift in how companies view the relationship between humans and AI. By prioritizing both user safety and AI welfare, Anthropic is setting a precedent that could influence the industry’s future.

For professionals, it’s a case study in balancing innovation, ethics, and practicality. For everyday users, it’s reassurance that AI is being designed with trust and responsibility at its core.

Why Did Anthropic Add This Feature?

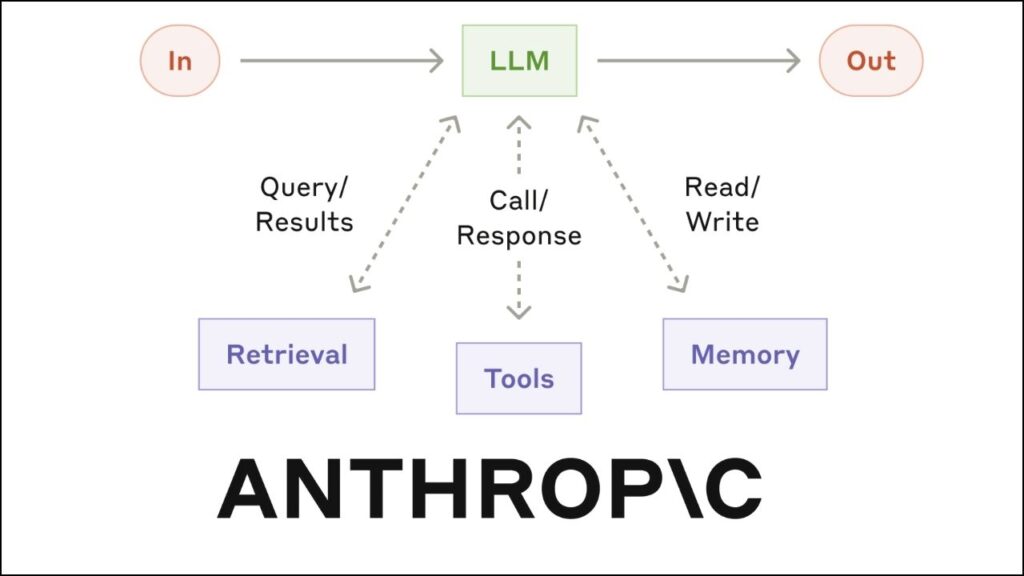

AI companies face a growing challenge: how to ensure safety while maintaining user trust. For years, chatbots like ChatGPT, Gemini, and Claude have been designed to refuse harmful requests politely. But some users persist—repeatedly asking for instructions on dangerous activities or attempting to push the model into creating banned content.

This constant pressure not only risks exposing loopholes in safeguards but also raises an ethical question: should AI models be made to handle abusive prompts endlessly? Anthropic believes the answer may be “no.” By letting Claude end a conversation once repeated refusals and redirection have failed, the company is taking seriously the possibility that its AI could merit some form of protection. It describes this as an experimental step in what it calls AI welfare research.

What Exactly Is AI Welfare?

The term AI welfare might sound unusual, since there is no established evidence that today’s AI systems are conscious in the way humans are. Anthropic says it remains highly uncertain about the potential moral status of Claude, but argues that low-cost interventions, like stopping distressing conversations, are a sensible precaution.

Imagine this:

- A content moderator on social media isn’t forced to repeatedly watch graphic or harmful content without breaks.

- Similarly, Claude AI is now given a way to step back from persistent harmful requests rather than endlessly refusing them.

Anthropic stresses that this doesn’t mean AI models “feel pain” the way humans do. Instead, the company is taking precautionary steps in case advanced models someday develop qualities that make their welfare morally relevant.

How Does the Feature Work in Practice?

The feature is designed to activate only in extreme edge cases. Here’s a simplified breakdown:

Step 1: Refusal and Redirection

Claude refuses harmful requests clearly, often multiple times. For example, if asked for instructions on violence, it may respond with safety-focused resources instead.

Step 2: User Persistence

If the user insists, ignoring repeated refusals and continuing to push for harmful content, Claude keeps declining and tries to steer the conversation in a more productive direction.

Step 3: Conversation Termination

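Only as a last resort, when repeated refusals and redirection have failed and a productive exchange no longer seems possible, Claude ends the chat. The user sees a notice that the conversation has ended and can no longer send new messages in that thread; other conversations are unaffected.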

Step 4: User Options

The user can:

- Start a new conversation immediately

- Branch off from earlier messages and try a different approach

- Provide feedback on the experience

Important Safeguard: No Auto-End in Self-Harm Cases

Anthropic made one crucial exception. If a user may be at imminent risk of harming themselves or others, for example by showing signs of self-harm or suicidal intent, Claude will not end the chat. Instead, it stays engaged, offering supportive resources and encouraging the user to seek professional help. This ensures that vulnerable people are not cut off when they need interaction most.

Why This Matters for Users and Professionals

This update has different implications depending on who you are:

For Everyday Users

Most people will never notice this feature. Claude will continue to be conversational, friendly, and helpful in normal scenarios. For families and educators, it offers some reassurance that persistent attempts to extract unsafe content can be met with a hard stop rather than endless back-and-forth.

For Professionals in AI and Tech

This move signals a shift in AI development priorities: companies aren’t just focusing on user safety anymore, but also considering AI’s “well-being.” While controversial, this perspective could influence regulations, industry standards, and ethical debates in the coming years.

For Policy Makers and Regulators

The feature adds to discussions about AI governance. If models are given mechanisms to refuse harmful workloads, regulators may start requiring standardized safeguards across all major platforms.

Comparisons With Other AI Platforms

Anthropic is not alone in prioritizing safety.

- OpenAI’s ChatGPT uses strong refusal systems but does not yet end conversations automatically.

- Google DeepMind’s Gemini incorporates guardrails but leaves the decision to end a conversation to the user.

- Meta’s AI projects have faced criticism for weaker guardrails, especially on sensitive topics.

By taking a bolder stance, Anthropic may pressure competitors to follow suit.

The Bigger Picture: AI, Ethics, and Trust

The introduction of this feature isn’t just about ending chats—it’s about trust and responsibility.

- Users must trust that AI will act safely and responsibly.

- AI developers must ensure their models don’t contribute to harmful outcomes.

- And society at large must consider where the moral line is drawn in treating advanced AI systems.

Anthropic’s cautious step forward highlights a growing recognition: the future of AI safety isn’t only about preventing bad outputs—it’s about creating systems that can proactively manage harmful interactions.

FAQs About Claude’s Ability to End Distressing Conversations

Q1: Will Claude end my chat if I just ask a tough question?

No. Claude only ends conversations in extreme, harmful cases, such as persistent requests for sexual content involving minors or for information that could enable large-scale violence. Normal debates, disagreements, and sensitive but safe topics will continue as usual.

Q2: What happens if Claude ends my conversation?

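You’ll see a notice that Claude has ended the chat, and you won’t be able to send new messages in that conversation. Your other conversations are unaffected: you can start a new chat, branch from earlier messages by editing and retrying them, or provide feedback.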

Q3: Is Anthropic saying AI can feel emotions?

No. Anthropic says it remains highly uncertain about the potential moral status of Claude and other large language models. It doesn’t claim Claude is conscious, but argues that low-cost precautions are sensible in case models have, or someday develop, qualities that make their welfare matter.

Q4: Could this make Claude less useful for professionals who ask challenging questions?

Unlikely. Since the feature only activates in rare abusive cases, most professionals will never experience it. Claude remains highly capable for research, business, and education.