Artificial Intelligence (AI) is revolutionizing our world, changing how we live, work, and do business. However, powering the advanced AI models developed by leading companies like Alphabet, Microsoft, Amazon, and Meta requires an enormous amount of electricity. The soaring energy demands of AI data centers are profoundly reshaping the global energy landscape.

This article explains Big Tech’s AI power hunger and how these companies plan to satisfy it sustainably. We will break down the numbers, provide practical insights, and explore what this means for the energy sector, tech industries, and professionals.


Why Is AI Power Consumption So High?

Training and running AI models, especially large-scale ones like GPT-4, demands massive computing resources. For instance, training GPT-4 alone reportedly drew roughly 30 megawatts of power, comparable to the average electricity use of tens of thousands of U.S. homes.
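As a rough sanity check on the homes comparison above, the sketch below converts a steady 30 MW draw into an equivalent number of average U.S. homes. It assumes a round figure of about 10,500 kWh of electricity per home per year; real averages vary by year and region, so treat the result as an order of magnitude.

```python
# Rough conversion: how many average U.S. homes draw the same power as a
# steady load? ASSUMPTION: an average home uses about 10,500 kWh per year
# (a round figure; actual averages vary by year and region).
HOURS_PER_YEAR = 8760

def homes_equivalent(load_mw: float, home_kwh_per_year: float = 10_500) -> float:
    """Number of average homes whose combined average draw equals load_mw."""
    avg_home_kw = home_kwh_per_year / HOURS_PER_YEAR  # about 1.2 kW, around the clock
    return (load_mw * 1_000) / avg_home_kw            # MW -> kW, then divide

print(round(homes_equivalent(30)))  # prints 25029
```

Because a megawatt is a rate of energy use, the comparison is between average draws; the total energy of a training run also depends on how long it lasts.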

AI workloads rely on specialized hardware such as GPUs and AI accelerators, which consume about ten times more power per server rack than traditional data center equipment. The explosive popularity of generative AI services like ChatGPT drives tech giants to build more AI-centered data centers, significantly increasing power consumption.
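To see how the per-rack gap adds up, the sketch below multiplies server count by per-server draw for two racks. The server counts and kilowatt figures are hypothetical round numbers picked to reproduce the roughly tenfold ratio, not measurements of any specific hardware.

```python
# Illustrative only: per-rack power for a conventional rack vs. a rack of
# AI accelerator servers. All figures are HYPOTHETICAL round numbers chosen
# for the example, not vendor specifications.
from dataclasses import dataclass

@dataclass
class Rack:
    name: str
    servers: int
    kw_per_server: float

    def power_kw(self) -> float:
        """Total rack draw is simply servers times per-server draw."""
        return self.servers * self.kw_per_server

traditional = Rack("traditional", servers=10, kw_per_server=0.5)  # 5 kW total
ai = Rack("ai_accelerator", servers=5, kw_per_server=10.0)        # 50 kW total

ratio = ai.power_kw() / traditional.power_kw()
print(f"{traditional.power_kw()} kW vs {ai.power_kw()} kW -> {ratio:.0f}x")
# prints "5.0 kW vs 50.0 kW -> 10x"
```

Even with fewer servers per rack, dense accelerator servers concentrate far more power (and heat) in the same floor space, which is why cooling and electrical distribution become bottlenecks.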

Big Tech’s AI Power Hunger

| Topic | Details |
|---|---|
| AI data center power demand (U.S.) | Expected to rise from 35 gigawatts in 2024 to 78 gigawatts by 2035, more than doubling the current demand |
| Global data center electricity consumption | Projected to more than double by 2030 to approximately 945 terawatt-hours (TWh) due to AI growth |
| AI’s share of data center power | Could reach nearly 50% of total data center electricity consumption by the end of 2025 |
| U.S. data centers’ share of electricity use | May reach 7–12% of total U.S. electricity consumption by 2028, possibly up to 20% with continued growth |
| AI infrastructure investment | Big Tech companies spending tens of billions annually to build and upgrade AI data centers |
| Challenges | Semiconductor shortages, limited grid capacity, cooling requirements, and construction delays |
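One way to read the U.S. projection in the table is as a yearly growth rate. The sketch below computes the compound annual growth rate (CAGR) implied by the 35 GW (2024) and 78 GW (2035) figures; it assumes smooth compounding, which real build-outs will not follow exactly.

```python
# Implied compound annual growth rate (CAGR) of U.S. AI data center power
# demand, using the 35 GW (2024) and 78 GW (2035) figures from the table.
# ASSUMPTION: growth compounds at a constant rate each year.

def cagr(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

rate = cagr(35, 78, 2035 - 2024)
print(f"{rate:.1%} per year")  # prints "7.6% per year"
```

A steady rate of roughly 7.6% a year is enough to more than double demand over 11 years.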

Big Tech’s seemingly insatiable demand for electricity to power AI is driving a historic expansion in data center energy use and creating a global energy challenge. With demand poised to double or triple in the coming decade, substantial investments in infrastructure, renewable energy, and innovation are essential.

For energy professionals, tech leaders, and businesses, navigating this evolving landscape requires a balance of scale, sustainability, and collaboration. By embracing strategic planning and cutting-edge technologies, the tech industry can ensure AI remains a transformative force without overwhelming the world’s power systems.

For detailed insights, see the International Energy Agency’s Energy and AI report.

What’s Driving This Surge in AI Power Demand?

- Rapidly Growing AI Applications: AI is now built into many digital services, including search engines, language translation, autonomous vehicles, and health technology, all of which demand substantial computing power.

- Bigger and More Complex AI Models: Larger models need far more computation, and therefore far more energy, both to train and to serve. Training compute grows roughly with the number of parameters multiplied by the amount of training data, so scaling both at once multiplies the energy required.

- Expanding AI Data Centers: Tech giants are building numerous specialized data centers worldwide with massive energy footprints specifically designed for AI workloads.

- Continuous Operation: AI services run 24/7, requiring uninterrupted power and robust cooling systems to prevent overheating of hardware.
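To make the model-size point concrete, the sketch below uses a common rule of thumb from the scaling-law literature: training a dense transformer takes about 6 × N × D floating-point operations, where N is the parameter count and D the number of training tokens. The model sizes, token counts, hardware efficiency (useful operations per joule), and PUE are all hypothetical inputs chosen for illustration.

```python
# Back-of-envelope training energy for a dense transformer model.
# ASSUMPTIONS (all hypothetical inputs):
#   - training compute ~= 6 * N * D FLOPs (rule of thumb for dense
#     transformers; N = parameters, D = training tokens)
#   - the hardware delivers `flops_per_joule` of useful work (this folds in
#     peak throughput and real-world utilization)
#   - facility overhead (cooling, power conversion) is a PUE multiplier

def training_energy_mwh(params: float, tokens: float,
                        flops_per_joule: float, pue: float = 1.2) -> float:
    flops = 6 * params * tokens           # total training compute
    it_joules = flops / flops_per_joule   # energy used by the IT hardware
    facility_joules = it_joules * pue     # add cooling and other overhead
    return facility_joules / 3.6e9        # 1 MWh = 3.6e9 J

small = training_energy_mwh(params=7e9, tokens=1e12, flops_per_joule=2e11)
large = training_energy_mwh(params=70e9, tokens=10e12, flops_per_joule=2e11)
print(f"small: {small:,.0f} MWh, large: {large:,.0f} MWh, ratio: {large / small:.0f}x")
# prints "small: 70 MWh, large: 7,000 MWh, ratio: 100x"
```

Under this approximation, growing both the model and its training data tenfold raises training energy a hundredfold. The growth is multiplicative rather than literally exponential, but steep all the same.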

How Big Tech Plans to Feed AI’s Power Hunger

1. Massive Investment in State-of-the-Art Infrastructure

Leading tech firms are investing billions of dollars a year in building new data centers and upgrading existing ones with the latest AI hardware. Together, these facilities draw power comparable to that of tens of millions of homes. For example, data center projects already total about 64 gigawatts of power capacity, and further plans could add more than 130 gigawatts.
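Capacity in gigawatts is not the same as energy consumed. The sketch below converts the 64 GW and 130 GW figures into terawatt-hours per year under an assumed load factor (average draw as a share of capacity). The load factors are hypothetical, since facilities rarely run at full nameplate capacity year-round.

```python
# What the quoted capacity figures could mean in annual energy terms.
# ASSUMPTION: the load factor (average draw / capacity) is a hypothetical
# input; real facilities rarely run at 100% of nameplate capacity.
HOURS_PER_YEAR = 8760

def annual_twh(capacity_gw: float, load_factor: float) -> float:
    """GW x hours = GWh; divide by 1,000 to get TWh."""
    return capacity_gw * load_factor * HOURS_PER_YEAR / 1_000

existing_gw, planned_gw = 64, 130
for lf in (0.6, 0.8):
    print(f"load factor {lf:.0%}: "
          f"{annual_twh(existing_gw, lf):,.0f} TWh now, "
          f"{annual_twh(existing_gw + planned_gw, lf):,.0f} TWh if plans complete")
```

The load factor matters as much as the headline capacity: the same fleet at 60% versus 80% average utilization differs by a third in annual energy.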

2. Securing Renewable Energy and Carbon Offsets

To manage their carbon footprints, companies are locking in long-term power purchase agreements (PPAs) with renewable generators such as solar and wind farms. Microsoft, Amazon, and Google are major investors in clean energy projects, aiming to power their AI infrastructure sustainably.
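A PPA can cover a data center's total yearly consumption without covering every hour, because solar and wind output does not follow a round-the-clock load. The sketch below contrasts annual matching with hourly matching over a single day; the flat 100 MW load and the daylight-only supply profile are hypothetical.

```python
# Annual vs. hourly matching of a renewable PPA against a data center load.
# All profiles below are HYPOTHETICAL: a flat 100 MW load and a solar-heavy
# PPA that produces the same total energy but only during daylight hours.

def annual_match(load: list[float], supply: list[float]) -> float:
    """Share of total load covered by total contracted supply (capped at 1)."""
    return min(sum(supply) / sum(load), 1.0)

def hourly_match(load: list[float], supply: list[float]) -> float:
    """Share of load covered hour by hour; surplus in one hour can't cover another."""
    matched = sum(min(l, s) for l, s in zip(load, supply))
    return matched / sum(load)

load = [100.0] * 24                               # MW, flat 24/7 load
supply = [0.0] * 6 + [200.0] * 12 + [0.0] * 6    # MW, daylight-only output

print(f"annual: {annual_match(load, supply):.0%}, hourly: {hourly_match(load, supply):.0%}")
# prints "annual: 100%, hourly: 50%"
```

This gap is why some companies have begun framing clean-energy goals around hourly rather than annual matching, which typically requires adding storage or firm low-carbon supply.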

3. Innovating for Greater Energy Efficiency

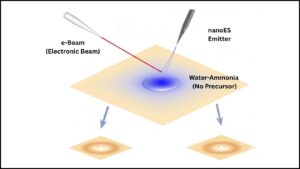

- Advanced Cooling Techniques: Moving from traditional air cooling to liquid immersion and other cutting-edge systems can reduce the energy spent on cooling by over 20%.

- Specialized AI Chips: Companies are designing AI-specific processors that deliver more computation per unit of electricity.

- AI-Driven Optimization: Using AI itself to dynamically control and optimize energy usage in real time enhances efficiency.
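Cooling savings are usually tracked through Power Usage Effectiveness (PUE): total facility energy divided by the energy used by IT equipment, where 1.0 would mean zero overhead. The sketch below applies a 20% cut in cooling energy to a hypothetical energy split to show how that translates into PUE and total savings.

```python
# Effect of cutting cooling energy on Power Usage Effectiveness (PUE).
# PUE = total facility energy / IT equipment energy (1.0 would be perfect).
# The starting energy split below is HYPOTHETICAL, chosen for illustration.

def pue(it_kwh: float, cooling_kwh: float, other_kwh: float) -> float:
    return (it_kwh + cooling_kwh + other_kwh) / it_kwh

it, cooling, other = 1_000.0, 300.0, 100.0     # kWh over some period
before = pue(it, cooling, other)
after = pue(it, cooling * 0.8, other)          # cooling energy cut by 20%

print(f"PUE {before:.2f} -> {after:.2f}; "
      f"facility energy saved: {(cooling * 0.2) / (it + cooling + other):.1%}")
# prints "PUE 1.40 -> 1.34; facility energy saved: 4.3%"
```

Because IT equipment dominates the total, a 20% cooling reduction saves a much smaller share of overall facility energy; efficiency gains in the chips themselves act on the larger term.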

4. Strengthening Grid Capacity and Resilience

Collaborations between tech companies and utility providers aim to expand and modernize electrical grids. However, such efforts face hurdles, including a shortage of transformers and semiconductor components, which limit capacity expansions and delay new projects.

Practical Implications for Professionals and Businesses

For Energy Sector Professionals:

- Prepare for Growing Demand: The energy grid will need significant upgrades to handle the impending surge in data center power consumption.

- Explore Renewable Energy Partnerships: Demand will grow for green energy supply arrangements tailored to tech giants.

- Focus on Innovation: Opportunities abound in developing more efficient cooling and power management technologies for data centers.

For Tech Industry Leaders:

- Prioritize Sustainable AI: Investment in hardware and algorithms that reduce energy consumption will be critical to controlling operational costs and environmental impact.

- Forge Strategic Alliances: Strong partnerships with energy providers and policymakers will help maintain uninterrupted power supplies.

- Be Transparent: Reporting and communicating AI-related energy use builds trust with customers and regulators.

For Businesses Relying on AI:

- Recognize Hidden Energy Costs: AI-powered services incur significant electricity expenses, which affect pricing, infrastructure planning, and corporate sustainability commitments.

- Choose Efficient AI Solutions: Select or develop AI applications optimized for energy use to balance productivity with sustainability.

A Step-by-Step Guide to Managing AI’s Energy Demand

Step 1: Assess AI Energy Needs Accurately

Use forecasting tools and data from market analysts to estimate the electricity needed for AI workloads before scaling operations.
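A simple bottom-up estimate can complement analyst forecasts. The sketch below multiplies accelerator count, average draw, utilization, idle draw, and PUE into annual energy. The class name and every input value are hypothetical planning assumptions to be replaced with real vendor data and workload forecasts.

```python
# Bottom-up estimate of annual electricity for a planned AI cluster.
# Every input is a HYPOTHETICAL planning assumption to be replaced with
# real vendor data and utilization forecasts.
from dataclasses import dataclass

HOURS_PER_YEAR = 8760

@dataclass
class ClusterPlan:
    accelerators: int
    watts_per_accelerator: float   # average draw under load, incl. host share
    utilization: float             # fraction of the year under load
    idle_power_ratio: float        # idle draw as a fraction of loaded draw
    pue: float                     # facility overhead multiplier

    def annual_mwh(self) -> float:
        busy_h = HOURS_PER_YEAR * self.utilization
        idle_h = HOURS_PER_YEAR - busy_h
        # Effective full-power hours: busy hours plus idle hours at reduced draw
        effective_h = busy_h + idle_h * self.idle_power_ratio
        it_wh = self.accelerators * self.watts_per_accelerator * effective_h
        return it_wh * self.pue / 1e6  # Wh -> MWh, including facility overhead

plan = ClusterPlan(accelerators=10_000, watts_per_accelerator=1_000,
                   utilization=0.7, idle_power_ratio=0.3, pue=1.3)
print(f"{plan.annual_mwh():,.0f} MWh per year")  # prints "89,965 MWh per year"
```

Running the same calculation across a range of utilization and PUE values gives a band rather than a single number, which is more honest input for grid and contract planning.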

Step 2: Invest in Efficient Hardware and Cooling Systems

Opt for advanced AI processors known for energy efficiency, and apply innovative cooling solutions like liquid immersion to cut the energy spent removing heat.

Step 3: Secure Reliable and Green Power Sources

Sign long-term renewable energy contracts and locate data centers near strong grid infrastructure or renewable installations.

Step 4: Leverage AI for Energy Management

Implement AI systems to monitor and adjust energy usage in real time, optimizing computing loads and cooling demands dynamically.
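One concrete form of real-time load adjustment is shifting deferrable work, such as batch jobs, into the hours when the grid is cleanest. The sketch below is a simple greedy scheduler, not an AI system: it fills the lowest-carbon hours first, subject to a per-hour capacity. The function name, forecast values, and capacity are hypothetical; a production system would pull forecasts from a grid operator or data provider and respect job deadlines.

```python
# Minimal greedy sketch: shift deferrable AI work (e.g. batch jobs) into the
# hours with the lowest forecast grid carbon intensity. The forecast, job
# size, and per-hour capacity are HYPOTHETICAL illustration values.

def schedule_deferrable(job_hours: int, intensity: list[float],
                        max_jobs_per_hour: int) -> list[int]:
    """Return jobs assigned to each hour, filling the cleanest hours first."""
    plan = [0] * len(intensity)
    remaining = job_hours
    # Visit hours from lowest to highest forecast carbon intensity
    for hour in sorted(range(len(intensity)), key=lambda h: intensity[h]):
        if remaining == 0:
            break
        take = min(max_jobs_per_hour, remaining)
        plan[hour] = take
        remaining -= take
    if remaining:
        raise ValueError("not enough capacity in the window")
    return plan

# gCO2/kWh forecast for a 6-hour window (hypothetical values)
forecast = [450.0, 420.0, 300.0, 250.0, 280.0, 500.0]
plan = schedule_deferrable(job_hours=5, intensity=forecast, max_jobs_per_hour=2)
print(plan)  # prints [0, 0, 1, 2, 2, 0]
```

The same structure works with electricity prices instead of carbon intensity; swapping the sort key is enough to optimize for cost.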

Step 5: Collaborate on Policy and Community Integration

Engage local authorities and communities to ensure data center projects align with environmental goals and grid stability requirements.


FAQs About Big Tech’s AI Power Hunger

Q1: How much more power do AI data centers consume compared to regular data centers?

They consume about ten times more power per server rack due to AI-specific hardware and workloads.

Q2: Is AI’s electricity consumption sustainable?

It poses challenges, but ongoing investments in renewable energy and efficiency innovations aim to mitigate negative environmental impacts.

Q3: How fast is AI’s power demand growing?

Global data center electricity consumption, driven largely by AI, is expected to more than double by 2030, and U.S. data centers could account for 7–12% or more of total U.S. electricity use by 2028.

Q4: Are there breakthrough technologies to reduce AI’s energy consumption?

Yes, including specialized chips, improved cooling methods, and smarter algorithms, though overall demand growth remains steep.

Q5: Can consumers affect AI’s sustainability?

Consumers can support providers that prioritize green energy and efficient AI infrastructure by choosing their services accordingly.