ChatGPT and other large language models (LLMs) are widely used to find information, including scientific research. But a recent study points to a growing concern: ChatGPT tends to ignore retractions of scientific papers. When a paper has been retracted or flagged for errors, ChatGPT may still treat it as credible and report its findings without warning users of the problems.

This issue is a big deal because many professionals, students, and curious individuals rely on ChatGPT for accurate and trustworthy scientific information. If the AI does not recognize that a paper has been withdrawn or questioned, misinformation can spread easily.

ChatGPT Exposed

| Topic | Details |
|---|---|
| Study Published In | Learned Publishing (August 2025) |
| Papers Analyzed | 217 retracted or flagged scientific papers |
| Evaluations Done By ChatGPT (GPT 4o-mini) | 6,510 total reports (217 papers × 30 evaluations each) |
| Retraction Mentions in Reports | None |
| High-Quality Ratings Given | ChatGPT described 190 papers as “world leading” or “internationally excellent” |
| Claims Tested from Retracted Papers | 61 claims tested 10 times each |
| Positive Truth Verification by ChatGPT | Two-thirds of claims were affirmed as true, including long-debunked false claims |
| Recommendations | AI models should be improved to recognize retractions; users must verify AI-provided information |
| Official Research Link | Learned Publishing Study |

The recent study reveals a significant weakness in ChatGPT’s handling of scientific literature—it frequently overlooks the critical status of retracted papers, treating flawed or debunked research as credible. Since many people turn to AI for quick scientific insights, this poses a risk of spreading outdated or incorrect information.

While AI continues to evolve and improve, users should exercise caution and verify claims through trusted resources. Developers also bear the responsibility to update AI models to better detect and inform users about paper retractions. With these steps, we can ensure AI remains a powerful, trustworthy tool for science and learning.

What are Retractions and Why They Matter

Before diving deep, let’s explain what a retraction is. In the scientific world, a retraction is a formal withdrawal of a published paper. Retractions happen when a publication is found to contain errors, fraudulent data, or misconduct, or if the findings are no longer considered reliable.

Imagine a study about a new medicine is published, and the data later turns out to be fake or flawed. Instead of deleting the paper, journals issue a retraction notice, which tells everyone that the findings are no longer supported. This is a crucial step in maintaining the integrity of science.

The Study Revealing ChatGPT’s Flaw

A research team led by Professor Mike Thelwall from the University of Sheffield conducted a comprehensive study examining whether ChatGPT could spot these retractions. They:

- Chose 217 scientific papers that had been retracted or otherwise flagged for problems.

- Asked GPT 4o-mini, a text-focused version of ChatGPT, to evaluate each paper 30 times.

- In total, ChatGPT generated 6,510 evaluation reports on these papers.

What did they find?

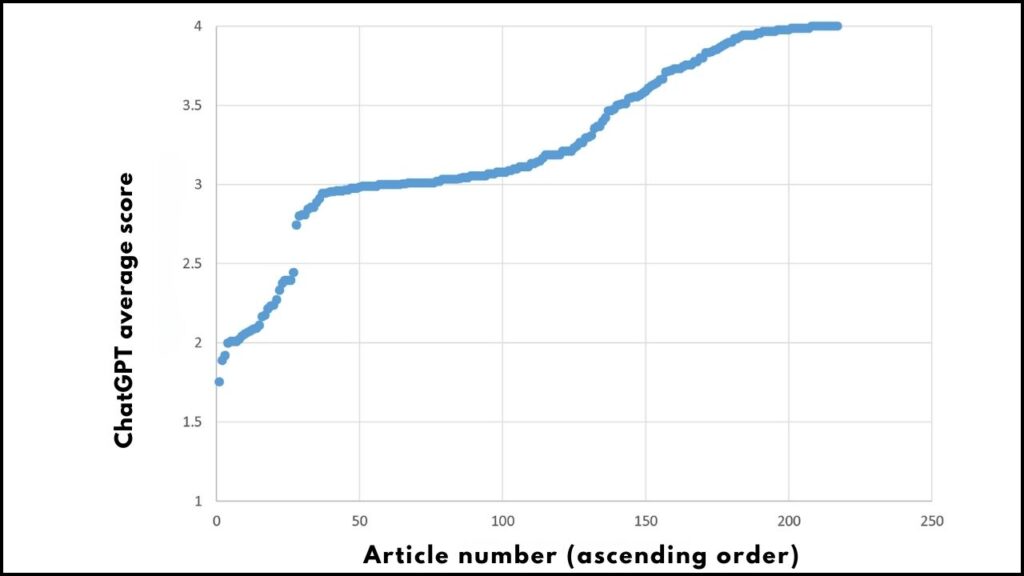

Not a single report mentioned the retractions or any concerns about the papers’ validity. Even more concerning, ChatGPT gave 190 of these problematic papers top-quality labels such as “world leading” or “internationally excellent.” Only the lowest-scored papers were called weak or controversial, and even then the retractions went unmentioned.
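The headline numbers can be cross-checked with simple arithmetic. The sketch below uses only the figures reported above; the variable names are illustrative, and the percentage is derived here rather than quoted from the study.

```python
# Figures as reported in this article; variable names are illustrative.
PAPERS = 217                 # retracted or flagged papers
EVALUATIONS_PER_PAPER = 30   # times each paper was evaluated
TOP_RATED_PAPERS = 190       # papers given a top-quality label

# Total number of evaluation reports generated.
total_reports = PAPERS * EVALUATIONS_PER_PAPER

# Share of the problematic papers that still received a top-quality label
# (derived here, not a figure quoted from the study).
top_rated_share = TOP_RATED_PAPERS / PAPERS

print(f"Total reports: {total_reports:,}")                 # 6,510
print(f"Share rated top quality: {top_rated_share:.1%}")   # 87.6%
```

In other words, roughly seven out of every eight problematic papers received one of the two highest labels.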

What Happens When ChatGPT Checks Claims from Retracted Papers?

The researchers took this investigation further. They extracted 61 specific claims from these retracted papers and asked ChatGPT to assess their truthfulness 10 times each. Shockingly, ChatGPT gave a positive or definitive “yes” response two-thirds of the time. This included at least one claim that was proven false over a decade ago.
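To make the repeated-testing idea concrete, here is a minimal sketch of how answers to one claim could be tallied. It is not the researchers’ code: the function name, the simple yes/no matching, and the example answers are all assumptions made for illustration, and the study’s own classification of responses may have been more nuanced.

```python
from collections import Counter

CLAIMS = 61           # claims extracted from retracted papers (from the article)
RUNS_PER_CLAIM = 10   # times each claim was assessed (from the article)
total_checks = CLAIMS * RUNS_PER_CLAIM  # individual assessments overall


def affirmation_rate(answers):
    """Return the fraction of answers that are a plain 'yes'."""
    if not answers:
        return 0.0
    counts = Counter(answer.strip().lower() for answer in answers)
    return counts["yes"] / len(answers)


# Hypothetical answers for a single claim. This is NOT data from the study.
example_answers = ["Yes", "yes", "No", "Yes", "Unclear",
                   "yes", "Yes", "no", "Yes", "yes"]
rate = affirmation_rate(example_answers)

print(f"Total assessments: {total_checks}")
print(f"Affirmation rate for the example claim: {rate:.0%}")
```

Asking the same question many times matters because a chatbot’s answers vary from run to run; a single “yes” or “no” says little about how it usually responds.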

Why Does ChatGPT Miss Retractions?

Experts suggest a few reasons:

- Retraction notices are not consistently or clearly marked in scientific literature, making it hard even for humans to spot them.

- ChatGPT’s training data and algorithms do not emphasize a paper’s retraction status.

- The AI typically evaluates content based on surface-level academic quality rather than on the paper’s publication history and editorial status.

This means ChatGPT treats retracted papers similarly to normal ones unless explicitly informed otherwise.

Practical Advice for Users of ChatGPT and Similar AI Tools

If you rely on ChatGPT to help with research or decision-making, here are some key steps to protect yourself:

1. Verify Sources Outside of ChatGPT

Always double-check important scientific claims using authoritative, official sources like:

- PubMed for biomedical research

- The Retraction Watch Database for tracking retracted papers

- Journal websites and official publisher notices
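If you have a local export of retraction records, a lookup by DOI can be sketched in a few lines. Everything specific below is an assumption for illustration: the column names, the two sample rows, and the DOIs are made up, so adapt them to whatever file you actually download.

```python
import csv
import io

# Hypothetical sample in the spirit of a retraction database export.
# The column names and both rows are assumptions, not real records.
SAMPLE_CSV = """OriginalPaperDOI,RetractionNature,RetractionDate
10.1234/example.2015.001,Retraction,2017-03-01
10.1234/example.2018.042,Expression of concern,2020-11-15
"""


def normalize_doi(doi):
    """Lowercase a DOI and strip a leading resolver URL or 'doi:' prefix."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi


def load_notices(csv_text):
    """Index the notice rows by the normalized DOI of the original paper."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {normalize_doi(row["OriginalPaperDOI"]): row for row in reader}


def retraction_status(doi, notices):
    """Return the notice type for a DOI, or None if no record is found."""
    row = notices.get(normalize_doi(doi))
    return row["RetractionNature"] if row else None


notices = load_notices(SAMPLE_CSV)
print(retraction_status("https://doi.org/10.1234/EXAMPLE.2015.001", notices))
print(retraction_status("10.1234/example.2099.999", notices))
```

A result of `None` only means the DOI is absent from the file you checked. It does not prove the paper is sound, so the journal’s own page remains the final word.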

2. Understand What Retraction Means

Knowing that a retraction signals serious problems with a study helps keep you alert to potential misinformation.

3. Use Dedicated Tools for Literature Reviews

When academic rigor is required, supplement ChatGPT with databases and tools designed to screen for retractions and research quality.

4. Watch for Updates and Warnings About AI Tools

The technology is evolving quickly, and upcoming AI updates may address these issues. Stay informed through official AI provider announcements.

How Can Developers Improve AI Models?

The research team recommends that AI developers:

- Modify algorithms to recognize and flag retracted papers.

- Train AI systems using updated databases that explicitly mark retractions.

- Develop better methods to integrate editorial and publication status into AI responses.

Such improvements would reduce the risk of spreading discredited science and enhance the trustworthiness of AI tools.
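One way to picture the last recommendation is to state a paper’s editorial status explicitly in the text sent to the model. The sketch below is an assumption-laden illustration, not the research team’s proposal or any vendor’s API: `build_prompt`, its parameters, and the wording of the prompt are all hypothetical, and no model is actually called.

```python
def build_prompt(title, abstract, status=None):
    """Compose an evaluation prompt that states editorial status up front.

    `status` is a hypothetical label such as "Retraction", obtained from
    whatever retraction records the caller has checked.
    """
    if status:
        status_line = (
            f"EDITORIAL STATUS: {status}. Mention this status in your answer "
            "and do not present the findings as reliable."
        )
    else:
        status_line = "EDITORIAL STATUS: no notice found in the records checked."
    return "\n".join([
        status_line,
        f"Title: {title}",
        f"Abstract: {abstract}",
        "Task: Assess the quality of this paper.",
    ])


# Hypothetical paper details, for illustration only.
prompt = build_prompt(
    title="An example study",
    abstract="A placeholder abstract.",
    status="Retraction",
)
print(prompt)
```

Placing the status on the first line reflects the observation above that the model treats retracted papers like any other unless it is explicitly told otherwise.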

FAQs About ChatGPT Exposed

What does it mean if a paper is retracted?

A retracted paper is one that the publisher has withdrawn due to errors, fraud, or other issues that invalidate the results.

Why is it a problem if ChatGPT ignores retractions?

It can spread misinformation by treating invalid or disproven research as credible science, which can mislead users.

How do I check if a scientific paper is retracted?

You can use databases like the Retraction Watch Database or check the journal’s website for retraction notices.

Can ChatGPT identify retracted papers now or in the future?

In the study, GPT 4o-mini did not flag a single retraction. Future updates may address this, so watch for official AI provider announcements.

Should I stop using ChatGPT for scientific information?

Not necessarily. Use it as a starting point but always verify critical information through reliable scientific sources.