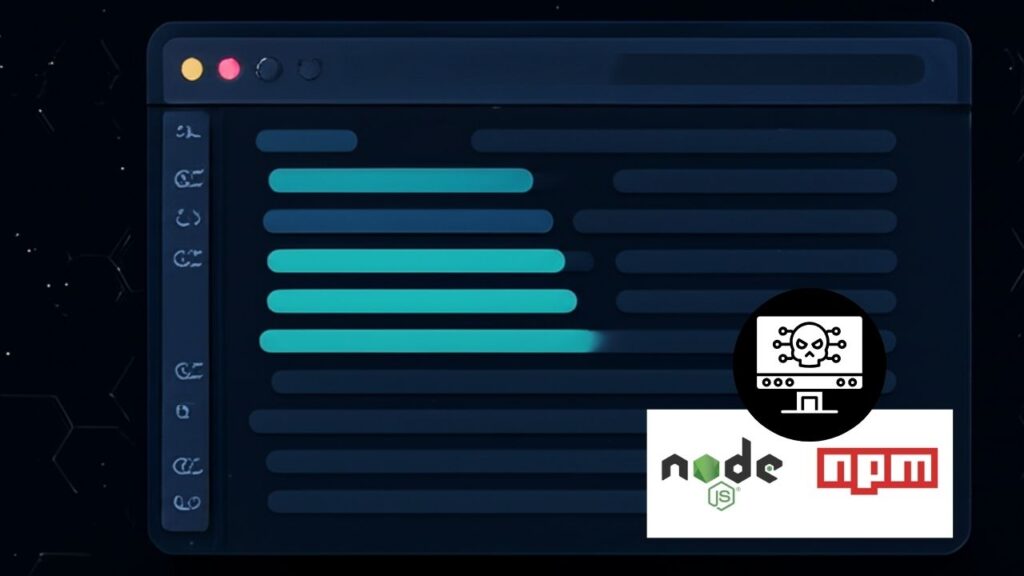

In 2025, the AI-powered code editor Cursor AI shocked the developer community when researchers disclosed serious security flaws that could let attackers take full control of a user's computer, either through a carefully crafted prompt or through malicious packages. The incident showed not only the power and convenience of AI-assisted programming tools but also the security risks that come with integrating artificial intelligence deeply into software development environments.

This article will explain, in simple yet professional terms, what exactly happened with Cursor AI’s backdoor vulnerability, how attackers exploited it, what measures have been taken to fix it, and most importantly, how you can protect yourself and your projects from similar threats.

What Happened with Cursor AI Editor?

Cursor AI is a popular code editor used by thousands of developers around the world, known for using AI to generate and complete code. However, the excitement around this innovation was tempered when security researchers uncovered several related vulnerabilities that together allowed attackers to:

- Distribute malicious npm packages that tampered with users' local Cursor installations.

- Exploit a prompt injection flaw where the AI assistant could be tricked into executing unauthorized commands hidden in normal-looking text files like README documents.

- Weaponize Cursor’s AI configuration files, called rules files, by embedding invisible or disguised malicious instructions that caused Cursor to generate dangerous code without the user's knowledge.

Because of these combined flaws, hackers could remotely run any code on a victim’s machine, steal sensitive data such as API keys and authentication tokens, and maintain persistent control over the device — all without the user noticing.

Cursor AI Editor Had a Terrifying Backdoor

| Topic | Details |
|---|---|
| Vulnerability Types | Supply chain attack, prompt injection, and rules file backdoor |
| Number of Downloads | 3,200+ malicious npm package installs |
| Impact | Remote code execution & credential theft |
| Affected Users | Mainly students, junior developers, and enterprise teams |
| Patch Released | Version 1.3, July 29, 2025 |
| Security Certifications | SOC 2 Type II certified |
| Official Security Resource | Cursor Security Page |

The terrifying backdoor vulnerability discovered in Cursor AI Editor underlines a crucial reality: while AI can greatly accelerate programming, it also expands the security attack surface in novel and sophisticated ways. Developers and organizations must balance the convenience of AI with strong security awareness.

By promptly updating software, auditing dependencies, monitoring code inputs, and educating teams about risks like supply chain attacks and prompt injection, you can safely harness the power of AI coding assistants without falling victim to devastating backdoor exploits.

How Did the Attack Work?

1. Supply Chain Attack Through Malicious npm Packages

Attackers uploaded three malicious npm packages named sw-cur, sw-cur1, and aiide-cur. These packages appeared legitimate but contained malicious code. Developers, mostly students and juniors, unknowingly installed them. Once installed:

- Core files critical for Cursor’s operation, such as `main.js`, were silently replaced.

- Auto-update features were disabled, preventing automatic security fixes.

- A secret backdoor was installed, allowing attackers to remotely execute any command on the victim’s system.

This attack vector was particularly dangerous because npm is a widely used package manager, and many developers trust packages implicitly without thorough checks.
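As a first check, you can search a project for the three package names reported above. The Python sketch below is a minimal illustration, not an official detection tool: it walks every `package.json` under a directory (including those inside `node_modules`) and reports any manifest that either depends on, or is itself, one of those packages. The function names are hypothetical, and a clean result only rules out these specific names, not renamed or newer malicious packages.

```python
from __future__ import annotations

import json
from pathlib import Path

# Malicious package names reported in the article; extend as new advisories appear.
KNOWN_MALICIOUS = {"sw-cur", "sw-cur1", "aiide-cur"}

# Dependency sections that npm recognizes in package.json.
DEP_SECTIONS = ("dependencies", "devDependencies", "optionalDependencies", "peerDependencies")


def find_malicious_names(package_json: Path) -> set[str]:
    """Return known-malicious names a manifest depends on or is itself named."""
    manifest = json.loads(package_json.read_text(encoding="utf-8"))
    names: set[str] = set()
    if not isinstance(manifest, dict):
        return names
    # A manifest inside node_modules/<pkg>/ carries the installed package's own name.
    if isinstance(manifest.get("name"), str):
        names.add(manifest["name"])
    for section in DEP_SECTIONS:
        deps = manifest.get(section)
        if isinstance(deps, dict):
            names.update(deps)
    return names & KNOWN_MALICIOUS


def scan_tree(root: Path) -> dict[Path, set[str]]:
    """Map each package.json under root to the malicious names it references."""
    hits: dict[Path, set[str]] = {}
    for manifest in root.rglob("package.json"):
        try:
            found = find_malicious_names(manifest)
        except (OSError, UnicodeDecodeError, json.JSONDecodeError):
            continue  # skip unreadable or malformed manifests
        if found:
            hits[manifest] = found
    return hits


if __name__ == "__main__":
    for path, names in scan_tree(Path(".")).items():
        print(f"{path}: {', '.join(sorted(names))}")
```

To cover globally installed packages as well, you could point `scan_tree` at the directory printed by `npm root -g`.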

2. Prompt Injection Attacks: Hiding Malicious Commands in Text

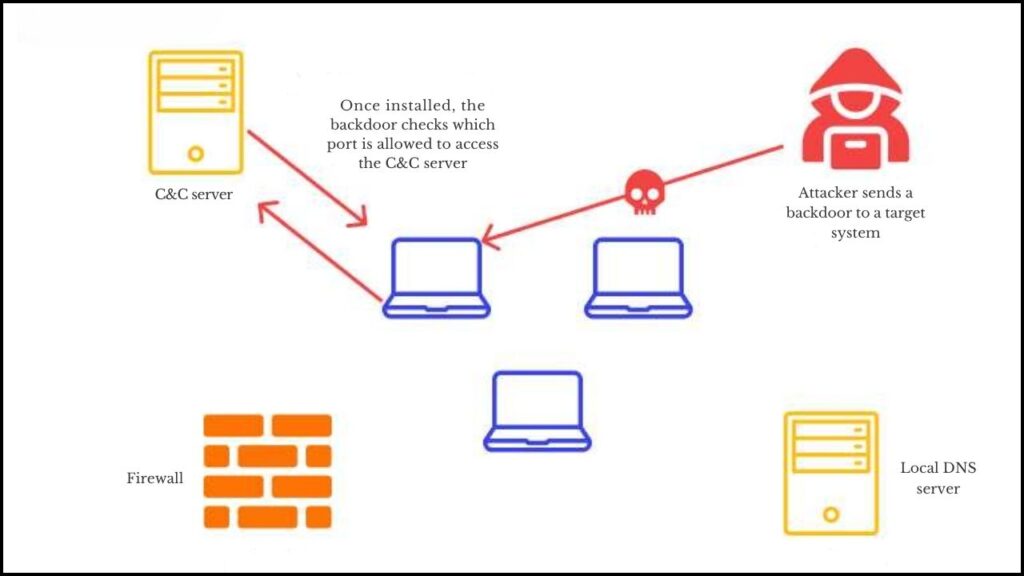

Prompt injection is a form of attack where malicious instructions are covertly embedded into seemingly innocent text files, for example GitHub README files or project documentation. Because Cursor’s AI assistant reads these files as part of its context, it could be tricked into executing commands written by the attackers.

Through indirect prompt injection, in which the malicious instructions reach the assistant through content it reads rather than from the user directly, attackers bypassed Cursor’s built-in deny lists and user permissions, enabling the AI assistant to:

- Steal sensitive credentials such as API keys.

- Run shell commands remotely.

- Circumvent security controls the user had explicitly configured, without the user's knowledge.
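To make the idea concrete, here is a deliberately naive Python sketch that flags lines in a README or similar file matching a few common injection patterns before you let an assistant read it. The pattern names and regular expressions are illustrative assumptions, not Cursor's deny-list logic; attackers can easily paraphrase around them, so treat a clean result as "nothing obvious found" rather than proof of safety.

```python
from __future__ import annotations

import re

# Illustrative heuristics only (hypothetical names and patterns). Real injections
# can be paraphrased freely, so no match does not mean a file is safe.
SUSPICIOUS_PATTERNS = {
    # Classic attempt to override the assistant's existing instructions.
    "override": re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    # Downloading a script and piping it straight into a shell.
    "pipe-to-shell": re.compile(r"\b(curl|wget)\b[^\n|]*\|\s*(ba|z)?sh\b", re.IGNORECASE),
    # HTML comments are invisible when Markdown is rendered but visible to the model.
    "hidden-comment": re.compile(r"<!--"),
    # Instructions to keep actions secret from the user.
    "secrecy": re.compile(r"\b(do not|don't) (tell|inform|mention)\b", re.IGNORECASE),
}


def flag_suspicious(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) pairs for every heuristic match."""
    findings: list[tuple[int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SUSPICIOUS_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


if __name__ == "__main__":
    import sys

    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="replace") as fh:
            for lineno, name in flag_suspicious(fh.read()):
                print(f"{path}:{lineno}: {name}")
```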

3. Rules File Backdoor — Weaponizing AI Instruction Files

Security researchers identified a novel and stealthy supply chain attack named Rules File Backdoor. Cursor allows developers to write AI configuration files—called rules files—that guide how the AI generates code to fit project guidelines.

Attackers exploited this by embedding hidden malicious instructions inside these rules files, often using invisible Unicode characters that evade human and automated detection during code reviews.

When Cursor processed these poisoned rules files, it would:

- Generate code containing attacker-controlled backdoors.

- Inject hidden malicious scripts silently.

- Do all this without leaving obvious evidence in the code or logs.

Since rules files are commonly shared across projects and open-source communities, this technique created a highly scalable and stealthy attack vector that could compromise entire software ecosystems.
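Because the rules file attack relies on characters that render as nothing, an automated scan is more reliable than eyeballing a diff. The Python sketch below flags every character in Unicode general category `Cf` (format characters), which includes zero-width spaces and joiners, bidirectional overrides, the byte-order mark, and the invisible "tag" characters. It assumes rules live in a legacy `.cursorrules` file or in `.mdc` files under `.cursor/rules/`; adjust the paths if your setup differs. Expect occasional false positives, for example zero-width joiners inside emoji sequences.

```python
from __future__ import annotations

import sys
import unicodedata
from pathlib import Path


def find_invisible_chars(text: str) -> list[tuple[int, int, str]]:
    """Return (line, column, code point) for every Unicode format (Cf) character.

    Category Cf includes zero-width spaces and joiners, bidirectional controls,
    the byte-order mark, and tag characters (U+E0000 block), all of which are
    invisible or reorder text in most editors and diff views.
    """
    hits: list[tuple[int, int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                hits.append((lineno, col, f"U+{ord(ch):04X}"))
    return hits


def scan_rules(root: Path) -> dict[Path, list[tuple[int, int, str]]]:
    """Scan assumed Cursor rules locations under root; return only files with hits."""
    candidates = [*root.glob(".cursorrules"), *root.glob(".cursor/rules/**/*.mdc")]
    results: dict[Path, list[tuple[int, int, str]]] = {}
    for path in candidates:
        hits = find_invisible_chars(path.read_text(encoding="utf-8", errors="replace"))
        if hits:
            results[path] = hits
    return results


if __name__ == "__main__":
    findings = scan_rules(Path("."))
    for path, hits in findings.items():
        for line, col, codepoint in hits:
            print(f"{path}:{line}:{col}: invisible character {codepoint}")
    sys.exit(1 if findings else 0)  # non-zero exit lets CI fail the build
```

Running it in CI and failing the build on a non-zero exit code is one way to keep poisoned rules files out of shared repositories.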

What Has Cursor Done to Fix These Issues?

Once these vulnerabilities came to light, Cursor’s developers took immediate action. They issued a critical security update, version 1.3, on July 29, 2025, addressing the remote code execution vulnerability and other related flaws.

Cursor also reinforced their security posture by:

- Engaging third-party penetration testers to assess ongoing risks.

- Achieving SOC 2 Type II certification, which validates the organization’s stringent security controls.

- Providing users with guidance on how to safely use Cursor, including best practices like adding a `.cursorignore` file to exclude sensitive files from AI processing.

- Maintaining a public-facing security page detailing updates and advice for safe usage.

Despite these fixes, users are still urged to remain vigilant and cautious, especially when working with sensitive code or dependencies.

How Can You Protect Yourself?

Whether you are a student, professional developer, or team lead, these attacks reveal important lessons about the current state of AI-assisted development tools. Here are practical steps you can take:

Step 1: Keep Your Software Updated

Make sure you always use the latest version of Cursor AI or any AI coding assistant. Security patches are your first line of defense against newly discovered vulnerabilities.
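If you want a quick scripted check, the Python sketch below compares a version string against 1.3, the release the article identifies as containing the fix. It assumes you copy the version from the editor's About dialog or from whatever version command your installation provides; the helper names are hypothetical. Parsing the numbers into a tuple avoids the classic string-comparison bug where `"1.10"` sorts before `"1.3"`.

```python
from __future__ import annotations

import re

# The article reports that Cursor 1.3 (July 29, 2025) shipped the fix.
MIN_PATCHED = (1, 3)


def parse_version(text: str) -> tuple[int, ...]:
    """Extract the first dotted version number from text, e.g. '1.2.4' -> (1, 2, 4)."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group().split("."))


def is_patched(version_text: str, minimum: tuple[int, ...] = MIN_PATCHED) -> bool:
    """True if the version is at or above the minimum patched release.

    Tuples compare element by element, so (1, 10) > (1, 3), unlike the strings.
    """
    return parse_version(version_text) >= minimum
```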

Step 2: Carefully Vet Third-Party Packages

Only install npm packages or external libraries from trusted sources. Check the number of downloads, active maintainers, and community feedback before incorporating any third-party components.
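Part of this vetting can be automated against the public npm registry. The Python sketch below is a hypothetical helper, not an npm feature: it reads a package's registry metadata and last-week download count and lists a few warning signs, such as a very recent first publication, low downloads, no declared source repository, and install-time scripts (a common hook for malicious payloads). The thresholds are arbitrary assumptions, and none of these signals replaces reading the code or checking community feedback.

```python
from __future__ import annotations

import json
import urllib.request
from datetime import datetime, timezone

# Public npm endpoints; unscoped package names only in this sketch.
REGISTRY_URL = "https://registry.npmjs.org/"
DOWNLOADS_URL = "https://api.npmjs.org/downloads/point/last-week/"


def fetch_json(url: str) -> dict:
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def warning_signs(packument: dict, weekly_downloads: int, now: datetime,
                  min_age_days: int = 90, min_weekly: int = 1000) -> list[str]:
    """List warning signs in npm registry metadata; thresholds are arbitrary."""
    warnings: list[str] = []

    created = packument.get("time", {}).get("created")
    if created:
        first_published = datetime.fromisoformat(created.replace("Z", "+00:00"))
        age_days = (now - first_published).days
        if age_days < min_age_days:
            warnings.append(f"first published only {age_days} days ago")
    else:
        warnings.append("no creation date in registry metadata")

    if weekly_downloads < min_weekly:
        warnings.append(f"only {weekly_downloads} downloads in the last week")

    # Inspect the manifest of the version that `npm install <name>` would pick.
    latest = packument.get("dist-tags", {}).get("latest")
    manifest = packument.get("versions", {}).get(latest, {})
    if not manifest.get("repository"):
        warnings.append("latest version declares no source repository")
    scripts = manifest.get("scripts", {})
    for hook in ("preinstall", "install", "postinstall"):
        if hook in scripts:
            warnings.append(f"runs a {hook} script during installation")
    return warnings


def check(name: str) -> list[str]:
    """Fetch live metadata for an unscoped package and return its warning signs."""
    packument = fetch_json(REGISTRY_URL + name)
    downloads = fetch_json(DOWNLOADS_URL + name).get("downloads", 0)
    return warning_signs(packument, downloads, datetime.now(timezone.utc))
```

The network-free `warning_signs` function is kept separate from `check` so it can be tested on saved metadata. Scoped names such as `@scope/name` would need URL encoding, which this sketch omits.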

Step 3: Scrutinize Files Used by AI Assistants

Be cautious about which files your AI assistant ingests. README files, configuration files, and hidden rules files can all contain malicious payloads. Review them manually, or restrict the AI's access to them where possible.

Step 4: Monitor Network and System Activity

Keep an eye on unusual network activity, outbound connections, or unexpected system behavior during your coding sessions as these might indicate compromise.
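Since the npm attack worked by silently replacing Cursor's `main.js`, one concrete form of monitoring is to record cryptographic hashes of critical editor files and re-check them later. The Python sketch below does that with SHA-256. Which files to watch, and where they live on your system, are assumptions you must fill in; legitimate updates also change these files, so re-record the baseline after each trusted update.

```python
from __future__ import annotations

import hashlib
import json
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def save_baseline(files: list[Path], baseline: Path) -> None:
    """Record the current SHA-256 of each watched file as JSON."""
    hashes = {str(f): sha256_of(f) for f in files}
    baseline.write_text(json.dumps(hashes, indent=2), encoding="utf-8")


def changed_files(baseline: Path) -> list[str]:
    """Return watched files that are missing or whose hash no longer matches."""
    recorded = json.loads(baseline.read_text(encoding="utf-8"))
    changed: list[str] = []
    for name, expected in recorded.items():
        path = Path(name)
        if not path.is_file() or sha256_of(path) != expected:
            changed.append(name)
    return changed
```

For example, call `save_baseline([Path("/path/to/cursor/main.js")], Path("baseline.json"))` once after a trusted install, then run `changed_files(Path("baseline.json"))` periodically; the path shown is a placeholder, not Cursor's actual install location.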

Step 5: Use Available Security Features

Use features such as Workspace Trust and `.cursorignore` files (to keep sensitive paths away from the AI), and enable code-signing or similar integrity protections if later versions of the editor add them.

Step 6: Educate Your Team

Make sure your team understands the nature of AI tool risks and adopts safe coding and dependency management practices as a part of your development culture.


FAQs About the Cursor AI Editor Backdoor

What is prompt injection in AI coding assistants?

Prompt injection occurs when malicious instructions are embedded inside the text data that AI models use to generate code, tricking them into executing harmful or unauthorized commands.

How severe was the Cursor AI security flaw?

The remote code execution flaw fixed in version 1.3 was rated high severity, with a CVSS score of 8.6. It could allow remote code execution and theft of sensitive credentials on vulnerable machines.

Are other AI coding editors affected by similar problems?

Yes. Other AI coding tools, such as GitHub Copilot, have shown similar weaknesses, including prompt injection and rules file poisoning. Vigilance is important across all AI-assisted tools.

How do I ensure that my Cursor AI is secure?

Always update to Cursor AI version 1.3 or later and follow security guidelines provided on Cursor’s official security page.

Is it safe to trust AI coding assistants now?

AI coding tools are powerful aids but should never be fully trusted without human review. Always verify generated code and maintain strict security hygiene.