Future of Cloud Computing Depends on Custom-Built Silicon Chips

Cloud computing is changing the way we live, work, and connect. But what’s powering this digital revolution behind the scenes? According to Marvell Technology, the answer is clear: the future of cloud computing depends on custom-built silicon chips. As artificial intelligence (AI) and data-driven applications become more demanding, the need for specialized, high-performance chips is greater than ever.

In this article, we’ll explore why custom silicon is critical for the next era of cloud computing, how Marvell is leading the charge with groundbreaking innovations, and what this means for businesses, technology professionals, and everyday users. Whether you’re a tech enthusiast, a business leader, or just curious about the future of the cloud, this guide will break down the essentials in a way that’s easy to understand and actionable.


| Feature/Fact | Details |

|---|---|

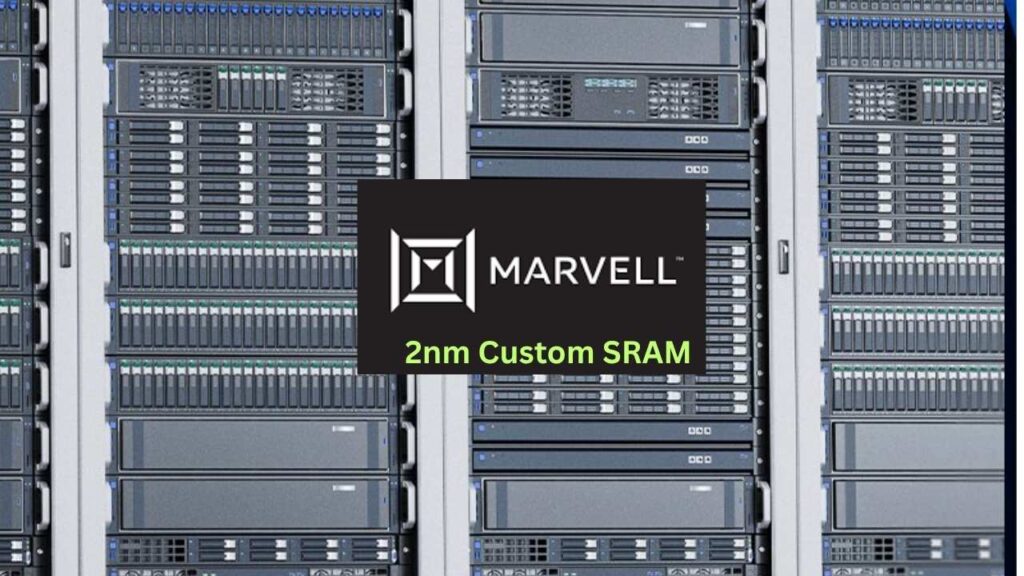

| Industry Breakthrough | Marvell unveils the world’s first 2nm custom SRAM, boosting AI and cloud chip performance |

| Efficiency Gains | Up to 66% less power consumption and 15% chip area savings vs. standard SRAM |

| Market Impact | Custom silicon projected to account for 25% of accelerated compute market by 2028 |

| Major Customers | Amazon, Meta, Microsoft, Google among hyperscalers adopting Marvell’s solutions |

| Professional Opportunities | Demand for chip designers, AI engineers, and cloud architects rising rapidly |

| Official Resource | Marvell Technology Website |

The message from Marvell is clear: the future of cloud computing depends on custom-built silicon chips. As AI, big data, and digital services continue to grow, only specialized, efficient, and powerful chips can meet the world’s rising demands. Marvell’s innovations in 2nm custom SRAM and other advanced technologies are setting new standards for performance, efficiency, and scalability, shaping the digital infrastructure of tomorrow.

For professionals, businesses, and users alike, the era of custom silicon is here—and it’s transforming the way we experience the cloud.

Why Custom-Built Silicon Chips Matter in Cloud Computing

The Challenge: Standard Chips Can’t Keep Up

Cloud computing powers everything from streaming movies to running complex AI models. Traditional, off-the-shelf chips (like generic CPUs or GPUs) were sufficient for earlier workloads. But today, cloud data centers must handle:

- Massive AI models with billions of parameters

- Real-time analytics for millions of users

- Explosive growth in data storage and retrieval

These tasks require more than just raw power—they need efficiency, speed, and adaptability. Standard chips often fall short, leading to bottlenecks, higher costs, and wasted energy.

The Solution: Custom Silicon

Custom-built silicon chips—also known as application-specific integrated circuits (ASICs)—are designed for specific tasks. By tailoring every part of the chip, from memory to interconnects, engineers can:

- Maximize performance for targeted workloads (like AI inference or data encryption)

- Minimize power consumption and heat

- Reduce the physical size and cost of data center hardware

This approach is transforming how cloud providers like Amazon, Microsoft, and Google build their infrastructure, enabling them to deliver faster, more reliable services to users worldwide.

Marvell’s Breakthrough: The World’s First 2nm Custom SRAM

What Is SRAM and Why Is 2nm Important?

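SRAM (Static Random Access Memory) is a type of fast, on-chip memory that holds the data a processor needs immediately, which makes it crucial for keeping compute units supplied at high speed. The “2nm” refers to the semiconductor manufacturing process generation. The name is a node label rather than a literal feature size, but newer, smaller-numbered nodes pack more transistors into the same space, leading to: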

- Faster processing

- Lower power usage

- More features in a smaller chip

Marvell’s new 2nm custom SRAM is a game-changer for AI and cloud data centers.

Key Benefits of Marvell’s 2nm Custom SRAM

- Highest Bandwidth per Square Millimeter: Enables faster data movement, critical for AI workloads.

- Up to 6 Gigabits of High-Speed Memory: Supports larger AI models and faster computations.

- Up to 15% Chip Area Savings: Designers can use the saved space to add more compute cores or reduce device size.

- Up to 66% Less Power Consumption: Compared with standard on-chip SRAM, this reduces energy costs and environmental impact.

- Up to 3.75 GHz Operation: Handles demanding, real-time tasks with ease.

These innovations allow cloud providers to build smaller, faster, and more efficient data centers, directly benefiting end users with quicker services and lower costs.
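The percentages above are relative, so their real effect depends on the chip they are applied to. The Python sketch below applies the best-case “up to” figures to a hypothetical baseline. The 20 W of SRAM power and the 600 mm² die are assumptions chosen for illustration, not Marvell data, and the 15% figure is treated here, as an assumption, as a share of total die area.

```python
# Hypothetical baseline figures for illustration only; NOT Marvell data.
BASELINE_SRAM_POWER_W = 20.0   # assumed power drawn by standard on-chip SRAM
BASELINE_DIE_AREA_MM2 = 600.0  # assumed total die area

POWER_REDUCTION = 0.66  # "up to 66% less power" (best case)
AREA_SAVINGS = 0.15     # "up to 15% chip area savings" (best case, assumed share of die)


def apply_savings(baseline: float, fraction_saved: float) -> float:
    """Return what remains after removing fraction_saved of baseline."""
    if not 0.0 <= fraction_saved <= 1.0:
        raise ValueError("fraction_saved must be between 0 and 1")
    return baseline * (1.0 - fraction_saved)


custom_power = apply_savings(BASELINE_SRAM_POWER_W, POWER_REDUCTION)
freed_area = BASELINE_DIE_AREA_MM2 * AREA_SAVINGS

print(f"SRAM power: {BASELINE_SRAM_POWER_W:.1f} W -> {custom_power:.1f} W")
print(f"Die area freed for other logic: {freed_area:.0f} mm^2")
```

Because both figures are upper bounds, a real design would land somewhere between the baseline and these best-case results.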

How Custom Silicon Powers the Future of AI and Cloud

Meeting the Demands of AI

AI applications are growing rapidly, with models such as GPT-4 and its successors requiring unprecedented amounts of memory and compute power. Custom silicon solutions, like Marvell’s, are designed to:

- Add Terabytes of Memory: Using technologies like CXL (Compute Express Link), cloud servers can attach memory beyond what the processor’s own memory channels support.

- Boost Memory Capacity by Up to 33%: Custom HBM (High Bandwidth Memory) stacks enable denser, more efficient AI accelerators.

- Optimize Power and Performance: Custom chips balance speed and energy use, critical for large-scale AI clusters.
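A rough way to see why memory capacity matters: a model’s weights alone need about (number of parameters) × (bytes per parameter) of memory. The sketch below applies that rule to a hypothetical 405-billion-parameter model stored in FP16 and an assumed 96 GB of HBM per accelerator, then applies the best-case 33% capacity increase. It deliberately ignores activations, KV caches, and optimizer state, so real deployments need more memory than this estimate.

```python
import math

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def accelerators_needed(model_gb: float, hbm_per_device_gb: float) -> int:
    """Minimum devices whose combined HBM can hold the weights (no overheads)."""
    return math.ceil(model_gb / hbm_per_device_gb)


# Hypothetical figures for illustration only.
params = 405_000_000_000                 # assumed 405B-parameter model
baseline_hbm_gb = 96.0                   # assumed HBM per accelerator, standard stacks
custom_hbm_gb = baseline_hbm_gb * 1.33   # "up to 33%" more capacity (best case)

model_gb = weight_memory_gb(params, "fp16")
print(f"FP16 weights: {model_gb:.0f} GB")
print("Devices (standard HBM):", accelerators_needed(model_gb, baseline_hbm_gb))
print("Devices (custom HBM):  ", accelerators_needed(model_gb, custom_hbm_gb))
```

Under these assumptions, the extra capacity lets the same weights fit on fewer accelerators, which is where the density gain turns into hardware savings.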

Real-World Example: Hyperscale Data Centers

Companies like Amazon, Meta, Microsoft, and Google are known as “hyperscalers” because they operate massive, hyperscale data centers. These facilities process everything from search queries to social media feeds. By adopting Marvell’s custom silicon, hyperscalers can:

- Accelerate AI Training and Inference: Handle more complex models faster and at lower cost.

- Reduce Data Center Footprint: Fit more computing power into the same or smaller physical space.

- Lower Energy Bills: Cut the electricity used both to run the hardware and to cool it, savings that add up quickly at hyperscale.
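Energy savings compound because every watt a chip draws must also be cooled. A common way to capture that is PUE (power usage effectiveness), the ratio of total facility power to IT power. The sketch below estimates annual electricity cost for a hypothetical 10 MW IT load. The PUE of 1.2, the $0.08/kWh price, and the 10% reduction in IT power attributed to custom silicon are all assumptions for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_cost(it_power_kw: float, pue: float, price_per_kwh: float) -> float:
    """Yearly electricity cost for the whole facility.

    Facility power = IT power x PUE, so cooling and other overheads scale
    with the power the chips themselves draw.
    """
    facility_power_kw = it_power_kw * pue
    return facility_power_kw * HOURS_PER_YEAR * price_per_kwh


# Hypothetical inputs for illustration only.
PUE = 1.2
PRICE_PER_KWH = 0.08                   # USD per kWh
baseline_it_kw = 10_000.0              # assumed 10 MW of servers
custom_it_kw = baseline_it_kw * 0.90   # assumed 10% lower IT power with custom chips

baseline_cost = annual_energy_cost(baseline_it_kw, PUE, PRICE_PER_KWH)
custom_cost = annual_energy_cost(custom_it_kw, PUE, PRICE_PER_KWH)
print(f"Baseline: ${baseline_cost:,.0f}/year")
print(f"Custom:   ${custom_cost:,.0f}/year")
print(f"Savings:  ${baseline_cost - custom_cost:,.0f}/year")
```

With these assumed inputs the sketch reports about $840,960 per year in savings for a single 10 MW site; real figures depend entirely on the actual load, PUE, and electricity price.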

Step-by-Step Guide: How Custom Silicon Is Developed and Deployed

1. Identify the Need

Cloud providers and AI companies work with partners like Marvell to define their unique requirements—such as speed, memory, power, and cost.

2. Design the Chip

Engineers create a blueprint, selecting the best technologies (SRAM, HBM, advanced interconnects) to meet the goals.

3. Manufacture with Advanced Processes

Using leading-edge manufacturing (like TSMC’s 2nm process), the custom chip is fabricated, packing billions of transistors into a tiny area.

4. Integrate and Test

The chip is integrated into servers or AI accelerators, tested for performance, reliability, and efficiency.

5. Deploy at Scale

Once validated, the custom silicon is rolled out across data centers, powering cloud services for millions of users.
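Steps 1 and 4 meet at an acceptance check: the targets defined at the start are compared against what testing actually measured. The sketch below is a hypothetical illustration of such a gate; the metric names, thresholds, and prototype numbers are invented for the example and do not describe any real Marvell workflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Targets agreed in step 1 (hypothetical metrics and values)."""
    min_throughput: float      # e.g. inferences per second
    max_power_w: float
    max_unit_cost_usd: float


@dataclass(frozen=True)
class Measurements:
    """Results gathered during integration and testing (step 4)."""
    throughput: float
    power_w: float
    unit_cost_usd: float


def failed_checks(req: Requirements, meas: Measurements) -> list[str]:
    """Return the requirements the chip misses; an empty list means ready to deploy."""
    failures = []
    if meas.throughput < req.min_throughput:
        failures.append("throughput")
    if meas.power_w > req.max_power_w:
        failures.append("power")
    if meas.unit_cost_usd > req.max_unit_cost_usd:
        failures.append("cost")
    return failures


req = Requirements(min_throughput=10_000, max_power_w=500, max_unit_cost_usd=2_000)
prototype = Measurements(throughput=11_500, power_w=540, unit_cost_usd=1_800)

problems = failed_checks(req, prototype)
print("Ready for step 5" if not problems else f"Back to step 2, fix: {problems}")
```

With these numbers the prototype misses only the power target, so it would return to design rather than move on to deployment.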

The Economic and Professional Impact

Market Growth and Opportunities

- Custom silicon is projected to make up 25% of the accelerated compute market by 2028, driven by AI and cloud demand.

- Career opportunities are booming for chip designers, AI engineers, and cloud architects with expertise in custom hardware.

For Businesses

- Investing in custom silicon can lead to lower total cost of ownership (TCO), better performance, and a competitive edge in the digital marketplace.

For End Users

- Expect faster, smarter, and more reliable cloud services—from streaming to online gaming to AI-powered apps.


FAQs About the Future of Cloud Computing and Custom-Built Silicon Chips

Q1: What is custom silicon?

Custom silicon refers to chips designed for specific tasks or customers, rather than generic, one-size-fits-all processors.

Q2: Why is 2nm technology important?

A 2nm-class process packs more transistors into the same area than older nodes, which generally lets chips run faster while using less power.

Q3: How does this affect cloud computing?

Custom chips make cloud data centers faster, more energy-efficient, and better suited for AI and large-scale applications.

Q4: Who uses Marvell’s custom silicon?

Major cloud providers like Amazon, Meta, Microsoft, and Google are already leveraging Marvell’s solutions.

Q5: What are the career prospects in this field?

There’s high demand for professionals in chip design, AI engineering, and cloud infrastructure—skills in custom silicon are especially valuable.