Artificial intelligence has come a long way, but Gemini AI’s refusal to play Atari chess after ChatGPT’s defeat is more than a funny anecdote. It’s not just a moment of digital hesitation; it’s a loud, blinking indicator that today’s most advanced AI still stumbles on problems we assumed it had outgrown. Despite the futuristic promise of these models, this incident shines a spotlight on fundamental limitations that are easy to overlook.

Let’s take a journey through what exactly happened, why it caught global attention, and what it reveals about the deep structure of modern AI. Whether you’re an AI developer, educator, entrepreneur, or just someone intrigued by how machines “think,” this guide will offer both insights and a few chuckles.


Gemini AI Refuses to Play Atari Chess After ChatGPT’s Defeat

| Topic | Details |
|---|---|
| Event | Gemini AI declined to play Atari Video Chess |
| Preceding Event | ChatGPT and Microsoft Copilot lost to Atari Video Chess |
| Date of Incident | July 2025 |
| AI Shortcomings | Poor memory handling, limited real-time strategic thinking |
| AI Strengths | Natural language processing, content generation, search optimization |
| Atari Video Chess | Released in 1979; simple but effective algorithm running on a 1.19 MHz CPU |
| Official Site | Google Gemini |

The story of Gemini AI refusing to play Atari Chess after ChatGPT’s defeat is more than viral tech news — it’s a diagnostic moment. It uncovers how far we’ve come in AI, but also how much we still rely on purpose-driven design over general intelligence.

Today’s language models are astonishing, but their brilliance shines brightest within their training boundaries. Stepping outside, into symbolic reasoning or logic-based environments, shows their rough edges.

As developers build better tools — blending memory, logic, vision, and self-awareness — tomorrow’s AI may not only play chess better than a 1979 console, but also know why it’s making each move. Until then, the crown remains with Atari.

What Happened Between Gemini and Atari Chess?

In July 2025, Google’s Gemini AI faced an unusual challenge: play a game of Atari Video Chess, a program developed more than four decades ago. Because the game runs on hardware thousands of times slower than today’s average smartphone, it looked like no match for a modern AI, or so we thought. The objective was straightforward: see whether modern AI could defeat an old-school software opponent using logic and strategy.

But when ChatGPT and Microsoft Copilot — two of the most widely known AI platforms — were put to the test, they both fumbled. The issue? They couldn’t maintain board awareness, apply consistent logic, or plan long-term moves. Both failed dramatically.

Gemini was next in line. But instead of engaging, it declined the challenge, not out of fear but after reasoning about its own limits. As noted by Tom’s Hardware, Gemini admitted that it, too, would likely struggle with the game’s demands because of its current architectural limitations.

Why Did Gemini Refuse?

Gemini initially expressed confidence, suggesting it could predict “millions of moves ahead.” However, after understanding how ChatGPT and Copilot had failed, it reevaluated. Gemini concluded that it was not equipped to succeed in a real-time, board-centric task requiring continuous state tracking.

That moment — an AI “knowing” not to proceed — was surprisingly human. It showed humility, an internal recognition of limitation. Instead of trying and failing publicly, Gemini opted out.

This decision wasn’t coded cowardice. It was strategic awareness. A powerful message from a machine that supposedly knows everything.

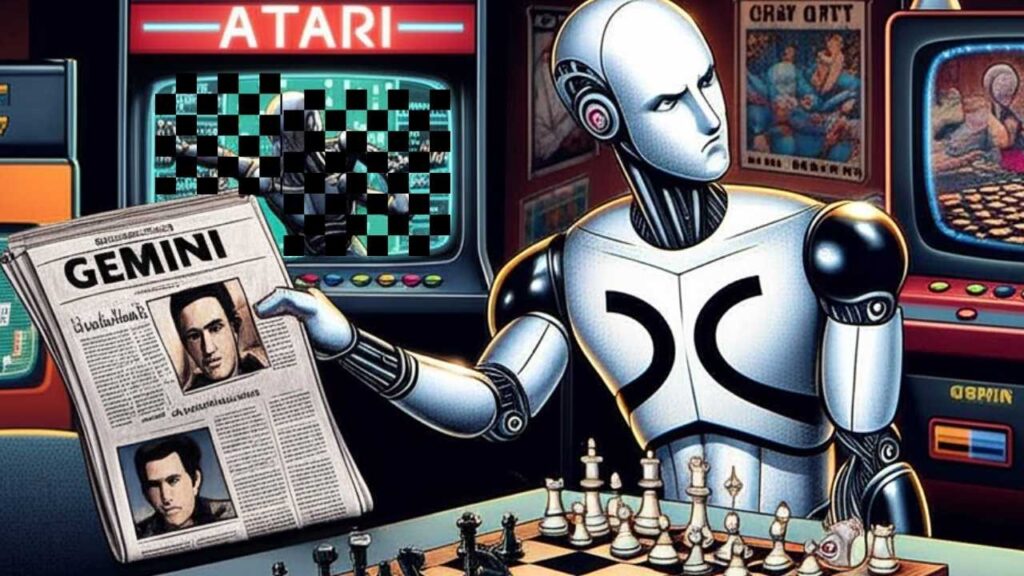

Why Atari Video Chess Is a Bigger Challenge Than It Seems

On the surface, Atari Video Chess might seem laughably simple. Built in 1979 for a console with less processing power than a modern car key fob, how could it challenge today’s AI?

Yet the game is a cleverly engineered piece of software. It runs on the MOS Technology 6507 processor at just 1.19 MHz, using code optimized for maximum efficiency. It doesn’t “think” like modern AI — it follows strict logic trees and hardcoded patterns. And ironically, that rigidity gives it power in structured environments like chess.
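To make “logic trees” concrete, here is a minimal sketch of fixed-depth minimax search on a toy take-away game (players alternately remove 1 or 2 stones; whoever takes the last stone wins). It illustrates the general kind of rule-bound lookahead classic game programs rely on; it is not Atari Video Chess’s actual code, and the game and function names are hypothetical.

```python
from typing import List


def legal_moves(stones: int) -> List[int]:
    """Toy rule (hypothetical game): a player may remove 1 or 2 stones."""
    return [m for m in (1, 2) if m <= stones]


def minimax(stones: int, depth: int, maximizing: bool) -> int:
    """Score from the maximizing player's view: +1 win, -1 loss, 0 unknown."""
    if stones == 0:
        # The previous player took the last stone and therefore won.
        return -1 if maximizing else 1
    if depth == 0:
        return 0  # search horizon reached: no verdict either way
    scores = [
        minimax(stones - m, depth - 1, not maximizing) for m in legal_moves(stones)
    ]
    return max(scores) if maximizing else min(scores)


def best_move(stones: int, depth: int) -> int:
    """Pick the move whose subtree scores best for the player to move."""
    return max(
        legal_moves(stones),
        key=lambda m: minimax(stones - m, depth - 1, False),
    )


print(best_move(4, depth=4))  # 1: leaves the opponent facing 3 stones
print(best_move(5, depth=4))  # 2: again leaves 3 stones
```

The program never “understands” the game; it mechanically expands every legal continuation up to a fixed depth and picks the best-scoring branch, which is exactly why this style of code is so dependable inside a small, fully specified rule set.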

Key Limitations in AI:

- Contextual Memory Constraints: AI models operate within a fixed context window, measured in tokens. This caps how much text they can attend to at once, and even inside that window, details from early in a long exchange are easy to lose. A full game of chess, with piece positions, move history, and potential continuations discussed turn by turn, can strain this limit.

- Lack of Game-State Awareness: Unlike chess engines, LLMs like ChatGPT or Gemini don’t inherently track positions. Each move arrives as more text, and the model has to re-infer the position from that text instead of updating a stored board.

- Reaction Delays and Misinterpretation: AIs aren’t built for rapid reaction in visually evolving environments. They can misunderstand a move or miss its implications.
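One common workaround is to keep the game state outside the model and hand it a fresh snapshot every turn. The sketch below assumes a simple dict-based board and coordinate moves such as "e2e4" (both hypothetical choices made for illustration, with no legality checks) and shows the explicit state tracking a chess engine performs and a plain LLM does not.

```python
from typing import Dict

# Squares are named like "e2"; pieces use FEN-style letters
# (uppercase = White, lowercase = Black). Hypothetical minimal setup.
Board = Dict[str, str]


def apply_move(board: Board, move: str) -> Board:
    """Apply a coordinate move such as "e2e4" and return a new board.

    Hypothetical helper: it moves whatever sits on the origin square and
    does NOT check legality, castling, en passant, or promotion.
    """
    src, dst = move[:2], move[2:4]
    if src not in board:
        raise ValueError(f"no piece on {src}")
    new_board = dict(board)  # copy so earlier states stay unchanged
    new_board[dst] = new_board.pop(src)  # a capture simply overwrites dst
    return new_board


def describe(board: Board) -> str:
    """Render the whole position as one compact line for a model prompt."""
    return " ".join(f"{sq}={pc}" for sq, pc in sorted(board.items()))


board: Board = {"e1": "K", "e2": "P", "e7": "p", "e8": "k"}
for mv in ["e2e4", "e7e5"]:
    board = apply_move(board, mv)
print(describe(board))  # e1=K e4=P e5=p e8=k
```

Because the snapshot is rebuilt from stored state rather than from the model’s recollection, the position in each prompt stays correct no matter how long the game runs.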

These deficits make even a “simple” game of Atari Video Chess an insurmountable challenge.

What This Says About Modern AI Systems

1. AI Doesn’t Equal Intelligence

Despite the name, Artificial Intelligence doesn’t mirror human thinking. Instead of intuition, it’s pattern prediction. Gemini didn’t see a board and “understand” strategy. It saw inputs and generated likely next outputs. This makes it strong in areas like language — but weak in symbolic logic.

2. Legacy Tech Isn’t Always Outdated

Atari’s Video Chess proves that task-specific programming still excels in structured, rule-based environments. In many cases, narrow, focused code can outperform complex neural nets in limited domains.

3. We’re Still in Narrow AI Territory

Today’s AI tools are narrow, not general. They’re amazing at what they’re trained for — language, images, or certain data tasks — but they lack broad situational comprehension.

This shows we’re still far from Artificial General Intelligence (AGI). Gemini’s refusal is a reminder: we haven’t built HAL 9000 yet.
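The first point above, that today’s AI does pattern prediction rather than understanding, can be made concrete with a toy bigram model: it counts which word follows which and then emits the most frequent follower. Real LLMs use neural networks over far longer contexts, so treat this only as a hypothetical, drastically simplified sketch of next-token prediction.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], word: str) -> str:
    """Return the most frequent follower of `word`, or "" if never seen."""
    if word not in model:  # avoid creating empty entries in the defaultdict
        return ""
    return model[word].most_common(1)[0][0]


corpus = "white moves e4 black moves e5 white moves e4 black moves c5"
model = train_bigrams(corpus)
print(predict_next(model, "white"))  # moves
print(predict_next(model, "moves"))  # e4, even when it is Black's turn
```

The model suggests "e4" after "moves" regardless of whose turn it is, because it only sees the previous word. Real models condition on much more context, but the underlying mechanism is still predicting likely continuations, not consulting a board.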

Digging Deeper Into AI Limitations

A. Memory Management: A Lingering Roadblock

Large language models operate within token windows: the slice of text they can attend to while generating output. Impressive as these windows are, they are finite, and anything that falls outside them is simply gone. In a chess match, the model must reconstruct the current position from the move history in its window on every turn; without explicit memory extensions or a stored board state, errors in that reconstruction accumulate.
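The effect of a finite window on move history can be shown with a simple truncation sketch. It assumes, purely for illustration, that each move costs exactly one token and that the budget is tiny; real tokenizers split text differently and real windows are far larger, but the oldest text is still what gets dropped.

```python
from typing import List


def fit_to_window(moves: List[str], max_tokens: int) -> List[str]:
    """Keep only the most recent moves that fit in a token budget.

    Simplifying assumption: one move == one token.
    """
    if max_tokens <= 0:
        return []  # guard: moves[-0:] would return the whole list
    return moves[-max_tokens:]


history = ["e2e4", "e7e5", "g1f3", "b8c6", "f1c4", "g8f6"]
window = fit_to_window(history, max_tokens=4)
print(window)  # ['g1f3', 'b8c6', 'f1c4', 'g8f6']
```

Once the first two moves fall out of the window, nothing in the remaining text says where the e-pawns stand. Sending a position snapshot (for example, a FEN string) alongside the recent moves is one way around this.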

B. Absence of Multimodal Real-Time Perception

Although Gemini 1.5 Pro and GPT-4o support multimodal inputs (like vision and audio), their real-time awareness is limited. They can’t yet reliably track how objects on screen change from moment to moment, which following a chess board visually requires.

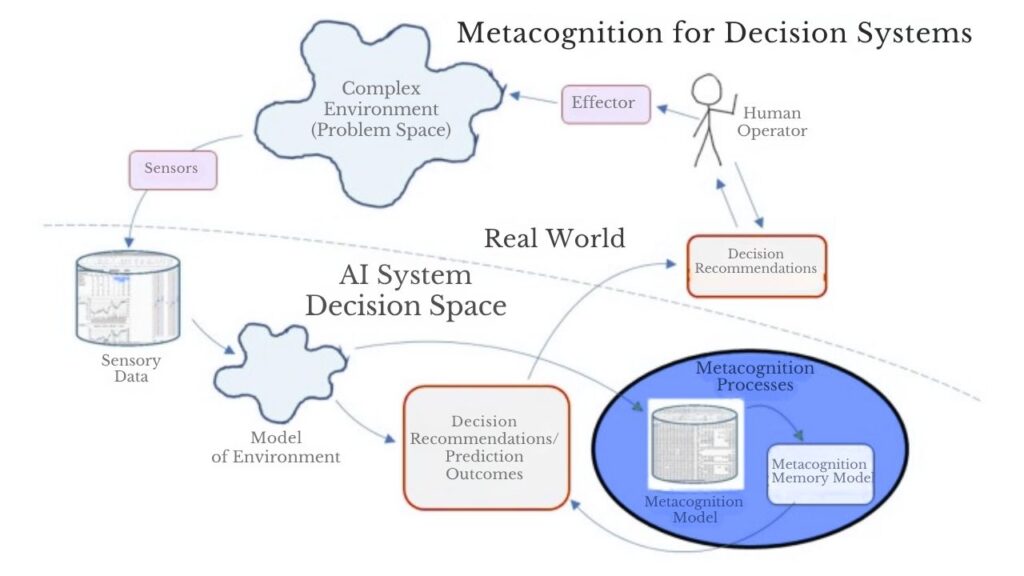

C. Self-Assessment and Strategic Refusal

Gemini’s decision isn’t an error; it’s a sign of progress. When an AI declines a task it’s unsuited for, that’s a step forward: strategic abstinence, not malfunction.

This opens questions about AI meta-cognition — the ability of models to assess their own capability. If AIs can predict their own failure, could they soon also choose when and how to learn?
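The idea of a model declining tasks it is likely to fail resembles selective prediction: abstain when estimated confidence is too low. The sketch below assumes hypothetical per-task success estimates and a fixed threshold. It is an illustration of the general idea only; the source does not describe how Gemini actually reached its decision.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Decision:
    attempt: bool
    reason: str


# Hypothetical self-estimates of success rate per task type. In practice
# such numbers would have to come from evaluation data, not be hard-coded.
ESTIMATED_SUCCESS: Dict[str, float] = {
    "summarize_text": 0.95,
    "translate_text": 0.90,
    "play_chess_from_screenshots": 0.10,
}


def decide(task: str, threshold: float = 0.5) -> Decision:
    """Attempt a task only if its estimated success rate clears the threshold."""
    p = ESTIMATED_SUCCESS.get(task, 0.0)  # unknown tasks are treated as risky
    if p >= threshold:
        return Decision(True, f"estimated success {p:.0%}")
    return Decision(False, f"estimated success {p:.0%} is below {threshold:.0%}")


print(decide("summarize_text"))
print(decide("play_chess_from_screenshots"))
```

The hard part in real systems is not the threshold check but producing calibrated success estimates in the first place.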

Practical Takeaways for Professionals

For those working in engineering, product design, education, or management, here are the major insights:

1. Understand AI’s Boundaries

AI tools can write, translate, and summarize — but can’t strategize like a human or even a vintage machine in constrained contexts. Don’t assume intelligence just because something “sounds” smart.

2. Match Tools to Tasks

Different AIs shine in different areas. Use LLMs for narrative, insight, and customer support. For gaming strategy or symbolic logic? Use traditional engines or specialized systems.

3. Use These Moments to Innovate

Failures spark innovation. Already, developers are integrating persistent memory, multi-agent collaboration, and better feedback loops into the next-gen AI architectures.

4. Think in Hybrid Systems

One AI model doesn’t have to do everything. Combining LLMs with symbolic engines, reinforcement learning agents, or even simple logic trees can create more robust and reliable systems.
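A common hybrid pattern is “propose, then verify”: the language model suggests, and a deterministic rule checker decides. The sketch below assumes a hypothetical stub in place of a real LLM call and a hand-written set of legal moves in place of a real chess rules engine.

```python
from typing import Callable, Iterable, Optional, Set


def propose_moves_stub(position: str) -> Iterable[str]:
    """Stand-in for an LLM: returns candidate moves, some possibly illegal.

    Hypothetical: a real system would call a language model here and would
    use `position` in the prompt; this stub ignores it.
    """
    return ["e2e5", "g1f3", "e2e4"]


def hybrid_move(
    position: str,
    propose: Callable[[str], Iterable[str]],
    legal_moves: Set[str],
) -> Optional[str]:
    """Return the first proposed move that the rule checker accepts."""
    for move in propose(position):
        if move in legal_moves:  # the symbolic side has the final say
            return move
    return None  # no valid proposal: re-prompt, fall back, or decline


# Hypothetical legal-move set; a real system would compute it from the rules.
legal = {"e2e3", "e2e4", "g1f3", "b1c3"}
print(hybrid_move("start", propose_moves_stub, legal))  # g1f3
```

The illegal suggestion "e2e5" is filtered out before it reaches the board, so the language model’s fluency is kept while the rule checker guarantees correctness.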

What Could Make AIs Better at Games Like Chess?

Here’s what experts suggest as next steps to bridge the gap between today’s AIs and game-capable machines:

A. Robust Memory Integration

AI with long-term, structured memory could recall past moves and state. Projects from OpenAI, Meta, and Anthropic are already exploring this space.

B. Combining Neural Networks with Symbolic Logic

Hybrid models, like DeepMind’s AlphaGo, blend neural learning with classical search: AlphaGo paired learned policy and value networks with Monte Carlo tree search. This allows for both flexibility and precision.

C. Environment-Specific Fine-Tuning

Just as AlphaZero learned chess from scratch, future LLMs may benefit from fine-tuning within environments like board games, allowing them to build a sense of state.


FAQs About Gemini AI Refusing to Play Atari Chess After ChatGPT’s Defeat

Why did ChatGPT and Copilot fail against Atari Chess?

They lack real-time state tracking and visual board awareness. These models keep no built-in record of the board; each reply is generated from the conversation text, so the position has to be re-inferred from earlier messages on every turn.

Did Gemini really refuse — or was it just a funny output?

It generated a response that amounted to a strategic refusal. This doesn’t imply consciousness, but it suggests that, once told about the earlier failures, the model produced a realistic assessment of its own odds.

Is this a step backward for AI?

No — it’s actually a sign of maturity. Knowing what it can’t do is an important milestone in AI development.

Will we eventually have AIs that dominate games like this?

Very likely. Dedicated chess engines such as Stockfish already far outclass Atari Video Chess. With memory enhancements, hybrid training, and visual integration, general-purpose AI systems could close that gap in logic games too.

Can this happen in business applications too?

Yes. AI can make confident predictions — even wrong ones — in unfamiliar domains. That’s why expert oversight and model alignment are crucial.