Google’s AI bug hunter, Big Sleep, recently made waves in the cybersecurity world after autonomously discovering and reporting 20 security vulnerabilities across popular open-source software projects. The result shows the growing power of artificial intelligence (AI) not only to improve software development but also to strengthen our digital defenses.

Big Sleep is the product of advanced research collaboration between Google’s AI division DeepMind and its elite hacker team, Project Zero. What makes Big Sleep stand apart is its ability to not just identify potential vulnerabilities but to independently reproduce and verify them without requiring human intervention—though expert oversight remains crucial to confirm and report findings responsibly.

Google’s AI Bug Hunter Just Uncovered 20 Shocking Security Flaws

| Topic | Details |
|---|---|
| AI Bug Hunter Name | Big Sleep |
| Developers | Google DeepMind & Project Zero |
| Discovered Vulnerabilities | 20 significant flaws in open-source software such as FFmpeg, ImageMagick, SQLite, etc. |
| Distinctive Feature | Autonomous vulnerability detection and reproduction without human assistance |
| Real-World Impact | Prevented imminent zero-day exploit attacks |
| Challenges | Handling false positives known as “AI hallucinations” |
| Industry Significance | Accelerates security testing and opens new approaches in vulnerability research |
| Official Reference | Google Project Zero blog |

Google’s AI bug hunter Big Sleep represents a landmark achievement: it autonomously discovered 20 vulnerabilities in prominent open-source projects, including FFmpeg, ImageMagick, and SQLite. This milestone highlights how artificial intelligence is transforming cybersecurity by enabling faster, deeper, and more scalable vulnerability detection.

While challenges such as false positives and the need for human oversight remain, the partnership between AI and expert researchers is reshaping how software is secured. For cybersecurity professionals and organizations worldwide, embracing AI-powered tooling like Big Sleep will be vital to defend against ever-more sophisticated digital threats.

The convergence of human expertise and artificial intelligence opens new horizons in protecting our increasingly digital world, making this an exciting area to watch and engage with for years to come.

What Is an AI Bug Hunter and Why Is It Important?

Digital software is at the heart of modern life—from social media and online banking to healthcare systems and transportation networks. Unfortunately, the complexity and scale of contemporary software projects make them vulnerable to security flaws that hackers can exploit.

An AI bug hunter like Big Sleep uses machine learning models trained on vast amounts of code and cybersecurity knowledge to autonomously scan, analyze, and detect vulnerabilities rapidly. By automating this process, organizations can stay ahead of hackers who constantly seek new weaknesses to exploit.

Think of it this way: finding security bugs in millions of lines of code is like finding a needle in a gigantic haystack. Human security researchers are experts, but they can get overwhelmed by the volume and complexity. Big Sleep acts like a supercharged magnifying glass, scanning through thousands of haystacks simultaneously and highlighting those needles faster and more accurately than manual reviews alone.

The Significance for Open Source Software

Open-source projects like FFmpeg (used in video processing), ImageMagick (image editing suite), and SQLite (database software) form foundational technology on which countless applications depend. This widespread usage makes vulnerabilities in such software especially dangerous: a bug in one open-source library could affect thousands of applications and millions of users.

By finding 20 vulnerabilities in such projects, Google’s Big Sleep showcased how AI can protect widespread digital infrastructure that businesses and consumers rely on daily.
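
The ripple effect described above can be made concrete with a small dependency-graph walk. The sketch below is only an illustration: the package names (`media-lib`, `video-app`, `photo-app`, `cms`) are hypothetical, and the graph is hand-written rather than taken from any real package index.

```python
from collections import deque


def affected_packages(dependencies, vulnerable):
    """Return every package that depends, directly or indirectly, on `vulnerable`.

    `dependencies` maps a package name to the list of packages it depends on.
    """
    # Invert the graph: for each package, record who depends on it.
    dependents = {}
    for package, deps in dependencies.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(package)

    # Breadth-first walk upward from the vulnerable library.
    affected = set()
    queue = deque([vulnerable])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


# Hypothetical dependency graph, for illustration only.
example_graph = {
    "media-lib": ["ffmpeg"],
    "video-app": ["media-lib"],
    "photo-app": ["imagemagick"],
    "cms": ["video-app", "photo-app"],
}
```

Calling `affected_packages(example_graph, "ffmpeg")` returns the three packages that sit above FFmpeg in this toy graph, even though only one of them imports it directly.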

How Does Big Sleep Work? A Closer Look

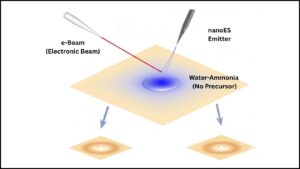

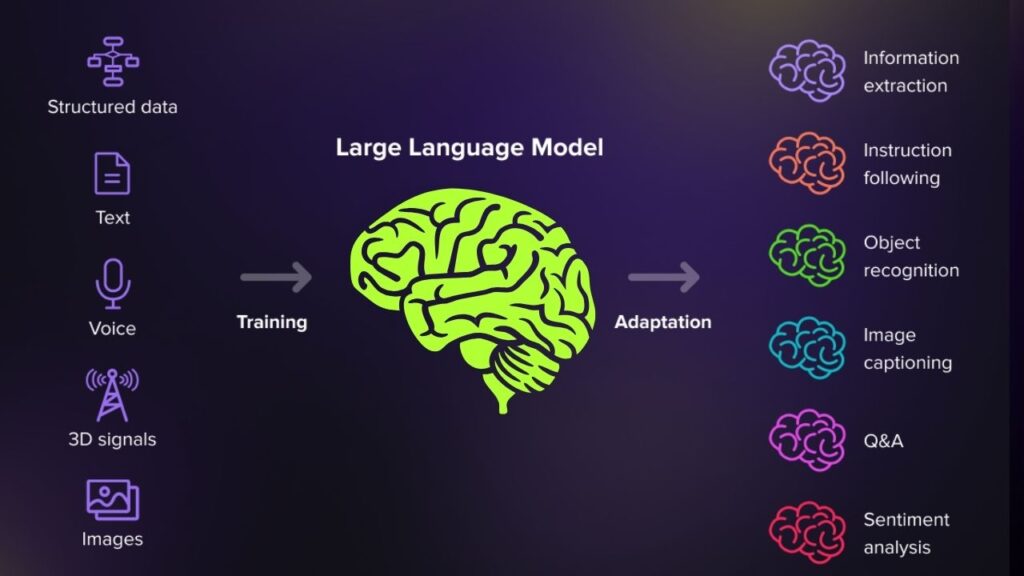

Big Sleep is powered by large language models (LLMs), AI models that excel at understanding and generating text. Rather than holding a conversation like a typical chatbot, Big Sleep applies these models to source code, enabling it to “read” programs much as a seasoned developer would.

Here’s the process broken down:

- Code Ingestion: Big Sleep automatically scans large code repositories of open-source projects.

- Vulnerability Hunting: It analyzes code patterns and structures to detect risky operations or inconsistencies resembling known security issues.

- Exploit Reproduction: When a possible problem is detected, the AI attempts to reproduce the vulnerability by simulating an exploit. This step confirms whether the flaw could be used maliciously.

- Report Generation: Once confirmed, Big Sleep drafts detailed technical vulnerability reports.

- Human Expert Review: Google security researchers review and validate these reports for accuracy and prioritize the vulnerabilities for disclosure and patching.
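
The five stages above can be sketched as a small pipeline. This is not Big Sleep's implementation, which Google has not published in this form. It is a toy under stated assumptions: a naive substring check stands in for the model, and `try_exploit` and `human_review` are hypothetical callbacks that the caller supplies.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    file: str
    line: int
    description: str
    reproduced: bool = False


def ingest(repo):
    """Code ingestion: yield (file, line number, text) for every source line.

    `repo` is a plain dict of path -> source text, standing in for a repository.
    """
    for path, source in repo.items():
        for number, text in enumerate(source.splitlines(), start=1):
            yield path, number, text


def hunt(lines):
    """Vulnerability hunting: a toy substring check stands in for the model."""
    risky_calls = ("strcpy(", "gets(", "sprintf(")
    findings = []
    for path, number, text in lines:
        for call in risky_calls:
            if call in text:
                findings.append(Finding(path, number, f"unbounded {call[:-1]} call"))
    return findings


def draft_report(finding):
    """Report generation: one line per confirmed finding."""
    return f"{finding.file}:{finding.line}: {finding.description} (reproduced)"


def run_pipeline(repo, try_exploit, human_review):
    """Run all five stages and return only the reports a human approved."""
    approved = []
    for finding in hunt(ingest(repo)):
        # Exploit reproduction: keep only findings the callback can trigger.
        finding.reproduced = try_exploit(finding)
        if not finding.reproduced:
            continue
        report = draft_report(finding)
        # Human expert review: the final gate before disclosure.
        if human_review(report):
            approved.append(report)
    return approved
```

Note that the substring check would also flag a safe call such as `fgets(`, which is exactly the kind of false positive the reproduction and review stages exist to filter out.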

A Real-World Success Story: Stopping a Zero-Day Attack

Among Big Sleep’s findings, one vulnerability in SQLite, tracked as CVE-2025-6965, stood out. According to Google, the bug was already known to threat actors and was at risk of imminent exploitation. Big Sleep’s detection enabled timely intervention, so the flaw could be fixed before attackers used it, heading off potentially large-scale data breaches.

This example marks a milestone: AI tools defending us by catching sophisticated attacks before they can be launched.

Why AI Bug Hunting Is Revolutionizing Cybersecurity

Faster Analysis at Unprecedented Scale

Traditional bug hunting demands painstaking code reviews, vulnerability scanning, and manual testing. As software grows in size and complexity, these methods become slower and less practical. AI algorithms like Big Sleep can analyze entire codebases within minutes or hours—tasks that might take human teams months.

Smarter Detection Through AI Learning

Big Sleep learns from previous software vulnerabilities, adapting to detect never-before-seen flaws. Unlike rule-based scanners, AI can generalize and detect subtle patterns that usually fool traditional tools, amplifying security coverage.

Cost Reduction and Resource Optimization

AI automation offloads routine vulnerability detection from security engineers, letting them concentrate on more complex threat hunting and incident response. This results in better utilization of scarce cybersecurity expertise and budget.

Improving Accuracy by Reducing False Alarms

False positives plague traditional scanners, overwhelming developers with too many faulty alerts. Although AI tools are not perfect and sometimes produce “hallucinations,” ongoing improvements and human oversight help maintain accuracy and reduce unnecessary noise.

Challenges and Ethical Considerations

While the potential of AI bug hunters is immense, several challenges deserve attention:

- False Positives and Hallucinations: AI may occasionally flag harmless code as risky, demanding human analysts’ intervention to sort real threats from mirages.

- Dependence on Human Expertise: AI cannot fully replace expert judgment; it complements but doesn’t substitute human experience in threat assessment.

- Privacy and Security Risks: AI scanning involves analyzing codebases, potentially exposing sensitive information. Responsible deployment must include privacy safeguards and strict data handling policies.

- Potential Misuse: AI tools could be weaponized by malicious actors themselves, raising ethical issues requiring industry governance and regulation.

Google and other AI leaders emphasize transparency and responsible AI practices to meet these challenges head-on.

Practical Guide: How Organizations Can Leverage AI Bug Hunters

Step 1: Choose the Right AI Security Tools

Research and trial AI-powered vulnerability scanners that have proven their effectiveness. Google’s Big Sleep and others like RunSybil and XBOW are examples worth exploring.

Step 2: Integrate AI into Security Processes

Incorporate AI reports into your routine security audits, continuous integration pipelines, and penetration testing workflows. Use AI as a valuable assistant to manual reviews.
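
One way to wire AI findings into a continuous integration pipeline is a small gate script that fails the build when confirmed, high-severity findings are present. The sketch below assumes a hypothetical JSON report with a top-level `findings` list whose entries carry `id`, `title`, `severity`, and `status` fields. Real tools use their own formats, so treat every field name here as a placeholder.

```python
import json

# Severities that should stop a build; adjust to your own policy.
BLOCKING_SEVERITIES = {"critical", "high"}


def blocking_findings(report_json):
    """Return findings that are both confirmed and severe enough to block."""
    findings = json.loads(report_json)["findings"]
    return [
        f
        for f in findings
        if f["status"] == "confirmed" and f["severity"] in BLOCKING_SEVERITIES
    ]


def ci_exit_code(report_json):
    """Print each blocker and return 1 if any exist, otherwise 0."""
    blockers = blocking_findings(report_json)
    for f in blockers:
        print(f"BLOCKING {f['id']}: {f['title']} ({f['severity']})")
    return 1 if blockers else 0
```

In a pipeline step, the return value of `ci_exit_code` would be passed to `sys.exit` after reading the report file. Unverified findings are deliberately not blocking, so that an AI false alarm cannot stop a release before a human has looked at it.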

Step 3: Train Teams to Interpret AI Findings

Equip your security analysts and developers to assess AI-generated reports effectively. Learn to quickly identify false positives, validate real vulnerabilities, and prioritize remediation based on risk.
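
Prioritizing by risk can start as simply as the sketch below. The severity weights and the doubling for internet-facing components are illustrative assumptions, not an industry standard. Teams that already use a scoring scheme such as CVSS would substitute it for `risk_score`.

```python
from dataclasses import dataclass

# Illustrative weights only; replace with your organization's scoring scheme.
SEVERITY_WEIGHT = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class TriagedFinding:
    title: str
    severity: str
    reproduced: bool       # did an analyst confirm the issue is real?
    internet_facing: bool  # is the affected component exposed externally?


def risk_score(finding):
    """Weight severity, doubling it when the component is internet-facing."""
    score = SEVERITY_WEIGHT[finding.severity]
    if finding.internet_facing:
        score *= 2
    return score


def remediation_queue(findings):
    """Drop unconfirmed findings, then order the rest from highest risk down."""
    validated = [f for f in findings if f.reproduced]
    return sorted(validated, key=risk_score, reverse=True)
```

Filtering on `reproduced` first keeps false positives out of the queue entirely, so remediation effort goes only to findings an analyst has validated.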

Step 4: Provide Feedback to Improve AI Models

Work with AI tool developers by sharing feedback and tailored data to reduce inaccuracies and adapt the AI to your unique software environment.
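
Useful feedback is usually quantitative. As a minimal sketch, the function below turns analyst verdicts into a per-category precision figure (the share of flagged issues that turned out to be real). The category names and the `(category, is_real)` tuple shape are assumptions made for illustration.

```python
from collections import Counter


def precision_by_category(verdicts):
    """Compute the fraction of true positives for each finding category.

    `verdicts` is an iterable of (category, is_real) pairs recorded by analysts.
    """
    total = Counter()
    true_positives = Counter()
    for category, is_real in verdicts:
        total[category] += 1
        if is_real:
            true_positives[category] += 1
    return {category: true_positives[category] / total[category] for category in total}
```

A category with low precision is a good candidate to report back to the tool's developers, along with examples of the harmless code it keeps flagging.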

Step 5: Stay Informed on the Latest AI and Security Trends

Follow key sources like Google Project Zero, MITRE’s CVE database, cybersecurity conferences, and publications from OWASP and NIST for ongoing updates and best practices.

FAQs About Google’s AI Bug Hunter

Q1: What types of software does Big Sleep target?

Big Sleep focuses primarily on open-source software, but the approach can extend to proprietary codebases with proper access.

Q2: How fast can Big Sleep scan a codebase?

Big Sleep can scan and analyze large code repositories in hours or even minutes, depending on size and complexity.

Q3: Will AI bug hunters replace human security experts?

No. AI enhances human capabilities, but expert oversight remains essential to validate and contextualize findings.

Q4: Are the vulnerabilities found publicly disclosed?

Yes, after proper patches are developed and deployed. Responsible disclosure is standard to prevent exploitation.

Q5: How secure is AI scanning? Does it expose sensitive code?

Security teams must apply strict protocols and leverage secure environments to protect code privacy during AI analysis.

Exploring Further: The Future of AI in Cybersecurity

As AI models like Big Sleep continue to evolve, we can expect even more sophisticated capabilities:

- Predictive Vulnerability Assessment: Using historical data to forecast where bugs might arise before code is even written.

- Automated Patch Generation: AI could assist not only in finding but also in fixing vulnerabilities rapidly.

- Collaboration Between Human and Machine: Augmented intelligence will become the norm, combining complementary strengths.

- Cross-Industry Security Enhancements: Broader application across IoT, healthcare, finance, and government to safeguard critical infrastructures.

The journey toward fully harnessing AI’s potential in cybersecurity is ongoing, but Big Sleep’s success is a landmark step signaling a more secure digital future.