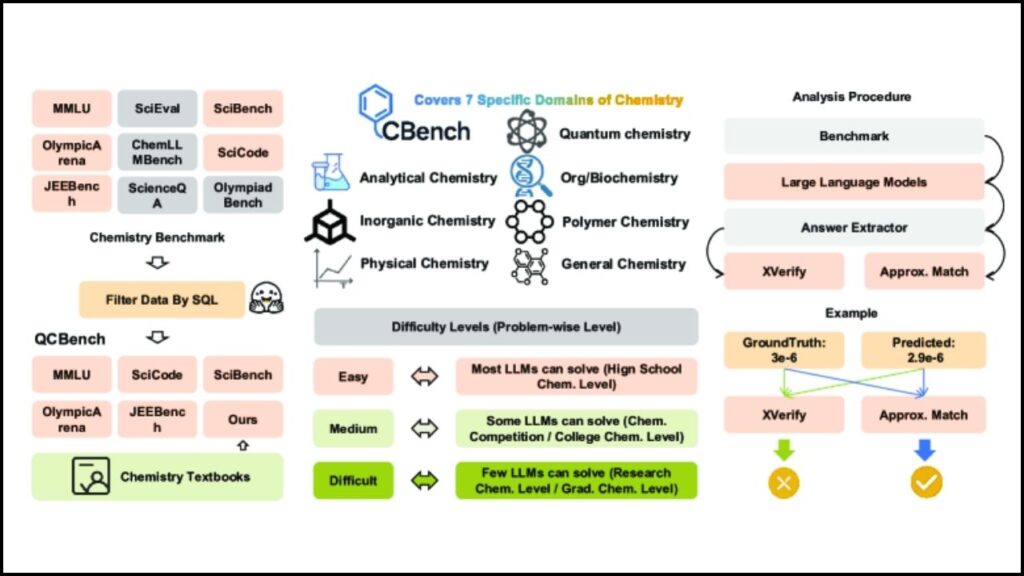

One of the toughest challenges for today’s advanced artificial intelligence (AI) models is step-by-step numerical and quantitative reasoning, especially in highly technical fields like chemistry. To test how well AI handles such tasks, researchers recently introduced QCBench, a benchmark that evaluates whether a model can carry out complex, precise calculations stepwise, much as a human chemist would.

Understanding this breakthrough is important not only for AI researchers and chemists but for everyone curious about how AI is growing smarter in specialized scientific fields. This article will guide you through what QCBench is, why it matters, and the practical insights it reveals about today’s AI models.

What is QCBench?

QCBench stands for Quantitative Chemistry Benchmark. It is a carefully designed test suite comprising 350 chemistry problems spread over seven major subfields of chemistry:

- Analytical Chemistry

- Bio/Organic Chemistry

- General Chemistry

- Inorganic Chemistry

- Physical Chemistry

- Polymer Chemistry

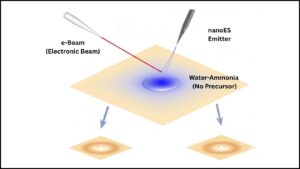

- Quantum Chemistry

These problems vary from basic to intermediate to expert levels, making the test a deep dive into how well AI models handle real-world, step-by-step calculations rather than memorizing facts.

Why Step-by-Step Reasoning Matters in Chemistry AI

In chemistry, many problems revolve around formulas, constants, equations, and multiple computational steps — like predicting reaction outcomes, calculating equilibrium concentrations, or estimating energy changes. Solving such problems requires logical, stepwise reasoning and mathematical precision.
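To make "multiple computational steps" concrete, here is a minimal sketch of one such calculation: the equilibrium hydrogen-ion concentration and pH of a weak monoprotic acid. The acid (acetic acid, with Ka taken as roughly 1.8e-5) and the 0.10 mol/L starting concentration are assumptions chosen for illustration; this is not a problem taken from QCBench.

```python
import math
from typing import Tuple

def weak_acid_ph(ka: float, c0: float) -> Tuple[float, float]:
    """Return ([H+], pH) for a weak monoprotic acid HA at initial concentration c0 (mol/L).

    Step 1: write the equilibrium expression  Ka = x^2 / (c0 - x),  x = [H+].
    Step 2: rearrange to the quadratic        x^2 + Ka*x - Ka*c0 = 0.
    Step 3: take the positive root and convert to pH.
    Water autoionization is neglected (a simplifying assumption).
    """
    x = (-ka + math.sqrt(ka * ka + 4.0 * ka * c0)) / 2.0
    return x, -math.log10(x)

# Illustrative inputs (assumed values, not from the benchmark)
h, ph = weak_acid_ph(ka=1.8e-5, c0=0.10)
print(f"[H+] = {h:.3e} mol/L, pH = {ph:.2f}")
```

An error in any single step, such as dropping the `Ka*x` term when it is not negligible or taking the wrong root, changes the final number, which is the kind of slip a stepwise benchmark is meant to catch.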

Currently, many AI language models — while fluent at natural language — struggle when asked to perform multi-step calculations accurately. The QCBench test aims to highlight this gap, providing a structured way to measure the true quantitative reasoning capabilities of AI models.

New QCBench Test Reveals Which AI Models Truly Excel

| Aspect | Details |
|---|---|
| Number of Problems | 350 |
| Chemistry Subfields Tested | Analytical, Bio/Organic, General, Inorganic, Physical, Polymer, Quantum |
| Difficulty Levels | Basic, Intermediate, Expert |
| AI Models Evaluated | 19 Large Language Models (LLMs), including Grok-3 and DeepSeek-R1 |
| Top Performing Models | Grok-3 (overall best performer), DeepSeek-R1 (best open-source) |
| Performance Trend | Accuracy declines as problem complexity increases |
| Challenging Fields | Analytical and Polymer Chemistry |
| Verification Gap | Strict answer checks sometimes penalize correct but non-standard solutions |
| Official Study Link | QCBench on arXiv |

QCBench represents a major step forward in understanding and enhancing AI models’ step-by-step quantitative reasoning in chemistry. Unlike surface-level language tests, this benchmark scrutinizes AI’s true computational skills across real-world chemical problems. The results underline that while AI models are improving, only a select few, like Grok-3 and DeepSeek-R1, consistently excel at complex chemistry reasoning.

For anyone working at the intersection of AI and chemistry — from researchers to industrial professionals — QCBench provides a powerful tool to evaluate and push the boundaries of AI capability, moving toward more reliable and scientifically accurate intelligent systems.

How QCBench Was Built

The research team behind QCBench includes experts from the Shanghai Artificial Intelligence Laboratory and several top Chinese universities. They carefully curated problems from existing chemistry benchmarks and supplemented them with new problems crafted by chemistry Ph.D. students and senior experts. This dual approach ensured both novelty and rigor, making QCBench a trustworthy standard for AI evaluation.

Each problem in QCBench demands stepwise numerical calculations tied tightly to chemical principles — such as applying Gibbs free energy formulas to predict reaction spontaneity or computing polymer lengths based on reactant concentrations.
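As a rough illustration of the Gibbs free energy case mentioned above, the sketch below applies ΔG = ΔH − TΔS at two temperatures. The enthalpy and entropy figures are approximate textbook values for ammonia synthesis (N2 + 3 H2 → 2 NH3) and are assumed here for illustration only; the function name is hypothetical and nothing in this block comes from the QCBench problem set.

```python
def gibbs_free_energy(delta_h_kj: float, delta_s_j_per_k: float, temp_k: float) -> float:
    """Return dG in kJ/mol from dG = dH - T*dS.

    dH is in kJ/mol but dS is in J/(mol*K), so dS is converted to kJ first.
    Forgetting this unit conversion is a common source of wrong answers.
    """
    return delta_h_kj - temp_k * (delta_s_j_per_k / 1000.0)

# Approximate textbook values, assumed for illustration
dH = -92.2    # kJ/mol
dS = -198.7   # J/(mol*K)

for T in (298.15, 500.0):
    dG = gibbs_free_energy(dH, dS, T)
    verdict = "spontaneous" if dG < 0 else "non-spontaneous"
    print(f"T = {T:6.2f} K: dG = {dG:7.2f} kJ/mol ({verdict})")
```

With these inputs the sign of ΔG flips between the two temperatures, so a model has to carry the units and the temperature dependence through every step to reach the right conclusion.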

What QCBench Reveals About AI Models

When applied to 19 leading large language models, QCBench demonstrated several important insights:

1. Fluency vs. Accuracy Gap

Many models can generate convincing, fluent explanations, but their calculation accuracy falls short. Even sophisticated LLMs sometimes produce incorrect final answers while appearing to have fully understood the problem.

2. Complexity Affects Performance

Performance consistently declines as the problems become harder, indicating that AI struggles particularly with expert-level tasks that require deeper reasoning and more detailed math.

3. Size Isn’t Everything

Larger models with more parameters are not automatically better at these quantitative reasoning tasks. For instance, Grok-3 outperformed bigger models like Grok-4, which suggests that precise, efficient reasoning matters more than scale alone.

4. Specialized Strengths

Some models show strengths in particular chemistry fields or levels of difficulty. For example, Quantum Chemistry tasks, though complex, received relatively higher accuracy rates than other subfields.

5. Verification Gap Mystery

Interestingly, the strict evaluation protocols sometimes penalized correct but differently formatted answers, exposing a “verification gap.” This means evaluation tools themselves must evolve to fairly assess diverse reasoning methods.
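One way to narrow such a gap is to compare answers numerically rather than as strings. The sketch below is a hypothetical checker, not QCBench's actual verification protocol: it pulls the first number out of a free-form answer, normalizes "× 10^n" notation, and accepts values within a relative tolerance. The function names and the 1% tolerance are assumptions made for illustration.

```python
import math
import re
from typing import Optional

# Matches plain decimals and e-notation, e.g. "0.000032", "-4", "3.2e-5"
_NUMBER = re.compile(r"[-+]?\d*\.?\d+(?:[eE][-+]?\d+)?")
# Matches "× 10^-5", "x 10^(-5)", "* 10^5" so they can be rewritten as "e-5", "e5"
_TIMES_TEN = re.compile(r"\s*(?:×|x|\*)\s*10\s*\^\s*\(?([-+]?\d+)\)?")

def parse_number(answer: str) -> Optional[float]:
    """Extract the first numeric value from a free-form answer, or None if absent."""
    normalized = _TIMES_TEN.sub(r"e\1", answer)
    match = _NUMBER.search(normalized)
    return float(match.group()) if match else None

def is_correct(predicted: str, reference: float, rel_tol: float = 0.01) -> bool:
    """Accept the answer if its numeric value is within rel_tol of the reference."""
    value = parse_number(predicted)
    return value is not None and math.isclose(value, reference, rel_tol=rel_tol)

print(is_correct("3.2 × 10^-5 mol/L", 3.2e-5))  # same value, different notation
print(is_correct("0.000032", 3.2e-5))           # plain decimal form
print(is_correct("3.2e-4", 3.2e-5))             # off by a factor of ten
```

This sketch only looks at the first number and ignores units, so an answer like "2 steps give 5.0 kJ" would be misread; a production checker would also need unit handling and stricter answer extraction.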

Practical Advice for AI Researchers and Chemists

If you are building or choosing AI tools for chemistry applications, here are some takeaways:

- Look beyond fluency: Ensure your AI’s numerical and logical accuracy is validated through domain-specific tests, like QCBench.

- Don’t rely only on model size: Efficiency and training focused on quantitative reasoning matter as much, if not more.

- Use specialized models for specific fields: Some AI models perform better in physical or quantum chemistry, so selecting a model aligned with your needs improves outcomes.

- Stay updated with benchmarks: Tools like QCBench help track AI progress and can inform model selection and improvement strategies.

- Combine AI and human expertise: AI can assist but shouldn’t replace expert verification, especially for complex scientific computations.

A Stepwise Guide to Understanding AI Chemistry Reasoning with QCBench

Step 1: Understand the Problem Domain

Recognize that chemistry problems often require sequential computation steps interlaced with chemical theory.

Step 2: Prepare AI Models with Domain Knowledge

Fine-tuning or training models specifically in chemistry enhances their ability to handle formulas and constants correctly.

Step 3: Test Using Benchmarks Like QCBench

Use QCBench to evaluate AI models on a wide array of real-world chemistry problems of varying difficulty.

Step 4: Analyze Performance on Subfields and Difficulty Levels

Identify which types of problems or chemistry areas your AI model struggles with for targeted improvement.
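A breakdown like this can be produced with a few lines of code once each answer has been graded. The sketch below assumes a simple record format (`subfield`, `difficulty`, `correct`) that is hypothetical and not QCBench's actual output schema, and the rows are made-up placeholders rather than real benchmark results.

```python
from collections import defaultdict

def accuracy_by(results, key):
    """Group graded results by `key` and return {group: fraction correct}."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for row in results:
        totals[row[key]] += 1
        correct[row[key]] += int(row["correct"])
    return {group: correct[group] / totals[group] for group in totals}

# Placeholder rows for illustration only; NOT real QCBench results
results = [
    {"subfield": "Analytical", "difficulty": "Basic", "correct": True},
    {"subfield": "Analytical", "difficulty": "Expert", "correct": False},
    {"subfield": "Quantum", "difficulty": "Basic", "correct": True},
    {"subfield": "Quantum", "difficulty": "Expert", "correct": True},
    {"subfield": "Polymer", "difficulty": "Intermediate", "correct": False},
    {"subfield": "Polymer", "difficulty": "Expert", "correct": False},
]

by_subfield = accuracy_by(results, "subfield")
by_difficulty = accuracy_by(results, "difficulty")

for name, table in (("subfield", by_subfield), ("difficulty", by_difficulty)):
    for group, acc in sorted(table.items(), key=lambda item: item[1]):
        print(f"{name:10s} {group:12s} {acc:.0%}")
```

Sorting each table from lowest to highest accuracy puts the weakest subfields and difficulty levels at the top, which is where targeted improvement would start.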

Step 5: Address the Verification Gap

Design evaluation methods that accept different but correct solution formats, improving fairness and trust in AI assessments.

Step 6: Iterate to Improve

Use feedback from benchmarks to refine your AI’s reasoning, focusing on accuracy and stepwise logic.

FAQs About QCBench

What makes QCBench different from other AI test frameworks?

QCBench focuses specifically on quantitative, step-by-step problem-solving in chemistry, unlike many tests that emphasize language fluency or memorized facts. It covers seven distinct chemical subfields, providing a thorough and domain-oriented evaluation.

Which AI models performed best on QCBench?

Grok-3 emerged as the strongest performer overall, excelling especially on intermediate and expert-level problems, while the open-source DeepSeek-R1 also showed impressive capabilities.

Why do AI models struggle with complex chemistry problems?

These problems require several precise calculations and the correct application of scientific formulas, tasks that many AI models, trained mostly on language data, are not yet well equipped to handle.

How can AI researchers improve their models’ performance?

Focusing on domain-specific fine-tuning using datasets like QCBench, encouraging structured stepwise reasoning, and evolving evaluation criteria to handle diverse valid answers can enhance model accuracy.