Open-source AI models have revolutionized the way artificial intelligence is developed and shared across the globe. But what many don’t realize is that these openly available models can consume far more computing power than their closed-source counterparts, sometimes several times as much for the same task. This often-overlooked difference in resource use has big implications for costs, energy consumption, and the future of sustainable AI development.

In this article, we’ll explore why open-source AI models are more resource-intensive, what that means for energy use and costs, and practical steps being taken to address this challenge. Whether you’re a tech professional, AI enthusiast, or just curious about the growing AI landscape, you’ll find this breakdown clear, insightful, and actionable.


Open-Source AI Models Are Secretly Hogging Way More Computing Power

| Aspect | Details |
|---|---|
| Computing Power Usage | Open-source AI models can use 1.5 to 10 times more tokens (and therefore more compute) than closed models for the same task |
| Energy Consumption Estimate | Typical AI queries (like ChatGPT) use ~0.3 watt-hours, though open models may use more |
| Environmental Impact | AI power demand is expected to rise sharply, contributing significantly to global emissions |
| Industry Innovation | New smaller, efficient models and hardware aim to reduce AI’s energy footprint |
| Practical Advice | Use optimized smaller models, improve transparency, and adopt energy-saving AI design |
| Reference | For more info, visit IBM Think – AI and Energy Efficiency |

Open-source AI models have empowered a new wave of innovation by making AI technology accessible to all. But the hidden cost is that these models often consume much more computing power and energy than their closed-source counterparts. This has important implications for operational costs, environmental sustainability, and the broader adoption of AI technologies.

The good news is that the AI industry is already tackling this energy challenge head-on through smarter model design, hardware innovation, and improved transparency. By choosing the right AI tools and optimizing their use, businesses and developers can enjoy the benefits of open-source AI without breaking the bank or harming the environment.

As AI continues to evolve, balancing openness with efficiency will be key to ensuring a sustainable and equitable technological future.

Understanding Open-Source AI and Computing Power Usage

Open-source AI models are built with transparency and accessibility in mind. Anyone can view, modify, and use these models freely, driving innovation and collaboration worldwide. However, a recent study found a downside: open-weight models often need far more computation than closed models to complete the same tasks.

When tested on similar questions and tasks, open-source models sometimes use up to 10 times more tokens (units of text processed) to arrive at an answer than closed-source models such as Google’s Gemini (formerly Bard) or OpenAI’s ChatGPT. Because the computing power a model uses scales roughly with the number of tokens it processes, this difference means open models quietly “hog” more server resources.
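To make the link between tokens and compute concrete, here is a minimal sketch using a common rule of thumb: a transformer's forward pass costs roughly 2 floating-point operations (FLOPs) per model parameter per token. This approximation is an assumption, not a figure from the study; it ignores attention overhead, which grows with context length. The 70-billion-parameter size and the token counts are hypothetical.

```python
def inference_flops(num_params: float, num_tokens: int) -> float:
    """Approximate forward-pass FLOPs: about 2 FLOPs per parameter per token.

    Rule-of-thumb estimate only; it ignores attention cost, which grows
    with context length.
    """
    return 2.0 * num_params * num_tokens


# Hypothetical comparison: the same 70B-parameter model answers one question
# concisely (300 tokens) or verbosely (3,000 tokens).
params = 70e9
concise = inference_flops(params, 300)
verbose = inference_flops(params, 3_000)

print(f"concise: {concise:.2e} FLOPs")
print(f"verbose: {verbose:.2e} FLOPs")
print(f"ratio:   {verbose / concise:.1f}x")
```

Under this approximation, compute grows linearly with token count, so a model that produces 10 times more tokens does roughly 10 times more work for the same answer.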

Why does this happen? Open models tend to generate longer, sometimes redundant reasoning steps, especially for simple queries. This is likely partly because they are less optimized and lack the extensive fine-tuning that closed systems receive from companies with massive R&D budgets. While closed AI companies often focus on efficiency to reduce costs, open models prioritize accessibility and adaptability, which can inadvertently reduce computational efficiency.

How Much Energy Does AI Actually Use?

To put things in perspective, typical queries to large AI models consume about 0.3 watt-hours of electricity, roughly the energy a small LED bulb uses in a few minutes. But more complex queries requiring extensive reasoning can push this to between 2.5 and 40 watt-hours. Open-source AI, with its higher token usage, may fall toward the upper end of this scale.
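A quick back-of-the-envelope calculation shows how per-query figures add up. This sketch assumes, as a simplification, that energy scales linearly with token count, and applies the 1.5x and 10x token multipliers from the table above to the ~0.3 Wh baseline. The one-million-queries-per-day volume is a hypothetical workload.

```python
BASELINE_WH_PER_QUERY = 0.3  # typical per-query figure cited above


def daily_energy_kwh(queries_per_day: int, wh_per_query: float,
                     token_multiplier: float = 1.0) -> float:
    """Estimate daily energy in kWh.

    Assumes energy scales linearly with the number of tokens generated,
    which is a simplification of real inference behavior.
    """
    return queries_per_day * wh_per_query * token_multiplier / 1000.0


# Hypothetical workload of one million queries per day.
for multiplier in (1.0, 1.5, 10.0):
    kwh = daily_energy_kwh(1_000_000, BASELINE_WH_PER_QUERY, multiplier)
    print(f"{multiplier:>4}x tokens -> {kwh:,.0f} kWh/day")
```

Under these assumptions, a million daily queries come to about 300 kWh at baseline, 450 kWh at 1.5x the tokens, and 3,000 kWh at 10x.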

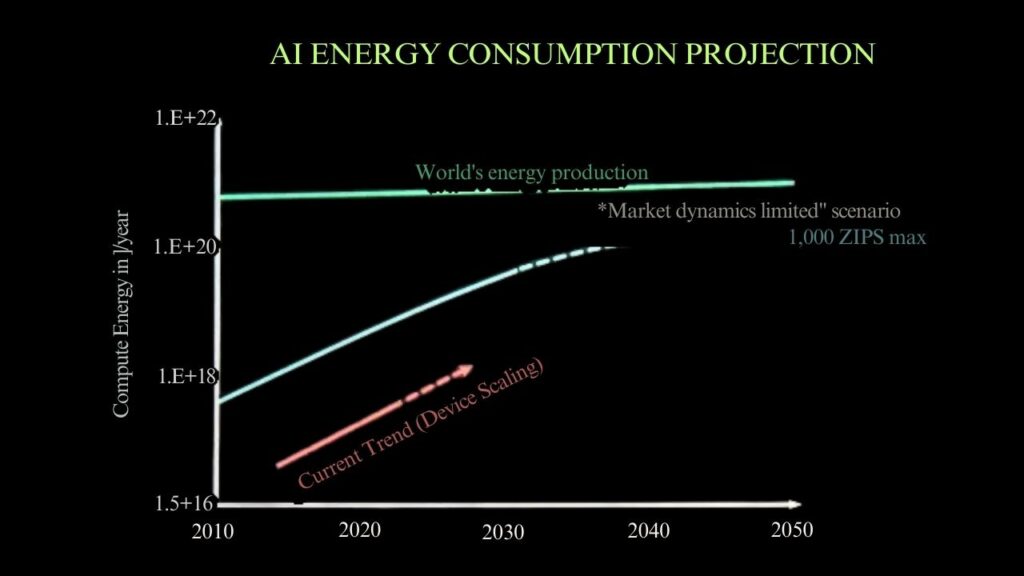

Still, at a global scale, the energy consumption of AI models is substantial. According to some projections, running AI data centers could account for 10% of US electricity use by 2030 if efficiency does not improve. This underscores why resource efficiency in AI is a critical environmental concern.

Why Does This Matter? The Environmental and Cost Impact

AI’s hefty computing demands affect both financial and environmental arenas.

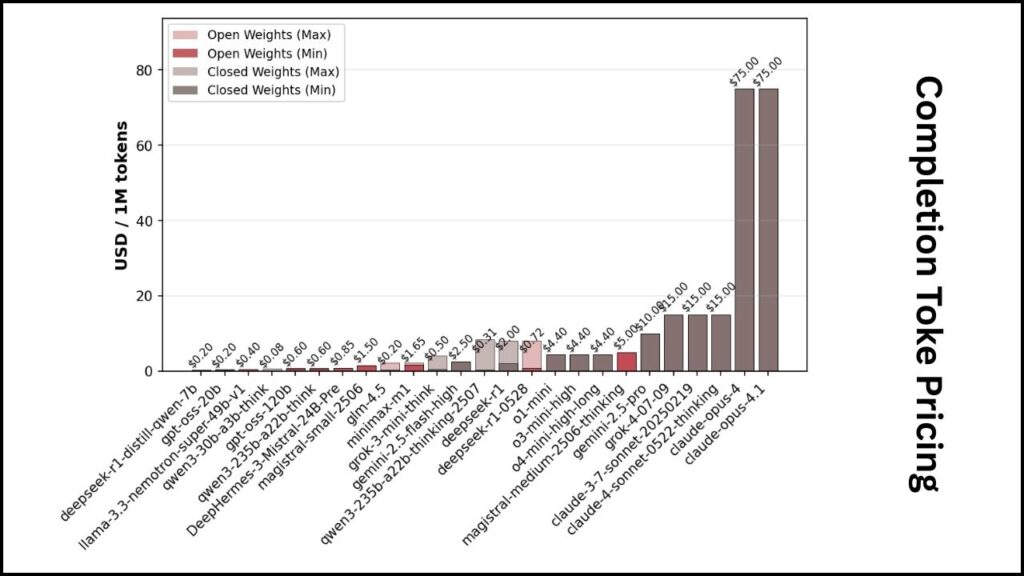

- Cost: Though open-source models often have lower per-token costs, the larger number of tokens they use can make each query more expensive overall. For companies or individuals hosting their own open models, this can translate into higher cloud fees and hardware requirements.

- Environment: Data centers require massive amounts of electricity, much of which currently comes from non-renewable sources, so AI’s surging demand adds to global greenhouse gas emissions. For example, research indicates that AI-related electricity consumption could add 1.7 gigatons of greenhouse gas emissions between 2025 and 2030.
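The cost point comes down to simple arithmetic: cost per query equals tokens used times price per token. The sketch below compares two models using hypothetical prices and token counts (not real vendor pricing) and computes the token multiplier at which the cheaper-per-token model stops saving money.

```python
def query_cost(tokens: int, price_per_million_tokens: float) -> float:
    """Dollar cost of one query at a given price per million tokens."""
    return tokens * price_per_million_tokens / 1_000_000


def break_even_multiplier(closed_price: float, open_price: float) -> float:
    """Token multiplier at which the cheaper-per-token model costs the same per query."""
    return closed_price / open_price


# Hypothetical prices (dollars per million tokens) and token counts.
CLOSED_PRICE, OPEN_PRICE = 10.0, 3.0
closed_cost = query_cost(500, CLOSED_PRICE)  # concise closed model
open_cost = query_cost(2_500, OPEN_PRICE)    # open model using 5x the tokens

print(f"closed model: ${closed_cost:.4f} per query")
print(f"open model:   ${open_cost:.4f} per query")
print(f"break-even token multiplier: "
      f"{break_even_multiplier(CLOSED_PRICE, OPEN_PRICE):.2f}x")
```

In this example the open model is 70% cheaper per token, yet it costs more per query: its 5x token usage exceeds the break-even multiplier of about 3.3x.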

Industry Efforts Toward Sustainable AI

The good news? Industry leaders, researchers, and universities worldwide are actively working to create smaller, more efficient AI models that maintain accuracy while slashing energy use.

Some promising approaches include:

- Model optimization: Reducing unnecessary calculations, pruning redundant neural network paths, and improving algorithm efficiency.

- Hardware innovations: Designing chips specifically for AI workloads that consume less power per operation.

- Transparency and benchmarking: Open sharing of energy use data encourages awareness and competitive energy-conscious design improvements.

- Practical AI configuration: Reducing the numerical precision of internal calculations, shortening answer lengths, and using smaller models for simple tasks.
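Reducing numerical precision is commonly done through quantization: storing weights as small integers plus a scale factor instead of 32-bit floats. The following is a minimal sketch of symmetric, per-tensor int8 quantization on a toy list of weights, not the specific method used in the research mentioned below. Real frameworks operate on tensors and use more elaborate schemes (per-channel scales, calibration data, and so on).

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: floats -> integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Each float is stored as round(w / scale), clamped to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximately recover the original floats."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.88, -0.5]  # toy weights, not from a real model
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("quantized:", q)
print(f"scale={scale:.4f}, max round-trip error={max_error:.5f}")
# Storage per weight: 4 bytes as float32 versus 1 byte as int8.
print(f"bytes: float32={4 * len(weights)}, int8={1 * len(weights)}")
```

The trade-off is a small, bounded rounding error (at most half the scale per weight) in exchange for a 4x reduction in storage, which also reduces memory traffic during inference.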

For instance, IBM’s Telum II Processor and IBM Spyre Accelerator—set to launch in 2025—target reducing AI-related energy and data center footprints. Similarly, research from University College London (UCL) shows certain model tweaks can reduce AI energy needs by up to 90% without hurting user experience.

A Practical Guide: How to Navigate the Open-Source AI Power Challenge

If you are considering deploying or using open-source AI, here’s a clear step-by-step approach:

1. Choose the Right Model for Your Task

Not all models are created equal. For many business cases, smaller, task-specific models are more energy-efficient and cost-effective than large generalist ones.
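One way to apply this step is to evaluate several candidates on your own task and pick the smallest model that clears your accuracy bar. In this sketch, the model names, sizes, and accuracy scores are hypothetical placeholders; in practice they would come from your own evaluation set.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    params_billion: float
    task_accuracy: float  # measured on your own evaluation set


def pick_smallest(candidates: list[Candidate],
                  min_accuracy: float) -> Optional[Candidate]:
    """Return the smallest model meeting the accuracy threshold, or None."""
    eligible = [c for c in candidates if c.task_accuracy >= min_accuracy]
    if not eligible:
        return None
    return min(eligible, key=lambda c: c.params_billion)


# Hypothetical candidates and scores, for illustration only.
candidates = [
    Candidate("large-general", 70, 0.91),
    Candidate("medium-general", 13, 0.88),
    Candidate("small-task-tuned", 3, 0.89),
]
choice = pick_smallest(candidates, min_accuracy=0.87)
print(choice.name if choice else "no model meets the threshold")
```

Raising the threshold above what the smaller models achieve pushes the choice back to the larger model, which makes the accuracy-versus-efficiency trade-off explicit.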

2. Optimize Your AI Workflows

Use pruning and quantization techniques to reduce model size and computing requirements. This lowers latency and power consumption.
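As an illustration of pruning, here is a minimal sketch of unstructured magnitude pruning: zeroing out the weights with the smallest absolute values. It is one common pruning technique, shown on a toy list of weights. Note that zeroed weights only save compute when the runtime uses sparse kernels or when pruning removes whole structures such as neurons or attention heads.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute weight value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2, -0.02, 0.6]  # toy weights
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

In practice, pruning is usually followed by a short round of fine-tuning so the remaining weights can compensate for the removed ones.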

3. Monitor Token Usage Carefully

Track the number of tokens your application processes and set limits where appropriate to avoid unnecessarily long responses.
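Most generation APIs let you cap output length directly (Hugging Face's `generate` accepts `max_new_tokens`, for example). Alongside such a cap, a simple tracker can show where tokens are going. This sketch is hypothetical: the `TokenTracker` class is not from any library, and it counts whitespace-separated words as a rough proxy, since real tokenizers split text differently.

```python
class TokenTracker:
    """Track approximate token usage and count responses over a limit."""

    def __init__(self, max_tokens_per_response: int):
        self.max_tokens_per_response = max_tokens_per_response
        self.total_tokens = 0
        self.over_limit = 0

    @staticmethod
    def approx_tokens(text: str) -> int:
        # Rough proxy: whitespace-separated words. Real tokenizers
        # usually produce more tokens than words.
        return len(text.split())

    def record(self, response: str) -> int:
        """Add a response's token count to the totals and return it."""
        n = self.approx_tokens(response)
        self.total_tokens += n
        if n > self.max_tokens_per_response:
            self.over_limit += 1
        return n


tracker = TokenTracker(max_tokens_per_response=50)
tracker.record("Paris is the capital of France.")
tracker.record("word " * 120)  # an unnecessarily long response
print(f"total={tracker.total_tokens}, over limit={tracker.over_limit}")
```

Logging these counts per feature or per prompt template makes it easier to spot which parts of an application generate unnecessarily long responses.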

4. Consider Hybrid Approaches

Use closed, efficient models for simple tasks and open-source models when greater flexibility or customization is needed.
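A hybrid setup needs a routing rule. The sketch below is one hypothetical policy, not an established standard: requests that need a customized model or must stay on your own infrastructure go to a self-hosted open model, and everything else goes to an efficient hosted model. The backend names and request fields are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt: str
    needs_custom_model: bool = False    # e.g., domain fine-tuning required
    data_must_stay_local: bool = False  # e.g., data cannot leave your servers


def route(request: Request) -> str:
    """Pick a hypothetical backend name for a request."""
    if request.needs_custom_model or request.data_must_stay_local:
        return "self-hosted-open-model"
    return "hosted-efficient-model"


print(route(Request("What is the capital of France?")))
print(route(Request("Summarize this contract.", data_must_stay_local=True)))
```

Keeping the policy in one function makes it easy to add further criteria later, such as prompt length or task type.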

5. Advocate for Transparency

Encourage open sharing of energy consumption metrics to foster industry-wide improvements and awareness around AI’s environmental impact.
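Sharing energy metrics starts with measuring them consistently. Energy in watt-hours is average power in watts multiplied by time in hours. This sketch assumes you already have an average power reading for the serving hardware (for example, from GPU telemetry such as `nvidia-smi`); the model name and all numbers are hypothetical.

```python
import json


def energy_wh(avg_power_watts: float, duration_seconds: float) -> float:
    """Energy in watt-hours: average power times time in hours."""
    return avg_power_watts * duration_seconds / 3600.0


def energy_report(model_name: str, avg_power_watts: float,
                  total_seconds: float, num_queries: int) -> dict:
    """Summarize total and per-query energy for a serving window."""
    total_wh = energy_wh(avg_power_watts, total_seconds)
    return {
        "model": model_name,
        "queries": num_queries,
        "total_wh": round(total_wh, 3),
        "wh_per_query": round(total_wh / num_queries, 4),
    }


# Hypothetical measurement: 400 W average draw for one hour, 2,000 queries.
report = energy_report("example-model", avg_power_watts=400.0,
                       total_seconds=3600.0, num_queries=2000)
print(json.dumps(report, indent=2))
```

Reporting a per-query figure alongside the total makes numbers comparable across deployments of different sizes. A fuller report would also account for CPUs, memory, and cooling overhead.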

FAQs About Open-Source AI Models and Computing Power

Q1: Why are open-source AI models less efficient?

Open models prioritize accessibility and customization, which can lead to less optimization and longer output sequences, hence using more compute per query.

Q2: Can the energy cost of AI queries be reduced?

Yes. Through hardware improvements, smaller models, algorithmic adjustments, and efficient cloud infrastructure, AI’s energy demands can be dramatically lowered.

Q3: Does using open-source AI harm the environment more?

If not optimized, open-source AI can increase electricity consumption and carbon footprints compared to more efficient closed models, but ongoing innovation is addressing this.

Q4: How can businesses reduce AI-related costs?

They can select energy-efficient models, optimize code and queries, limit heavy usage, and use cloud services that focus on sustainability.

Q5: What is the future of sustainable AI?

Sustainable AI will likely be designed “for sustainability,” with energy efficiency built in through cutting-edge hardware, smarter algorithms, and transparent reporting of energy use.