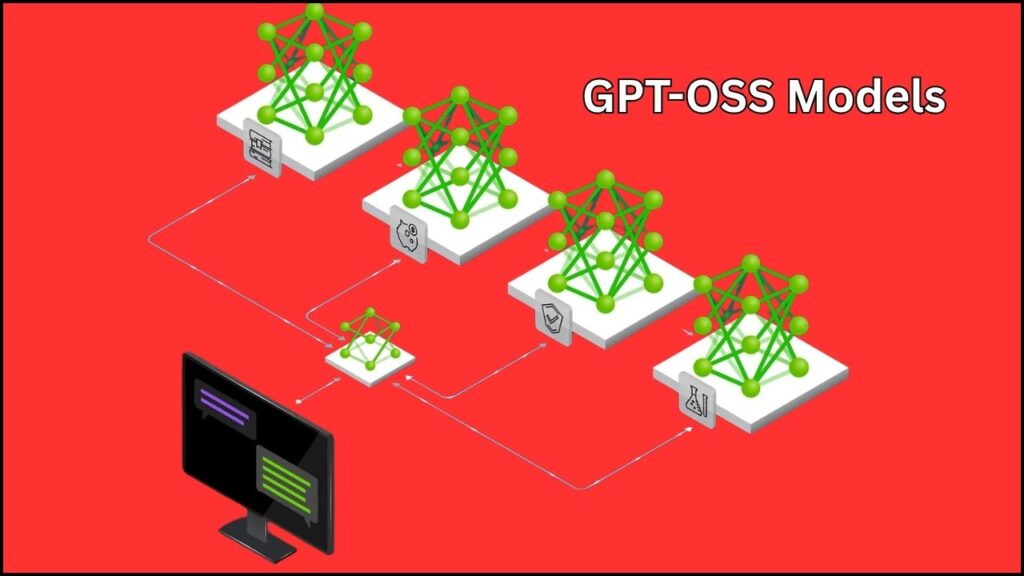

OpenAI has released its gpt-oss open-weight models, which are optimized to run fast locally on NVIDIA RTX GPUs without any cloud access. Users, from hobbyists to professionals, can now run state-of-the-art large language models (LLMs) directly on their own machines equipped with RTX or RTX PRO graphics cards. Running locally delivers fast inference, better privacy because data stays on the machine, and significant cost savings because there are no cloud computing fees.

The collaboration between OpenAI and NVIDIA widens access to powerful AI models, with optimized versions tailored for both consumer-grade and professional-grade GPUs. The models support context windows of up to 131,072 tokens and the MXFP4 precision format, enabling sophisticated AI tasks that previously demanded cloud infrastructure.

OpenAI’s Latest Models Now Run Blazing Fast on NVIDIA RTX GPUs

| Feature | Details |
|---|---|
| Models Available | gpt-oss-20b (requires ≥16GB VRAM) and gpt-oss-120b (requires 80GB VRAM, e.g., NVIDIA H100) |
| Performance | Up to 256 tokens/sec on RTX 5090; optimized for both desktop and datacenter environments |
| Context Window | Supports up to 131,072 tokens for multi-document understanding and complex tasks |
| Precision Format | MXFP4 mixed precision improves speed without sacrificing output quality |
| Optimization Techniques | INT4/INT8 quantization, Flash Attention, CUDA Graphs, and Low-Rank Adaptation (LoRA) |
| Run Environment | Local execution on NVIDIA GeForce RTX and RTX PRO GPUs; no cloud needed |
| Benefits | Superior response speed, data privacy, lower costs, offline availability |
| Official Resource | OpenAI gpt-oss announcement |

OpenAI’s gpt-oss models, optimized to run blazing fast on NVIDIA RTX GPUs locally, represent a landmark shift in AI accessibility. Whether you are a developer, researcher, or content creator, these models offer cloud-level AI power—right on your desktop or workstation.

Thanks to NVIDIA’s advanced GPU architecture and software stack, coupled with OpenAI’s open-weight, high-performance models, users can now experience rapid AI inference, enhanced data privacy, and dramatically reduced costs without reliance on external cloud services. This breakthrough makes powerful AI more accessible, flexible, and secure than ever before.

What Are the gpt-oss Models?

OpenAI’s gpt-oss models are a new family of open-weight large language models optimized for local execution on NVIDIA RTX GPUs. There are two main variants:

- gpt-oss-20b: Tailored for consumer hardware with 16GB or more VRAM (e.g., RTX 4090, RTX 5090). It reaches up to 256 tokens per second on an RTX 5090, enabling responsive, real-time interactions.

- gpt-oss-120b: Designed for GPUs with 80GB of VRAM, such as the datacenter-class NVIDIA H100 or A100. This model can handle very large-scale workloads and complex reasoning at datacenter-grade throughput.

Both models support context windows of up to 131,072 tokens (2^17, commonly written as 128K). That is enough room for advanced use cases like deep document analysis, multi-step reasoning, and work across large codebases.
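The VRAM requirements above follow roughly from parameter count times bits per weight. Here is a minimal sketch of that arithmetic. It assumes total parameter counts of about 21 billion and 117 billion (figures not stated in this article), and it assumes every weight is stored in MXFP4 at 4.25 bits (a 4-bit element plus an 8-bit scale shared across 32 elements). In practice some layers may be kept at higher precision, and the KV cache and activations need memory on top of the weights.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# MXFP4: 4-bit elements plus one 8-bit scale shared by each block of 32.
MXFP4_BITS = 4 + 8 / 32  # 4.25 bits per weight

# Assumed total parameter counts (not taken from this article).
ASSUMED_PARAMS = {"gpt-oss-20b": 21e9, "gpt-oss-120b": 117e9}

for name, params in ASSUMED_PARAMS.items():
    gb = weight_memory_gb(params, MXFP4_BITS)
    print(f"{name}: ~{gb:.1f} GB of weights")
```

Under these assumptions the estimates come to roughly 11 GB and 62 GB, which is consistent with the 16GB and 80GB requirements once runtime overhead is added.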

Why NVIDIA RTX GPUs Are Ideal for Running gpt-oss

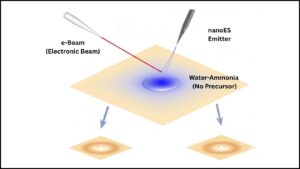

NVIDIA’s RTX GPUs excel at accelerating deep learning inference for several reasons:

- Large Video Memory (VRAM): Sufficient memory capacity accommodates the large model weights and extended context windows of gpt-oss models.

- CUDA-enabled Acceleration: NVIDIA’s CUDA technology enables massively parallel matrix multiplication and other tensor operations critical to neural networks.

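- Tensor Cores & Mixed Precision: Tensor Cores accelerate low-precision math, including formats like MXFP4, a 4-bit floating-point format with a scale shared across each block of values. This cuts memory use and increases speed while largely maintaining output quality.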

- Advanced AI Software Stack: Tools such as TensorRT-LLM optimize latency and throughput for AI workloads running locally, leveraging CUDA Graphs and Flash Attention for further speed enhancements.

This hardware-software synergy allows local PCs and workstations to deliver cloud-grade AI inference performance.
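To make the MXFP4 idea concrete, here is a simplified pure-Python sketch of block-scaled 4-bit quantization. It is an illustration, not the kernel NVIDIA or OpenAI ship. The value grid is the E2M1 set of magnitudes, and the rule for picking the shared power-of-two scale is an assumption based on the OCP Microscaling format description.

```python
import math

# Magnitudes representable by a 4-bit E2M1 element (sign handled separately).
E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK_SIZE = 32  # elements that share one 8-bit power-of-two scale


def quantize_block(values):
    """Return (shared_exponent, element_values) for one block of floats."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return 0, [0.0] * len(values)
    # Assumed scale rule: align the block maximum with the top E2M1 binade.
    exp = math.floor(math.log2(amax)) - 2
    scale = 2.0 ** exp
    elements = []
    for v in values:
        mag = min(abs(v) / scale, E2M1_GRID[-1])  # clamp to the largest value
        nearest = min(E2M1_GRID, key=lambda g: abs(g - mag))
        elements.append(math.copysign(nearest, v))
    return exp, elements


def dequantize_block(exp, elements):
    """Reconstruct approximate floats from the shared exponent and elements."""
    scale = 2.0 ** exp
    return [e * scale for e in elements]


block = [0.1 * i for i in range(BLOCK_SIZE)]
exp, elements = quantize_block(block)
restored = dequantize_block(exp, elements)
worst = max(abs(a - b) for a, b in zip(block, restored))
print(f"shared exponent: {exp}, worst absolute error: {worst:.3f}")
```

Each block stores 32 four-bit elements plus one shared 8-bit scale, so the cost is 4.25 bits per value, compared with 16 bits for FP16 or BF16.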

Practical Benefits of Using gpt-oss Locally on RTX GPUs

Running these highly optimized AI models locally opens many practical advantages:

- Significant Speed Improvements: Local inference avoids network delays, providing nearly instantaneous responses. On an RTX 5090, gpt-oss-20b can generate up to 256 tokens per second, enabling fluid conversational AI and real-time applications.

- Enhanced Privacy and Security: As data never leaves your machine, sensitive information stays secure, critical for industries needing confidential data handling.

- Cost-Effective AI Usage: Avoid ongoing cloud compute charges by investing once in the right GPU hardware. This reduces operating expenses for AI workloads dramatically.

- Offline Operation: AI tools remain accessible in environments with poor or no internet connectivity, important for fieldwork or secure facilities.

- Customization and Fine-tuning: Local access to model weights allows developers to fine-tune models efficiently using methods like Low-Rank Adaptation (LoRA), adapting AI behavior to specific tasks or domains.
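To put the throughput figure in perspective, the sketch below converts tokens per second into wall-clock generation time. It uses the "up to 256 tokens per second" figure quoted above as a best case. Real throughput varies with prompt length, context size, and hardware, and the response lengths are arbitrary examples.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens at a steady decoding rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


PEAK_TOKENS_PER_SECOND = 256.0  # best-case figure quoted for an RTX 5090

for length in (128, 512, 2048):  # arbitrary example response lengths
    seconds = generation_seconds(length, PEAK_TOKENS_PER_SECOND)
    print(f"{length} tokens: {seconds:.1f} s")
```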

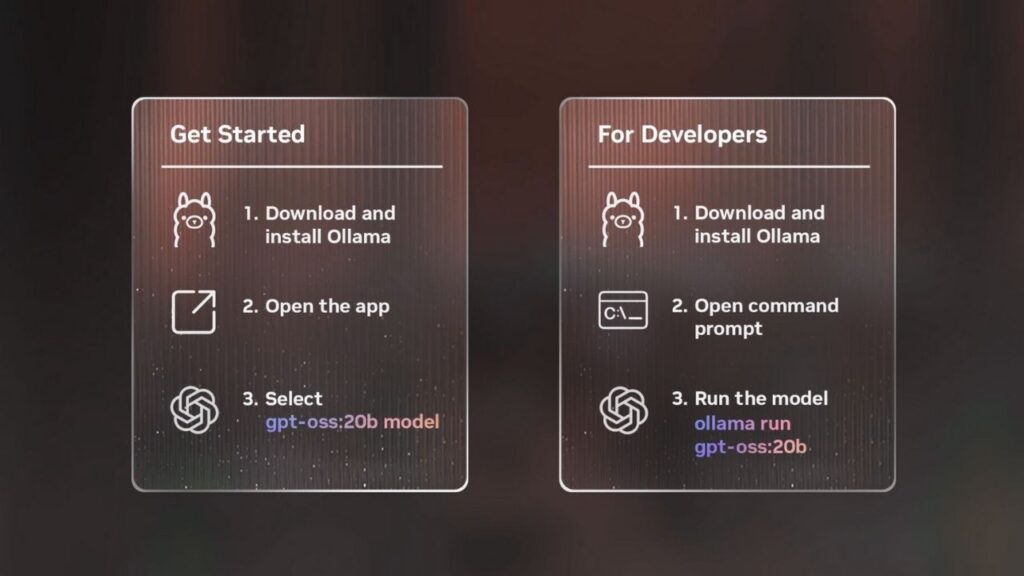

How to Get Started Running gpt-oss Models Locally

If you are interested in deploying these models on your NVIDIA RTX GPU setup, here is a straightforward approach:

Step 1: Check Your Hardware Compatibility

- For the smaller gpt-oss-20b, a consumer GPU with 16GB or higher VRAM such as the NVIDIA RTX 4090 or RTX 5090 is required.

- For the more powerful gpt-oss-120b, a GPU with 80GB of VRAM, such as the datacenter-class NVIDIA H100 or A100, is necessary.
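One way to check this is to read the installed VRAM from `nvidia-smi`. The sketch below assumes `nvidia-smi` is on the PATH and uses its CSV query output, which reports memory in MiB. The helper names are hypothetical, the thresholds are the 16GB and 80GB figures listed above, and the sample line is illustrative rather than captured from a real machine.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total",
    "--format=csv,noheader,nounits",
]

# VRAM requirements listed in this article, in GB.
REQUIRED_GB = {"gpt-oss-20b": 16, "gpt-oss-120b": 80}


def parse_gpus(csv_text):
    """Parse 'name, memory_mib' lines into (name, memory_mib) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, mib = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mib)))
    return gpus


def models_that_fit(memory_mib):
    """List the models whose stated VRAM requirement this GPU meets."""
    # Cards report slightly under their nominal size, so round to whole GB.
    approx_gb = round(memory_mib / 1024)
    return [model for model, need in REQUIRED_GB.items() if approx_gb >= need]


def query_gpus():
    """Run nvidia-smi and return the parsed GPU list (needs an NVIDIA driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpus(result.stdout)


# Illustrative sample line; call query_gpus() on a real machine instead.
for name, mib in parse_gpus("NVIDIA GeForce RTX 4090, 24564\n"):
    print(name, mib, models_that_fit(mib) or "neither model fits")
```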

Step 2: Install the Required Software

- Download and install Ollama, a user-friendly application that downloads and runs these models and provides both UI and command-line interfaces.

- Alternatively, advanced users may explore frameworks like llama.cpp or NVIDIA TensorRT-LLM for customized deployments.

Step 3: Download and Run the Models

Run simple commands to launch the models locally, for example:

    ollama run gpt-oss:20b
    ollama run gpt-oss:120b

The first run downloads the model weights. After that, the model loads onto your GPU, and you can enter prompts or integrate it into applications such as chatbots or research tools.
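For application integration, Ollama also exposes a local HTTP API. Here is a minimal sketch using only the Python standard library. It assumes Ollama is running on its default port 11434 and that the `gpt-oss:20b` model has already been downloaded. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default endpoint


def build_request(prompt, model="gpt-oss:20b"):
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="gpt-oss:20b"):
    """Send the prompt and return the parsed JSON reply."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read().decode("utf-8"))


def tokens_per_second(reply):
    """Decoding rate from Ollama's eval_count and eval_duration (nanoseconds)."""
    return reply["eval_count"] / (reply["eval_duration"] / 1e9)


# Usage, with the Ollama server running:
#   reply = generate("Summarize the benefits of local inference.")
#   print(reply["response"])
#   print(f"{tokens_per_second(reply):.0f} tokens/sec")
```

Measuring `tokens_per_second` on your own hardware is a simple way to compare your setup against the quoted throughput figures.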

Step 4: Explore Optimization Features

- Apply techniques like INT4/INT8 quantization to reduce the memory footprint, usually at a small cost in accuracy.

- Use Flash Attention to speed up handling of long context windows.

- Leverage CUDA Graphs to minimize latency.

- Implement Low-Rank Adaptation (LoRA) to fine-tune models efficiently for specialized needs.

These options make the models flexible and adaptable without demanding heavy additional infrastructure.
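The efficiency of LoRA comes from training two small low-rank matrices instead of updating a full weight matrix. The sketch below compares the trainable parameter counts for a single layer. The layer shape (4096 x 4096) and rank (8) are hypothetical values chosen for illustration, not taken from the gpt-oss architecture.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when updating the whole d_out x d_in matrix."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for LoRA factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer shape and rank, for illustration only.
D_OUT, D_IN, RANK = 4096, 4096, 8

full = full_update_params(D_OUT, D_IN)
lora = lora_update_params(D_OUT, D_IN, RANK)
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {RANK}): {lora:,} trainable parameters")
print(f"LoRA trains {lora / full:.2%} of the full count")
```

With these example values, LoRA trains well under 1% of the parameters a full update of that layer would need, which is why it can fit on local hardware.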

Real-World Use Cases for gpt-oss on RTX GPUs

- Academic Researchers: Process and analyze large document sets or datasets locally without wait times.

- Software Developers: Build AI assistants or code-generation tools that run fully on desktop or workstation.

- Content Creators: Generate articles, marketing copy, and scripts quickly with complete data privacy.

- Enterprises: Deploy AI solutions privately on internal networks, ensuring compliance and data control.

The combination of powerful hardware and open-weight models brings AI capabilities previously reserved for cloud-only deployments into the hands of end-users everywhere.

FAQs About OpenAI’s Latest Models Now Run Blazing Fast on NVIDIA RTX GPUs

Q1: What GPUs are compatible with gpt-oss?

A1: gpt-oss-20b runs on consumer-level NVIDIA RTX GPUs with 16GB+ VRAM (e.g., RTX 4090/5090). The larger gpt-oss-120b requires high-memory GPUs with 80GB VRAM, such as the datacenter-class NVIDIA H100 or A100.

Q2: Do I need an internet connection to run these models?

A2: No. Once downloaded, both models run completely offline on your hardware, ensuring privacy and uninterrupted access.

Q3: How does local performance compare to cloud-based AI?

A3: Running locally on an RTX 5090, gpt-oss-20b achieves around 256 tokens per second, often matching or exceeding cloud-based response times when factoring in network delays.

Q4: Can the models be fine-tuned for specific tasks?

A4: Yes. Methods like LoRA enable efficient fine-tuning on local hardware, allowing customization for specialized domains with much lower compute requirements than full fine-tuning.