Quantum‑Si to Participate in GenomeWeb Webinar: Proteomics—the large-scale study of proteins, their structures, and functions—is at the heart of modern life sciences. As the field grows, so does the complexity of the data it generates. That’s why proteomics data standardization is a hot topic in 2025, and why Quantum‑Si’s participation in the upcoming GenomeWeb webinar, “From Raw to Reusable: Considerations for Data Integration and Real-World Standardization in Proteomics,” is making waves across research and industry circles.

In this article, we’ll explore what proteomics data standardization means, why it matters, and how Quantum‑Si and its partners are shaping the future of this crucial field. Whether you’re a student, a seasoned scientist, or just curious, you’ll find practical advice, clear examples, and expert insights to help you understand and navigate the world of proteomics data.

Quantum‑Si to Participate in GenomeWeb Webinar

| Topic | Details |

|---|---|

| Event | GenomeWeb Webinar: “From Raw to Reusable: Considerations for Data Integration and Real-World Standardization in Proteomics” |

| Date | June 20, 2025 |

| Participants | Quantum‑Si, DNAnexus, Olink (Thermo Fisher Scientific), Northwestern University |

| Focus | Data integration, standardization, metadata annotation, reproducibility, and FAIR data principles |

| Key Data Standards | mzML, mzIdentML, mzQuantML, mzTab, SDRF-Proteomics, MIAPE |

| Career Impact | Improved data sharing, reproducibility, and collaboration; essential skills for bioinformatics and proteomics professionals |

| Official Website | Quantum‑Si |

Proteomics data standardization is transforming how scientists collect, analyze, and share information about proteins. By adopting common formats, rigorous metadata practices, and normalization methods, researchers can ensure their data is reproducible, reusable, and ready for the next big discovery. Quantum‑Si’s participation in the GenomeWeb webinar highlights the importance of collaboration and continuous learning in this rapidly evolving field.

Whether you’re just starting out or leading a research team, understanding and applying these standards is key to success in proteomics and beyond.

What Is Proteomics Data Standardization?

Proteomics data standardization means creating common rules and formats for collecting, storing, and sharing protein data. Imagine if every scientist used a different language to describe their experiments—sharing and comparing results would be almost impossible! Standardization ensures that data from different labs, instruments, and studies can be easily understood, reused, and trusted by everyone.

Why Is It Important?

- Reproducibility: Scientists need to repeat experiments and get the same results. Standardized data makes this possible.

- Data Sharing: Researchers around the world can combine their findings, leading to bigger discoveries.

- Efficiency: Saves time and money by reducing errors and making data analysis faster.

- Innovation: Enables new tools and technologies to work together smoothly.

The Challenges of Proteomics Data

Proteomics data is complex. Each experiment can generate millions of data points, with information about proteins, how they were measured, the equipment used, and much more. The variety of technologies, protocols, and study designs adds another layer of complexity.

Common Challenges

- Different Data Formats: Labs and instruments often use their own formats, making integration tricky.

- Metadata Gaps: Missing information about how data was collected or processed can make it useless to others.

- Volume and Variety: The sheer size and diversity of data make storage and analysis difficult.

How Is the Proteomics Community Solving These Problems?

1. Development of Standard Data Formats

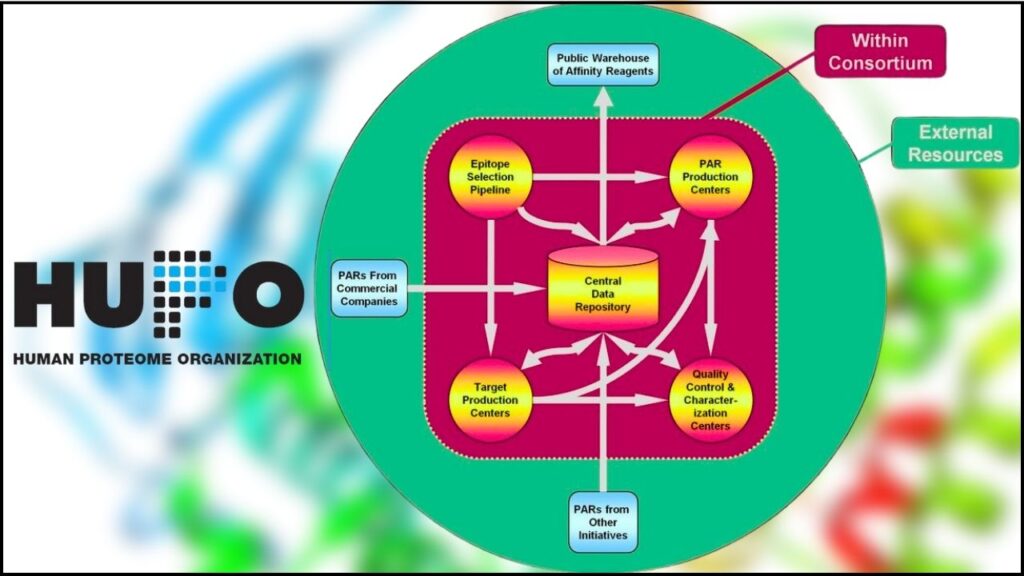

The Human Proteome Organization (HUPO) Proteomics Standards Initiative (PSI) has led the way in creating widely accepted data formats and guidelines. Here are some of the most important ones:

- mzML: For raw mass spectrometry data.

- mzIdentML: For peptide and protein identification results.

- mzQuantML: For quantification workflows.

- mzTab: For reporting proteomics and metabolomics results in a simple, tab-delimited format.

- SDRF-Proteomics: For describing experimental design and sample metadata in a standardized way.

- MIAPE: Minimum Information About a Proteomics Experiment—guidelines for what information must be reported.

These standards make it easier for researchers to share, compare, and reuse data, no matter where or how it was generated.

2. Metadata Annotation and FAIR Principles

Comprehensive metadata—information about how, when, and where data was collected—is crucial. The FAIR principles (Findable, Accessible, Interoperable, and Reusable) guide researchers to make their data as useful as possible for others.

For example, major proteomics data repositories now require submitters to include detailed experimental design and sample metadata using the SDRF-Proteomics format. This ensures that anyone accessing the data can understand and reuse it effectively.

3. Data Repositories and Knowledge Management

Standardized data is stored in public repositories, where it can be accessed and reused by researchers worldwide. Advanced Laboratory Information Management Systems (LIMS) help labs organize and connect their data, turning individual experiments into valuable knowledge bases.

Practical Guide: How to Standardize Proteomics Data

Step 1: Collect Comprehensive Metadata

- Record all details about your experiment: sample type, preparation method, instrument settings, software used, and more.

- Use controlled vocabularies to ensure consistency.

Step 2: Use Standard Data Formats

- Export raw data in mzML format.

- Store identification results in mzIdentML.

- Use mzQuantML for quantification workflows.

- Report results using mzTab or SDRF-Proteomics for experimental design.

Step 3: Normalize Your Data

Normalization adjusts your data to remove technical variations and make biological comparisons more accurate. Common methods include:

- Total Intensity Normalization: Assumes total protein amount is similar across samples; scales data accordingly.

- Median-Based Normalization: Adjusts data based on the median value for each sample.

- Quantile Normalization: Makes the distribution of values similar across samples.

Many modern software platforms offer built-in normalization tools to help researchers get the most reliable results.

Step 4: Submit to Public Repositories

- Prepare your data and metadata according to repository guidelines.

- Use available tools to create and annotate SDRF-Proteomics files.

- Deposit your data for public access and future reuse.

Step 5: Stay Updated and Collaborate

- Participate in community webinars and workshops, like the GenomeWeb event with Quantum‑Si, to stay informed about the latest standards and best practices.

- Collaborate with other researchers and organizations to improve and adopt new standards.

Real-World Example: Quantum‑Si and the Push for Standardization

Quantum‑Si, known for its single-molecule protein analysis technology, is joining forces with other industry leaders to advance data standardization. At the GenomeWeb webinar, experts will discuss:

- How to annotate and share proteomics data for maximum reproducibility

- Best practices for integrating data from different platforms

- Ways to participate in global standardization initiatives

- Tips for depositing data into public repositories and increasing its visibility

This collaborative approach ensures that proteomics research remains transparent, reliable, and impactful for years to come.

Cold Spray 3D‑Printing Technique Proves Effective For On‑Site Bridge Repair

PsiQuantum Study Reveals Roadmap For Loss-Tolerant Photonic Quantum Computing

Marvell Says Future of Cloud Computing Depends on Custom-Built Silicon Chips

FAQs About Quantum‑Si to Participate in GenomeWeb Webinar

What is proteomics?

Proteomics is the study of all the proteins in a cell, tissue, or organism. Proteins are the workhorses of biology, so understanding them helps us learn how living things function.

Why do we need data standards in proteomics?

Without standards, it’s nearly impossible to compare or combine data from different labs or studies. Standards make data sharing, analysis, and discovery much easier and more reliable.

What are some common data formats in proteomics?

Key formats include mzML (raw data), mzIdentML (identifications), mzQuantML (quantification), mzTab (results), and SDRF-Proteomics (metadata).

How do I normalize proteomics data?

Normalization methods like total intensity, median-based, and quantile normalization help remove technical biases, making your results more accurate.

Where can I store and share my proteomics data?

Public repositories are widely used for storing and sharing standardized proteomics data.

What are the benefits of standardization for my career?

Mastering data standards boosts your credibility, makes your research more impactful, and opens doors to collaborations and new opportunities in bioinformatics and proteomics.