Robots and Smart Glasses: A few years ago, the idea that robots and wearable glasses could recognize people instantly sounded like something from a Hollywood movie. But in 2025, it’s rapidly becoming part of reality.

From assisting people with memory loss to helping robots perform complex tasks without coding, this new tech ecosystem—where robots learn from what smart glasses see—is unfolding in labs, factories, and soon, maybe even in your home.

Let’s dive into what this means, how it works, and how it’s reshaping industries and society—without losing sight of ethics, privacy, and human impact.

Robots and Smart Glasses

| Feature | What It Does | Developer/Org | Why It Matters |
|---|---|---|---|
| EgoZero (robot learning system) | Robots learn manipulation tasks from first-person human video recorded with smart glasses | NYU & UC Berkeley | Zero-shot transfer from human video (no robot demonstrations); about 70% task success rate |
| Ray-Ban Meta Smart Glasses | Voice commands, object recognition, AI assistant, first-person (POV) photo and video | Meta & EssilorLuxottica | Over 2 million units sold; first major consumer AI glasses |
| Super-sensing and facial recognition (codenamed Aperol/Bellini, expected 2026) | Real-time recognition of people and always-on ambient AI | Meta (under development) | Raises key ethical and regulatory issues |
| I-XRAY demo (2024) | Unofficial facial identification built from publicly available tools | Harvard students | Exposed surveillance risks and policy gaps |
| GDPR & U.S. privacy laws | Legal guardrails for biometric data and surveillance | EU, California, Illinois | Regulate deployment of face-tracking tech |

Robots and smart glasses are no longer separate innovations; they're converging. With systems like EgoZero, robots can learn manipulation skills directly from first-person human video. Meanwhile, smart glasses are evolving from camera gadgets into real-time AI assistants that may soon be able to recognize the people you meet.

This technology promises convenience, assistance, and automation. But it also brings complex ethical challenges, especially around privacy and consent. Whether you're an engineer, teacher, or parent, staying informed is both your best defense and your best opportunity.

The line between science fiction and daily life is vanishing fast. What we do next will define how these tools shape our world.

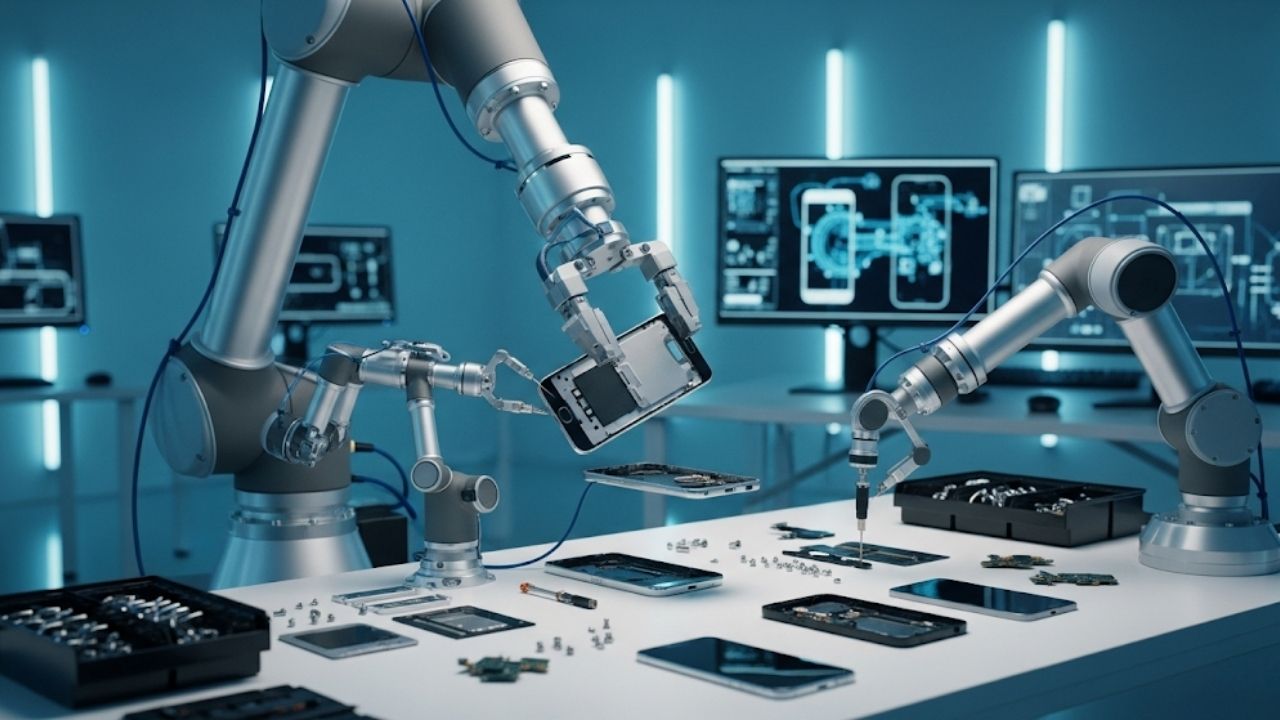

How Robots Learn Using Smart Glasses

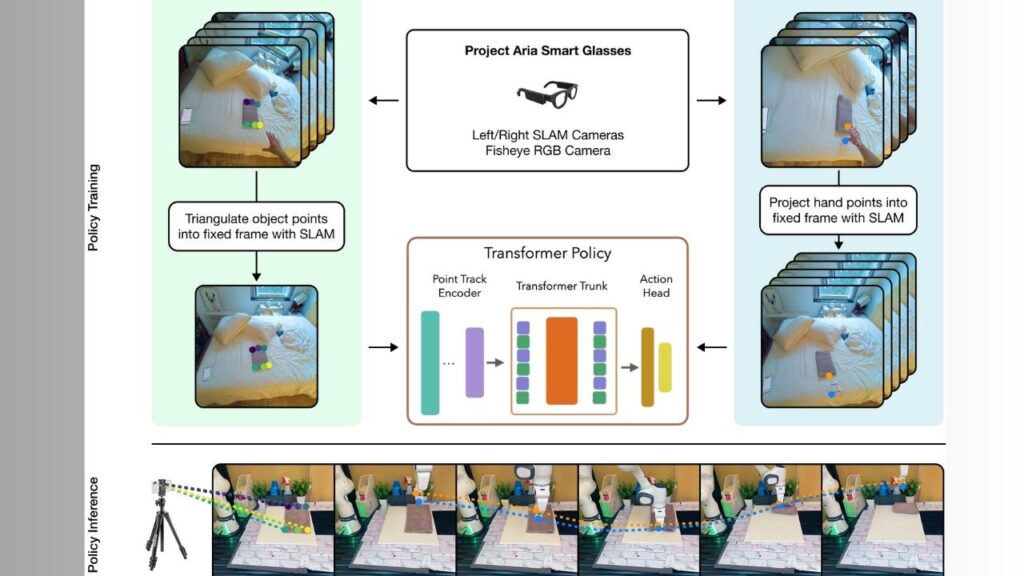

The EgoZero Innovation

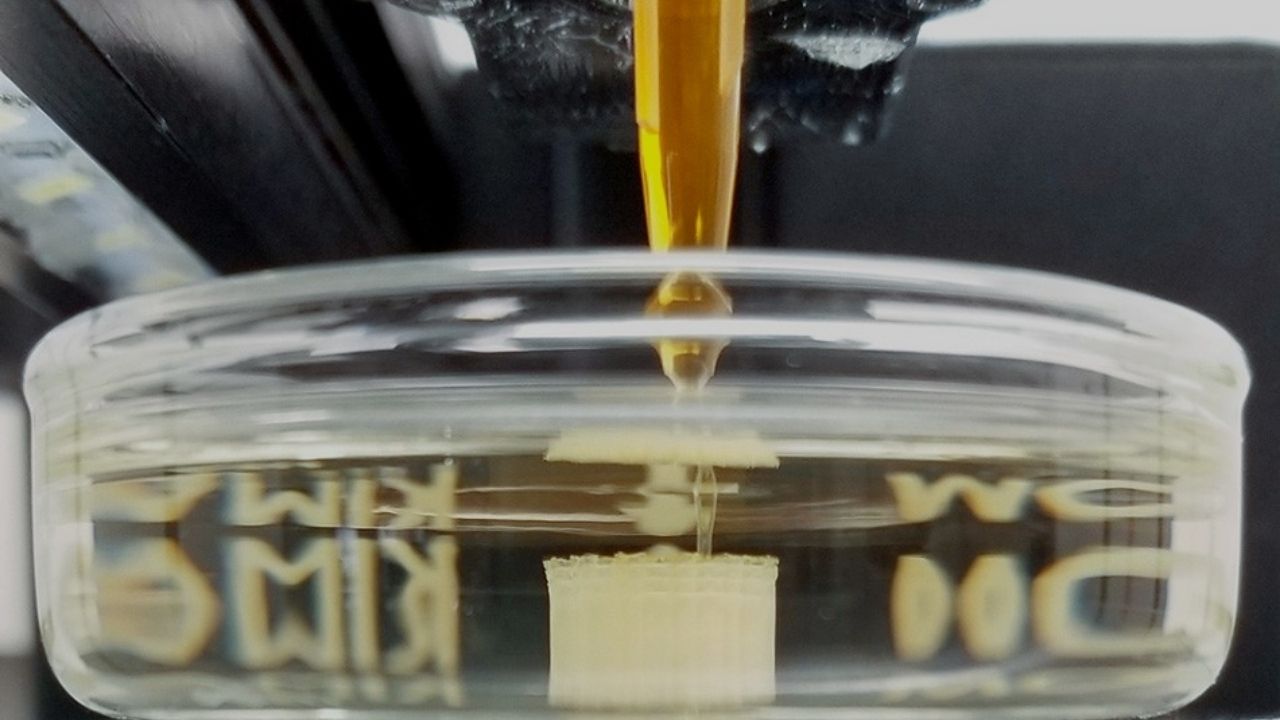

EgoZero is a system developed by researchers at New York University (NYU) and the University of California, Berkeley, and published on arXiv in May 2025. It allows robots to learn new tasks by watching humans perform them from a first-person perspective, with no task-specific robot programming or robot demonstrations required.

Instead of traditional robot training, which often takes days of trial and error, EgoZero uses about 20–30 minutes of egocentric (first-person) video per task, captured with AI-enabled smart glasses. The glasses record the scene, hand movements, object locations, and other behavior from the wearer's point of view.

A robotic arm (such as the Franka Emika Panda) then performs the same task in the real world. Example tasks include:

- Opening a microwave and placing a dish inside

- Sorting tools or toys into correct bins

- Folding clothes or wiping a surface

The result: the robot reached an average success rate of about 70% across the tested tasks with zero-shot transfer, meaning its policies were trained only on human footage and never saw a robot demonstration of those tasks. That could be a game-changer for training robots at scale.

Technical Framework Behind EgoZero

The researchers combined:

- Large Language Models (LLMs) like GPT to understand human intent

- Vision Transformer (ViT) networks to process egocentric footage

- Diffusion Action Policies (DAP) to generate robot movement strategies

This architecture lets the robot generalize what it learns from one person to similar—but not identical—situations in the real world.
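Whatever models sit on top, a pipeline like this has to turn tracked human hand motion into actions a robot gripper can execute. A common approach in human-to-robot learning, assumed here rather than taken from the EgoZero code, is to use the midpoint between the thumb and index fingertips as the gripper position and the gap between them as the open/closed signal. This minimal Python sketch assumes the fingertips have already been tracked in 3D (for example by the glasses' hand tracking) in a shared world frame; `hand_to_gripper_actions`, `to_delta_actions`, and the 3 cm grasp threshold are hypothetical.

```python
import numpy as np

# Hypothetical threshold (metres): fingertips closer than this count as a grasp.
GRASP_DIST_M = 0.03


def hand_to_gripper_actions(thumb_tips, index_tips, grasp_dist=GRASP_DIST_M):
    """Map per-frame 3D fingertip positions to gripper positions and grip states.

    thumb_tips, index_tips: array-likes of shape (T, 3) in the same world frame.
    Returns (positions, closed):
      positions: (T, 3) midpoint between thumb and index fingertips
      closed: (T,) booleans, True where the fingertips are pinched together
    """
    thumb = np.asarray(thumb_tips, dtype=float)
    index = np.asarray(index_tips, dtype=float)
    if thumb.shape != index.shape or thumb.ndim != 2 or thumb.shape[1] != 3:
        raise ValueError("expected two arrays of shape (T, 3)")
    positions = (thumb + index) / 2.0                 # pseudo gripper centre
    aperture = np.linalg.norm(thumb - index, axis=1)  # fingertip gap per frame
    closed = aperture < grasp_dist
    return positions, closed


def to_delta_actions(positions):
    """Frame-to-frame displacement, one common way to parameterise arm actions."""
    return np.diff(np.asarray(positions, dtype=float), axis=0)


# Two frames: the hand moves 10 cm along x while the fingers close.
thumb = [[0.0, 0.0, 0.0], [0.1, 0.0, 0.0]]
index = [[0.0, 0.1, 0.0], [0.1, 0.02, 0.0]]
positions, closed = hand_to_gripper_actions(thumb, index)
print(positions)                    # gripper centre in each frame
print(closed)                       # which frames count as grasping
print(to_delta_actions(positions))  # displacement between the two frames
```

A downstream policy (diffusion-based or otherwise) would then be trained to predict these positions and grip states from the robot's own observations; the sketch covers only this labeling step.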

Practical use cases:

- Home assistance for elderly or disabled individuals

- Warehouse task automation with fewer engineers

- Fast deployment in disaster zones or temporary setups

Smart Glasses with Real-Time Face Recognition

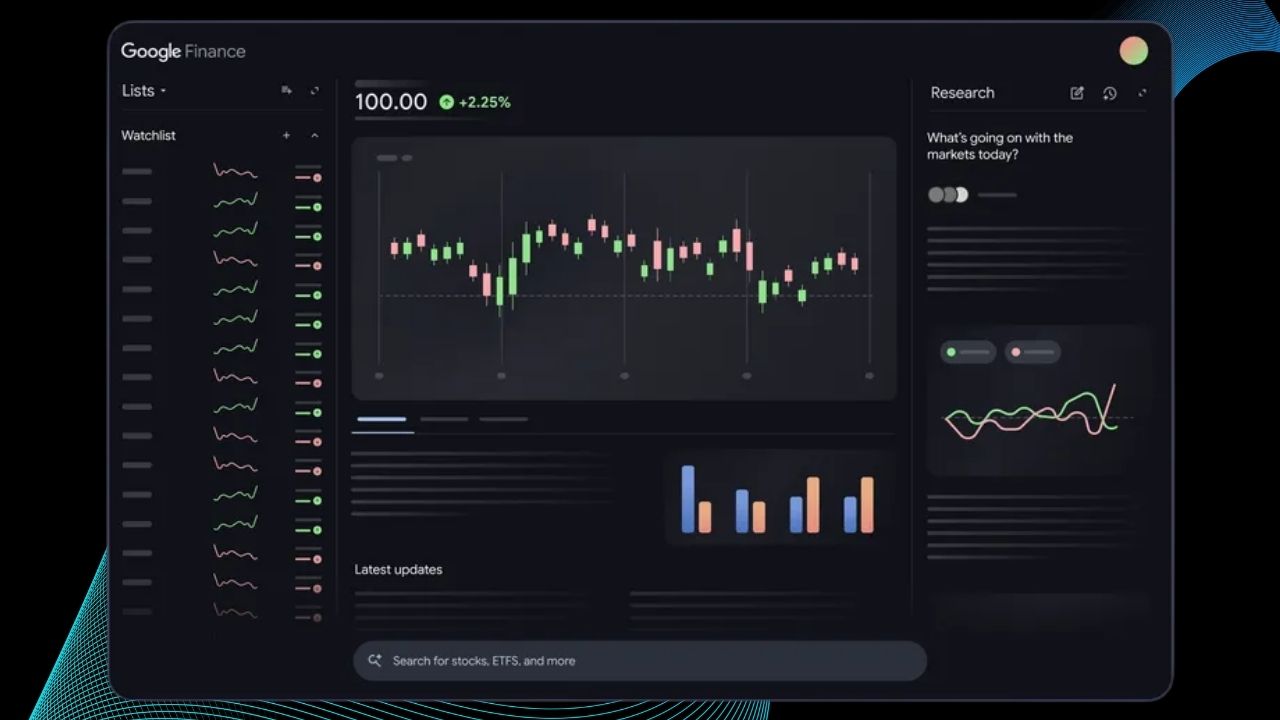

Meta’s Ray-Ban Smart Glasses

In late 2023, Meta (formerly Facebook) launched the second-generation Ray-Ban Meta Smart Glasses, developed in partnership with EssilorLuxottica. These glasses look like everyday sunglasses but include:

- A 12MP ultra-wide camera

- Directional speakers

- Voice assistant powered by Meta AI

- 32GB storage

- Seamless Facebook/Instagram integration

EssilorLuxottica reported more than 2 million units sold by early 2025, driven by creators, travelers, and accessibility users. What comes next may literally reshape how we see the world.

What’s Coming in 2026: Aperol and Bellini

Meta's upcoming smart glasses, codenamed Aperol and Bellini and expected in 2026, are reported to include features such as:

- Super-sensing: Glasses interpret surroundings 24/7 using onboard AI

- Facial Recognition: Real-time ID of people you look at

- Ambient Awareness: Notices objects, people, and even your emotional tone

Internally, Meta is reportedly testing facial recognition to identify colleagues and family members. The glasses do not offer this feature publicly because of legal hurdles, but its existence signals where wearable tech is heading.

How Facial Recognition Works in Wearables

Facial recognition in smart glasses combines multiple AI systems:

- Face Detection: Locating the human face in a live camera frame.

- Facial Feature Extraction: Mapping eyes, nose, cheekbones, and chin.

- Encoding: Translating these features into a digital “faceprint.”

- Matching: Comparing that print to a known face database.

- Decision: Returning a match result in milliseconds.

With advances in on-device AI, this entire pipeline can now run without internet access, which makes it faster and potentially more private, depending on the implementation.
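The Encoding, Matching, and Decision steps can be sketched as a nearest-neighbour search over faceprint vectors. This minimal Python sketch assumes an embedding model (not shown) has already turned each detected face into a fixed-length vector; the `match_face` helper, the toy three-dimensional gallery, and the 0.6 cosine-similarity threshold are hypothetical placeholders, not Meta's implementation. Real systems use embeddings with hundreds of dimensions and tune the threshold on labeled data.

```python
import numpy as np


def _unit(vectors):
    """Scale vectors to unit length so dot products equal cosine similarity."""
    v = np.asarray(vectors, dtype=float)
    norms = np.linalg.norm(v, axis=-1, keepdims=True)
    if np.any(norms == 0):
        raise ValueError("faceprint vectors must be non-zero")
    return v / norms


def match_face(probe, gallery, threshold=0.6):
    """Compare one faceprint against an enrolled gallery.

    probe: 1-D embedding of the face in the current camera frame.
    gallery: dict mapping a person's name to their enrolled embedding.
    Returns (name, score) for the best match, or (None, score) when even the
    best score falls below the threshold (an unknown face).
    """
    if not gallery:
        return None, None
    names = list(gallery)
    templates = _unit(np.stack([np.asarray(gallery[n], dtype=float) for n in names]))
    scores = templates @ _unit(probe)  # cosine similarity to each enrolled person
    best = int(np.argmax(scores))
    best_score = float(scores[best])
    if best_score >= threshold:
        return names[best], best_score
    return None, best_score


# Hypothetical enrolled faceprints.
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
print(match_face([0.9, 0.1, 0.0], gallery))  # close to Alice's faceprint
print(match_face([0.0, 0.0, 1.0], gallery))  # unlike anyone enrolled
```

Because the gallery is just local data, matching like this can run entirely on the device; whether it is private still depends on who gets enrolled and whether bystanders' faceprints are ever stored.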

Legal and Ethical Implications

The Harvard I-XRAY Hack

In 2024, two Harvard students built I-XRAY, a demo that combined Ray-Ban Meta smart glasses with publicly available face-search and people-search tools. It identified strangers in public and surfaced their:

- Full names

- Social media accounts

- Home addresses and other personal details (when publicly listed)

Though built as a research project, the demo sparked global outcry. Critics worried about misuse by governments, stalkers, or corporations.

Laws and Regulations

European Union: Under the General Data Protection Regulation (GDPR), biometric data used to uniquely identify a person is a special category of personal data. Processing it is prohibited unless a narrow exception applies, most commonly the person's explicit consent.

United States:

- Illinois (BIPA): Requires informed written consent before biometric data is collected, and lets individuals sue over violations.

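- California (CCPA, as amended by the CPRA): Treats biometric information as sensitive personal information and gives consumers the right to limit its use and to request its deletion.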

- Texas (CUBI), Washington, and other states have their own biometric privacy laws.

Failure to comply can result in fines, lawsuits, and bans. In 2021, Meta shut down Facebook's face-recognition system amid privacy concerns and regulatory pressure.

Who Can Use This Tech—and How?

For Families and Educators

- Use smart glasses for accessibility—helping kids with autism or elderly parents recognize familiar people.

- Set boundaries: teach children about consent and responsible use.

- Disable the camera when it isn't needed.

For Businesses and Developers

- Explore robot-learning approaches like EgoZero for automating repetitive tasks.

- Keep humans in the loop: always validate AI actions in physical settings.

- Follow ISO/IEC 27001 for information security management and ISO/IEC 27701 for privacy information management.

For Governments and Institutions

- Develop transparency mandates so that people always know when they're being recorded or recognized.

- Invest in open-source alternatives to Big Tech’s models.

- Ensure AI systems are auditable rather than black boxes.

Real-World Use Cases

1. Healthcare Support:

Wearable AI glasses could help Alzheimer’s patients recognize family or remember their routines.

2. Disaster Response Robots:

Send a robot trained on human videos into a collapsed building to search for survivors or retrieve supplies.

3. Retail Logistics:

Use AI-trained robot arms in Amazon-style warehouses to organize inventory without task-specific, hand-coded logic.

4. Law Enforcement (Controversial):

Use facial recognition to identify suspects, an application that raises major ethical issues and risks of racial bias.

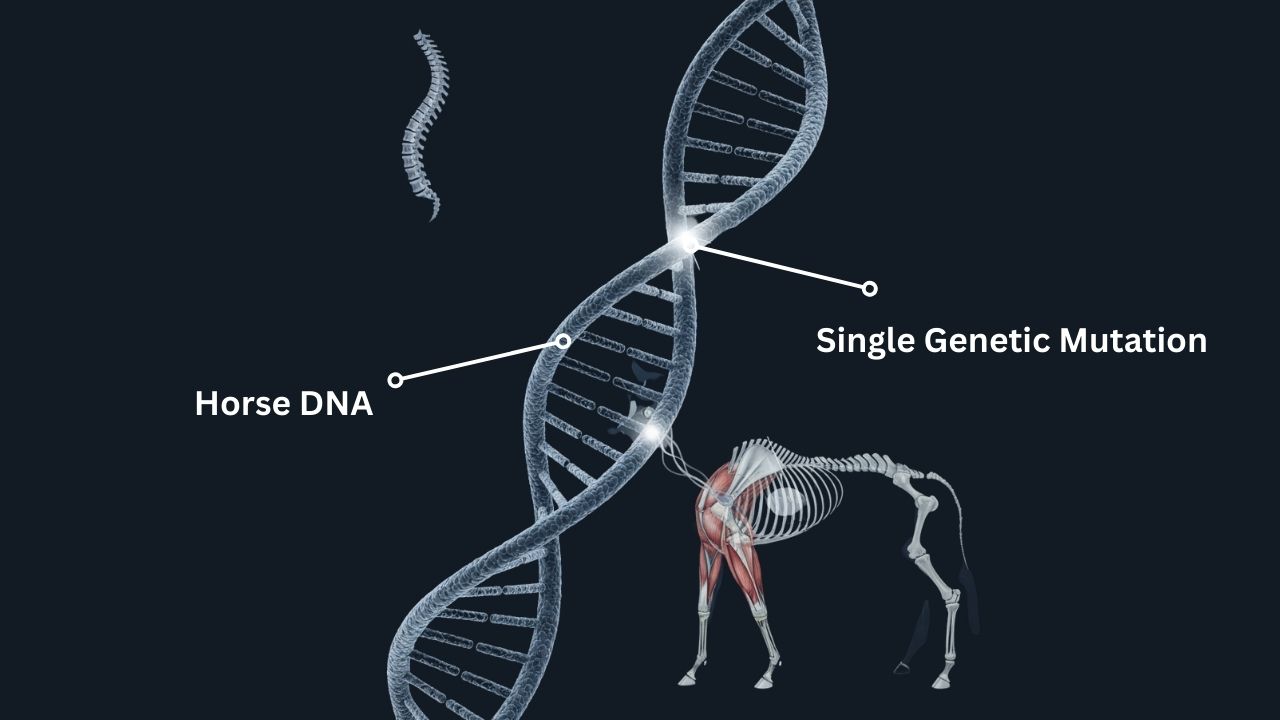


FAQs About Robots and Smart Glasses

Are facial recognition glasses already available?

Not to consumers. Meta's current glasses don't support real-time facial identification. Internal testing has been reported, but privacy laws currently stand in the way of a public release.

How accurate is the technology?

Under ideal conditions, accuracy above 95% is achievable, but poor lighting, eyewear, masks, and unusual angles can reduce reliability.
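A single accuracy figure hides two different errors: a false match (a stranger identified as someone enrolled) and a false non-match (an enrolled person not recognized). Moving the decision threshold trades one against the other. This short Python sketch computes both rates at a given threshold; the `error_rates` helper and the similarity scores are made-up illustrations, not measurements from any product.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_match_rate, false_non_match_rate) at one threshold.

    genuine_scores: similarity scores for pairs of images of the same person.
    impostor_scores: similarity scores for pairs of different people.
    A pair counts as a match when its score is >= threshold.
    """
    if not genuine_scores or not impostor_scores:
        raise ValueError("need at least one genuine and one impostor score")
    false_non_matches = sum(s < threshold for s in genuine_scores)
    false_matches = sum(s >= threshold for s in impostor_scores)
    return (false_matches / len(impostor_scores),
            false_non_matches / len(genuine_scores))


# Made-up scores for illustration only.
genuine = [0.9, 0.8, 0.55, 0.7]
impostor = [0.2, 0.65, 0.1, 0.3]
for t in (0.6, 0.75):
    fmr, fnmr = error_rates(genuine, impostor, t)
    print(f"threshold={t}: false match rate={fmr:.2f}, false non-match rate={fnmr:.2f}")
```

With these toy numbers, raising the threshold from 0.6 to 0.75 removes the false match (0.25 to 0.00) but doubles the false non-match rate (0.25 to 0.50).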

What’s the difference between EgoZero and traditional robot training?

Traditional robots are programmed for each task. EgoZero instead lets robots learn manipulation skills from human video, without task-specific programming or robot demonstrations.

Is this technology safe?

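The technology can work reliably, but its safety depends largely on how it is used: robots still need human oversight in physical settings, and privacy laws and ethical practices are essential to prevent misuse of facial recognition.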

Can I opt out of being identified in public by smart glasses?

In some jurisdictions, such as the EU and California, laws give you rights over how your biometric data is collected and used. But enforcement depends on government action and manufacturer compliance.