Artificial intelligence (AI) is no longer just a futuristic concept: it is transforming daily life and industries worldwide. From chatbots answering our questions to complex algorithms reshaping business, the technology is expanding rapidly. But behind this digital revolution lies a hidden cost: an enormous surge in electricity consumption by the data centers powering these AI systems. This surge is already contributing to higher electricity bills for millions and is reshaping how energy grids and utilities manage power.

In this article, we explore the deep connection between AI growth and electricity demand, the scale of this energy consumption, its impact on consumers, and potential paths toward a more sustainable AI-powered future. Whether you are a household consumer noticing a rise in bills, an energy professional, or simply curious about AI’s wider effects, this guide provides a clear, data-backed, and easy-to-understand overview.

The AI Boom Is Burning Through Power

| Topic | Insight |
|---|---|
| Global AI data center energy consumption (2025) | Expected to reach approximately 200 terawatt-hours (TWh), more than Belgium’s annual usage |
| U.S. data center electricity demand growth | Forecast to more than double by 2035, reaching around 78 gigawatts (GW) or about 8.6% of U.S. electricity demand |
| AI’s share of data center power consumption (2025) | Could reach nearly 50%, consuming about 23 GW, twice the power use of the entire Netherlands |
| Projected global electricity for data centers (2030) | Expected to double to 945 TWh, surpassing Japan’s current consumption, driven by AI |
| Challenges posed | Power grid strain, rising consumer electricity costs, urgent need for renewable energy and infrastructure upgrades |
| Official source for reference | International Energy Agency (IEA): https://www.iea.org/reports/energy-and-ai |

The AI boom is driving an unprecedented explosion in electricity demand, with data centers at the core of this surge. This growing appetite for power is already contributing to increased electricity bills for millions worldwide and straining grid infrastructures. Understanding the scale and nuances of AI’s energy consumption helps demystify this complex issue.

The good news is that industry awareness and technology advancements are pointing toward sustainability. Enhancing efficiency, expanding renewable energy, and modernizing power grids will be key to balancing AI’s enormous benefits with responsible energy use, ensuring this digital revolution can continue without compromising our planet or wallets.

Understanding the AI-Power Connection

At the heart of AI’s power consumption are data centers: massive complexes filled with racks of servers that carry out intense computations. These centers enable AI technologies by storing, processing, and analyzing vast amounts of data. Unlike typical computing tasks, AI applications demand extraordinary amounts of processing power, frequently relying on specialized high-performance processors such as GPUs (graphics processing units) and CPUs optimized for machine learning.

But it’s not just the computing chips themselves. Running these machines nonstop generates enormous heat, which requires energy-intensive cooling systems to keep everything within safe operating temperatures. As a result, cooling systems can consume nearly as much electricity as the servers themselves.
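
To make the cooling overhead concrete, here is a minimal Python sketch. It uses power usage effectiveness (PUE), a standard industry ratio of total facility power to IT equipment power. The 100 MW server load and the 0.9 cooling ratio are hypothetical values chosen only to illustrate the "nearly as much as the servers" case; they are not measurements from any real facility.

```python
# Hypothetical figures for illustration only; not data from any real facility.

def facility_power_mw(it_load_mw: float, cooling_ratio: float,
                      other_overhead_ratio: float = 0.0) -> float:
    """Total facility draw: IT load plus cooling and other overhead,
    each expressed as a fraction of the IT load."""
    return it_load_mw * (1.0 + cooling_ratio + other_overhead_ratio)


def power_usage_effectiveness(it_load_mw: float, total_mw: float) -> float:
    """PUE = total facility power / IT equipment power (1.0 means no overhead)."""
    return total_mw / it_load_mw


it_load = 100.0      # assumed server (IT) load in MW
cooling_ratio = 0.9  # assumed: cooling draws 90% as much power as the servers

total = facility_power_mw(it_load, cooling_ratio)
pue = power_usage_effectiveness(it_load, total)
print(f"Total draw: {total:.1f} MW, PUE: {pue:.2f}")
```

Under these assumptions, every megawatt of computing brings almost another megawatt of cooling with it, which is why cooling efficiency matters so much to a facility's total demand.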

Why Is AI Energy Consumption Exploding?

Several factors drive this steep rise in AI-related electricity use:

- Rapid Growth of AI Applications: From natural language processing and image recognition to generative AI models powering chatbots, AI’s reach is exploding across sectors, increasing demand for computational power.

- Increasing Model Complexity and Size: Cutting-edge AI models like GPT-4 involve training phases lasting weeks or months, using megawatts of power continuously.

- Constant Real-Time AI Use: Many AI services operate 24/7 to deliver instant responses, recommendations, and analysis.

- Construction of New AI Data Centers: Companies worldwide build new data centers designed specifically to support AI workloads, leading to dramatically higher electricity demand in their locales.

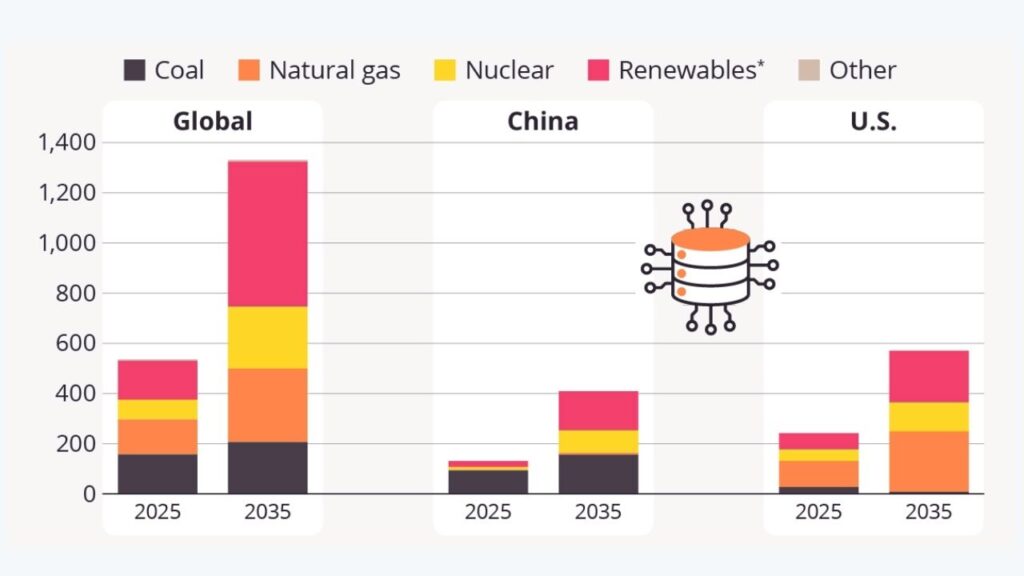

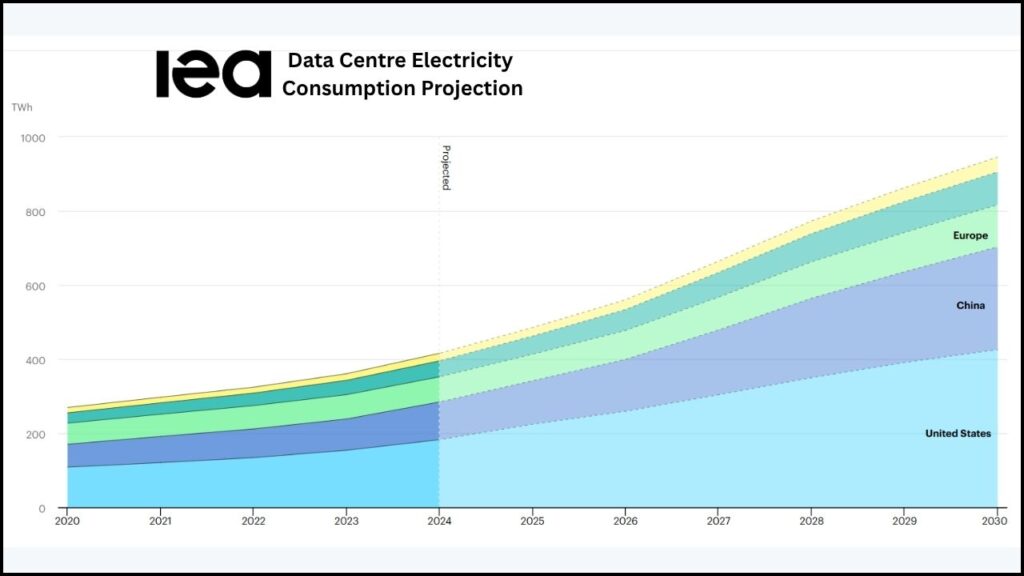

The International Energy Agency (IEA) projects that electricity demand from data centers worldwide will more than double by 2030 to nearly 945 TWh, a quantity greater than Japan’s entire electricity consumption today. AI will be the primary driver, with AI-optimized data centers expanding their electricity use more than fourfold during the same period. In the United States, data centers could account for almost half of the country’s electricity demand growth by 2030, overtaking even the manufacturing sector’s energy use.

The Scale of AI’s Energy Footprint Today

As of 2025:

- AI-related systems consume around 200 TWh globally, outpacing the energy use of many countries.

- AI could make up nearly half of all data center electricity consumption, drawing about 23 gigawatts (GW), roughly twice the power use of the Netherlands.

- Power demand from AI data centers is growing roughly four times faster than new electricity is being added to grids, raising sustainability and infrastructure concerns.
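
The gigawatt and terawatt-hour figures above measure different things: GW is a rate of power draw, while TWh is energy used over time. The short Python sketch below converts one into the other, assuming the load runs continuously all year. The `load_factor` parameter is an illustrative addition, not something taken from the cited projections.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours; leap years ignored


def gw_to_twh_per_year(avg_power_gw: float, load_factor: float = 1.0) -> float:
    """Convert an average power draw in GW to annual energy in TWh.

    load_factor is the assumed fraction of the year the load actually runs
    at that power (1.0 means around the clock).
    """
    gwh_per_year = avg_power_gw * load_factor * HOURS_PER_YEAR
    return gwh_per_year / 1000.0  # 1 TWh = 1,000 GWh


print(round(gw_to_twh_per_year(23), 1))  # 201.5
```

A steady 23 GW draw therefore works out to about 200 TWh per year, which is how the two headline numbers relate to each other.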

How Does This Affect Your Electricity Bill?

Most consumers may not realize it, but this surge in AI data center power demand has cascading effects on everyday electricity bills:

- Higher Electricity Rates: Utilities must invest billions in upgrading power grids, building new plants, and managing energy supply volatility. These costs often get passed down to residential and business customers.

- Infrastructure Upgrades: New investments to support the high availability and reliability required by AI data centers drive up overall system costs.

- Localized Impact: Regions with many data centers, such as parts of Pennsylvania, have seen residential utility bills climb by up to 20% in recent years due to the higher power demand.

For example, cities like Trenton, Philadelphia, Pittsburgh, and Columbus are experiencing noticeable electricity price hikes linked to the expansion of local AI-focused data centers, impacting millions of residents.
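
To see what an increase of that size means for a household, the Python sketch below applies a percentage rise to a monthly bill. The 150-dollar baseline is a hypothetical figure chosen for illustration, and the 20% rise is the upper end of the range mentioned above, not a forecast for any particular utility.

```python
def bill_after_increase(monthly_bill: float, pct_increase: float) -> float:
    """Return the new monthly bill after a percentage increase."""
    return monthly_bill * (1 + pct_increase / 100)


baseline = 150.0  # hypothetical monthly bill in dollars
new_bill = bill_after_increase(baseline, 20)

extra_per_year = (new_bill - baseline) * 12
print(f"New bill: ${new_bill:.2f}/month, about ${extra_per_year:.0f} more per year")
```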

Breaking Down the Energy Demand of AI Data Centers: A Step-By-Step Guide

1. Data Center Components and Their Power Needs

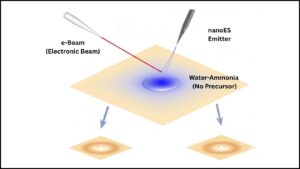

- Servers and GPUs: These are the core computational units. AI training and inference require powerful processors that consume massive amounts of electricity.

- Cooling Infrastructure: Essential for maintaining temperatures and preventing equipment failures. Cooling systems can be nearly as energy-intensive as the servers they protect.

- Networking and Storage: While less intensive than compute and cooling, these components also contribute to total electricity consumption.

2. Estimating Power Consumption

- AI servers can consume up to 30 times more power than traditional servers executing standard computing tasks.

- By 2026, global energy consumption by AI data centers is expected to reach roughly 90 TWh, nearly a tenfold increase compared to just a few years earlier.

- In early 2024 alone, AI data centers added about 2 GW of power demand, showing accelerating trends.

3. Infrastructure Challenges

The energy sector faces growing pains as AI surges:

- Reliability Needs: Data centers require 24/7 continuous power with minimal disruption.

- Carbon-Neutral Goals: Many companies strive to use renewable energy, but only about half of current data center electricity is from clean sources.

- Grid Stability: Concentrated data center clusters push local grids to limits, requiring smart management and upgrades.

4. Future Projections

- By 2030, data centers globally might consume over 1,000 TWh; failure to improve energy efficiency could drive this upwards of 1,300 TWh.

- U.S. data center demand may more than double by 2035, reaching around 78 GW.

- AI workloads will increasingly represent over 40% of total data center power consumption.
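
Projections phrased as "doubling by a given year" can be turned into an implied yearly growth rate. The Python sketch below does this with the standard compound-growth formula. The 10-year and 5-year windows are assumptions made for illustration, since the projections above do not state an exact base year.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


# Doubling over an assumed 10-year window (for example, 2025 to 2035)
ten_year = implied_annual_growth(1.0, 2.0, 10)

# Doubling over an assumed 5-year window (for example, 2025 to 2030)
five_year = implied_annual_growth(1.0, 2.0, 5)

print(f"Doubling in 10 years: {ten_year:.1%} per year")
print(f"Doubling in 5 years:  {five_year:.1%} per year")
```

The shorter the window, the steeper the yearly growth a doubling implies, so the pace grids must keep up with depends heavily on the horizon a projection uses.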

What Can Be Done? Practical Steps Toward Sustainable AI Energy Use

Addressing AI-related electricity surges involves collaborative efforts among tech companies, energy providers, policymakers, and consumers:

- Energy Efficiency Improvements: Developing more efficient AI models and better hardware can lower power needs per computation.

- Renewable Energy Commitments: Many leading tech firms already purchase or generate clean energy for their data centers.

- Grid Modernization and Storage: Upgrading infrastructure for higher loads and integrating energy storage solutions improve stability.

- Distributed AI Computing: Edge computing and decentralized services can reduce long-distance data transfers, optimizing energy use.

- Consumer Awareness and Conservation: Simple energy-saving habits at home and businesses contribute to overall demand control.

FAQs About The AI Boom Is Burning Through Power

Q1: Why does AI use so much electricity?

AI requires continuous, high-powered processing for model training and real-time operations. This entails running energy-intensive processors and cooling systems non-stop.

Q2: Are some data centers more power-hungry than others?

Yes. AI-focused data centers consume significantly more electricity than traditional ones due to intensive GPU and CPU operations and increased cooling needs.

Q3: When will AI dominate data center energy use?

Projections show AI could account for nearly half of data center power consumption by the end of 2025, with this share growing as AI adoption expands.

Q4: Can data centers run entirely on renewable energy?

While many aim for 100% renewable usage, currently about half of data center electricity comes from renewable sources, with scaling challenges ongoing.

Q5: How does this impact everyday consumers?

The increased electricity demand translates into higher utility rates and bills due to the costs of infrastructure upgrades and power supply management.