Vision-Language AI Models are making headlines in 2025 for a remarkable breakthrough: they can now learn spatial skills, like understanding where objects are in a room or how far apart things are, without needing explicit 3D data. This leap forward is transforming fields from robotics to education, making AI smarter, more flexible, and more accessible than ever before.

Let’s dive into how this works, why it matters, and what it means for both beginners and professionals in the world of artificial intelligence.

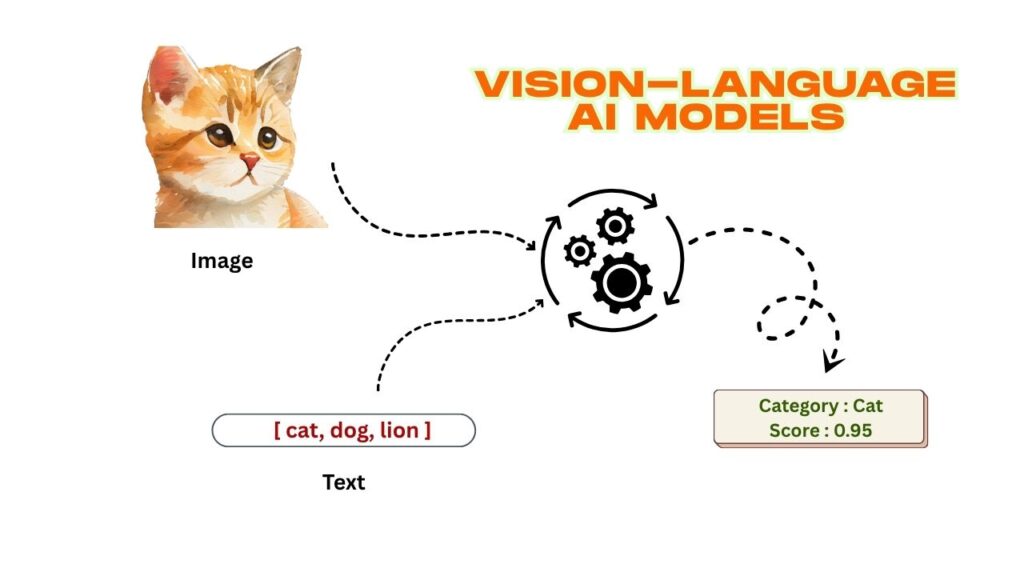

Vision-Language AI Models

| Feature/Fact | Details |
|---|---|
| What’s new? | Vision-Language Models (VLMs) now learn spatial reasoning without 3D data |
| How? | Use of synthetic datasets, plugins, and clever prompting strategies |
| Performance | Up to 49% improvement on key spatial reasoning benchmarks |
| Applications | Robotics, navigation, AR/VR, education, accessibility |
| Career Impact | Opens new roles in AI, robotics, data science, and education |
| Official Resource | arXiv.org |

The ability for Vision-Language AI Models to learn spatial skills without explicit 3D data is a game-changer. By using synthetic datasets, smart plugins, and innovative prompting, these models are bridging the gap between human and machine spatial understanding. The impact is already being felt in robotics, education, accessibility, and beyond. As research continues, expect even smarter, more adaptable AI that can “see” and “understand” the world almost like we do.

What Are Vision-Language Models and Why Do Spatial Skills Matter?

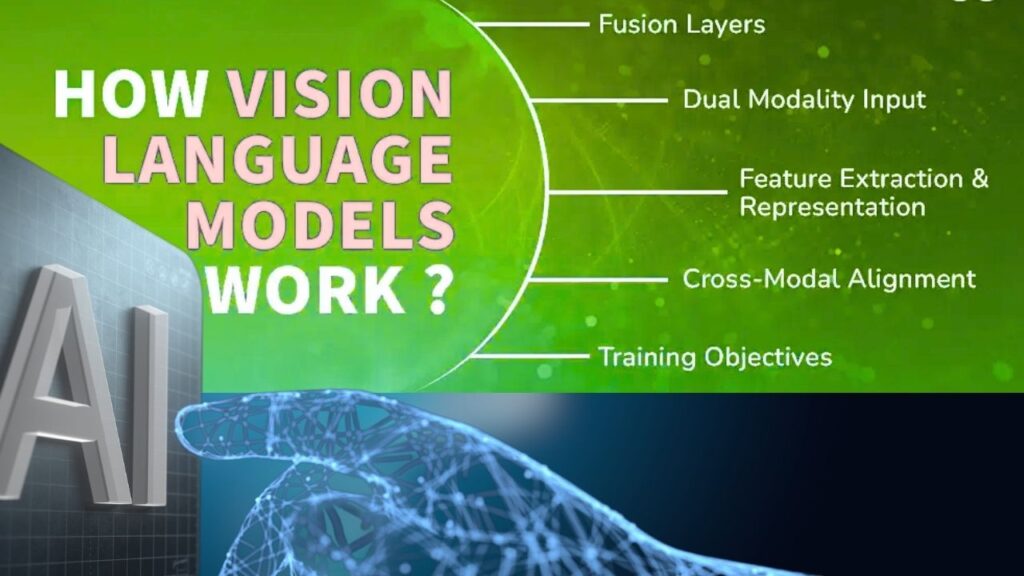

Vision-Language Models (VLMs) are a type of artificial intelligence that can both “see” (process images or video) and “read” (understand text). Imagine a robot that can look at a photo and answer questions about it, or an app that can describe what’s happening in a video. These models are the backbone of many modern AI systems.

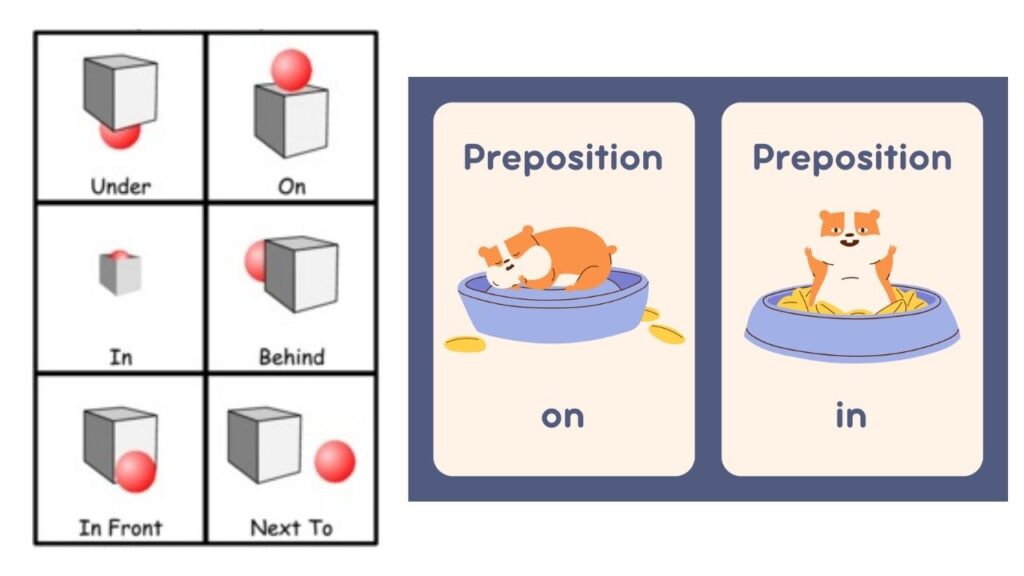

Spatial skills are the abilities that help us understand where things are, how far apart they are, and how they relate to each other in space. For humans, these skills are second nature. For AI, they’ve been a big challenge—especially without detailed 3D maps or special sensors.

The Old Way: Why Did AI Need 3D Data?

Until recently, teaching AI about space and position required explicit 3D data. That means giving the AI detailed maps, point clouds, or depth information, like what you get from a laser scanner or a depth camera. This approach works, but it’s expensive, slow, and not always practical.

- 3D data is hard to get: You need special equipment, lots of storage, and hours of processing.

- Not scalable: You can’t easily collect 3D data for every possible environment or scenario.

- Limited flexibility: AI trained this way struggles to generalize to new places or tasks.

The Breakthrough: Learning Spatial Skills from 2D Data

Now, thanks to new research, VLMs can learn spatial reasoning using only 2D images, videos, and carefully designed synthetic data and prompts. Here’s how:

1. Synthetic Datasets Focused on Spatial Reasoning

Teams have created massive synthetic datasets, with over 3.4 million question-answer pairs, focused specifically on spatial relationships. These datasets use detailed image descriptions and clever generation techniques to “teach” models about spatial concepts, even when real-world data is lacking.

Example:

A synthetic question might be, “Is the red ball to the left or right of the blue box?” The AI learns to answer using only the image and text, no 3D map required.
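To make the idea concrete, here is a minimal Python sketch of how one such question-answer pair could be generated from ordinary 2D bounding-box annotations. It assumes each object already has a labeled box in pixel coordinates; the `Box` class, the `left_right_question` function, and the 10-pixel ambiguity threshold are hypothetical illustrations, not the method of any specific dataset.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Box:
    """A labeled 2D bounding box in pixel coordinates (origin at top-left)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def center_x(self) -> float:
        return (self.x_min + self.x_max) / 2


def left_right_question(a: Box, b: Box, min_gap: float = 10.0) -> tuple[str, str] | None:
    """Build one synthetic question-answer pair about horizontal position.

    Only 2D box centers are used; no depth or 3D data is needed. "Left" and
    "right" are from the camera's viewpoint. Pairs whose centers are closer
    than `min_gap` pixels (a hypothetical threshold) are skipped as ambiguous.
    """
    gap = b.center_x - a.center_x
    if abs(gap) < min_gap:
        return None
    question = f"Is the {a.label} to the left or right of the {b.label}?"
    answer = "left" if gap > 0 else "right"
    return question, answer


ball = Box("red ball", 40, 200, 90, 250)
crate = Box("blue box", 300, 180, 420, 300)
print(left_right_question(ball, crate))
# ('Is the red ball to the left or right of the blue box?', 'left')
```

Left and right here follow the camera's point of view; a real generator would also have to deal with other reference frames and with near-ties, which the `min_gap` check only crudely approximates.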

2. Smart Plugins and Prompting Strategies

Other models use plugin modules that add depth information or regional prompts to existing VLMs. This helps the AI focus on where things are, not just what they are.

Example:

A plugin might highlight a specific area in an image and ask, “What is near this spot?”—helping the AI learn about proximity and direction.
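A rough sketch of how the answer to a regional prompt like this could be derived from 2D annotations, assuming object box centers are already known. The names `Obj`, `near_spot`, and `region_prompt`, the `<region ...>` text format, and the pixel radius are all hypothetical; real plugins encode regions in their own model-specific ways.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Obj:
    label: str
    cx: float  # box center x, in pixels
    cy: float  # box center y, in pixels


def near_spot(spot: tuple[float, float], objects: list[Obj], radius: float) -> list[str]:
    """Labels of objects whose centers lie within `radius` pixels of the spot,
    nearest first. This is distance in the image plane, not metric 3D distance."""
    sx, sy = spot
    scored = [(math.hypot(o.cx - sx, o.cy - sy), o.label) for o in objects]
    return [label for dist, label in sorted(scored) if dist <= radius]


def region_prompt(spot: tuple[float, float]) -> str:
    # Hypothetical format: the highlighted spot is given to the model as
    # explicit coordinates in the text that accompanies the image.
    x, y = spot
    return f"<region x={x:.0f} y={y:.0f}> What is near this spot?"


objects = [Obj("lamp", 110, 100), Obj("sofa", 150, 130), Obj("door", 600, 50)]
print(region_prompt((100, 100)))               # <region x=100 y=100> What is near this spot?
print(near_spot((100, 100), objects, radius=80))  # ['lamp', 'sofa']
```

Pairing the prompt with the computed answer gives one training example that rewards attention to where things are rather than only what they are.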

3. Intrinsic Spatial Reasoning

Some frameworks boost spatial reasoning by simply rephrasing the AI’s instructions. For example, before asking the AI to pick up an object, it first asks, “In which direction is the object relative to the robot?” This simple trick helps the AI pay attention to spatial details and improves its performance—without any extra training data or complex models.
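In code, this rephrasing amounts to chaining two queries. The sketch below assumes a hypothetical `ask` callable that sends a text prompt (together with the current camera image) to whatever VLM you use and returns its reply; the prompt wording is illustrative and not taken from any particular framework.

```python
from typing import Callable


def pick_up_with_spatial_priming(obj: str, ask: Callable[[str], str]) -> str:
    """Two-step prompting: ask about direction first, then ask for the action.

    `ask` is a hypothetical stand-in for a VLM call; no training or extra
    model components are involved, only a change in how the task is phrased.
    """
    # Step 1: make the model state where the object is before acting.
    direction = ask(f"In which direction is the {obj} relative to the robot?")
    # Step 2: feed that spatial answer back in with the original instruction.
    return ask(
        f"The {obj} is {direction} of the robot. "
        f"Pick up the {obj}. What action should the robot take?"
    )


# Stub model for illustration: returns canned replies in order.
replies = iter(["to the left", "turn left, then grasp the mug"])
print(pick_up_with_spatial_priming("mug", lambda prompt: next(replies)))
# turn left, then grasp the mug
```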

How Well Does It Work? The Data and Results

- Performance Gains: Models trained on synthetic spatial datasets saw up to a 49% improvement on spatial reasoning benchmarks compared to older models.

- Generalization: These new models appear to learn spatial relationships rather than memorize answers, allowing them to perform well in both simulated and real-world environments.

- Benchmarks: New tests include indoor, outdoor, and simulated scenes, making sure the AI’s skills are truly robust.

- Remaining Challenges: Despite big improvements, even the best models sometimes struggle with complex or ambiguous spatial questions. On average, accuracy across top models is still close to random chance for the hardest spatial tasks, showing there’s room for growth.
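Because “up to 49%” can be read either as a relative gain or as an absolute gain in percentage points, it helps to keep the arithmetic straight and to compare scores against random guessing. The accuracies below are made-up numbers for illustration only, not results from any benchmark.

```python
def absolute_gain(old_acc: float, new_acc: float) -> float:
    """Accuracy difference in percentage points (inputs are fractions, 0 to 1)."""
    return (new_acc - old_acc) * 100


def relative_gain(old_acc: float, new_acc: float) -> float:
    """Improvement as a fraction of the old score; 0.49 means 49% better."""
    return (new_acc - old_acc) / old_acc


def chance_accuracy(num_choices: int) -> float:
    """Expected accuracy of uniform random guessing on a multiple-choice question."""
    return 1.0 / num_choices


# Hypothetical scores on a 4-way multiple-choice spatial benchmark.
old, new = 0.30, 0.447
print(f"relative gain: {relative_gain(old, new):.0%}")         # relative gain: 49%
print(f"absolute gain: {absolute_gain(old, new):.1f} points")  # absolute gain: 14.7 points
print(f"chance level: {chance_accuracy(4):.0%}")               # chance level: 25%
```

With these numbers, a 49% relative gain is only about 15 percentage points, and the improved model sits less than 20 points above the 25% guessing baseline. On binary left/right questions the chance level is 50%, so the same raw accuracy would mean even less.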

Why Does This Matter? Real-World Applications

Robotics:

Robots can now navigate, pick up objects, and interact with their environment more naturally—without needing expensive 3D sensors.

Navigation and Mapping:

AI-powered navigation systems (think self-driving cars or delivery drones) can better understand their surroundings using only cameras and basic maps.

Education:

Learning tools can help students visualize and understand spatial concepts, making subjects like geometry and geography more interactive.

Accessibility:

Apps for the visually impaired can describe spatial layouts (“The chair is to your left”) using only a smartphone camera.

Augmented and Virtual Reality:

Games and training simulations can create more realistic, interactive experiences without needing detailed 3D scans of every environment.

Step-by-Step Guide: How Vision-Language Models Learn Spatial Skills

Step 1: Collect or Generate Data

- Use images, videos, and detailed text descriptions.

- Generate synthetic questions about spatial relationships (e.g., “Is the cat under the table?”).
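As a sketch of Step 1, the snippet below turns two labeled boxes into a yes/no “under” question and serializes it as one JSON Lines record. The `is_under` rule is a deliberately crude 2D heuristic assumed for illustration, and the record fields (`image`, `question`, `answer`) are a hypothetical schema, not a standard format.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass
class Box:
    """A labeled 2D bounding box in pixel coordinates (y grows downward)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def is_under(a: Box, b: Box) -> bool:
    """Crude heuristic: a is 'under' b if the boxes overlap horizontally
    and a's vertical center is lower in the image than b's."""
    overlaps_x = a.x_min < b.x_max and b.x_min < a.x_max
    a_cy = (a.y_min + a.y_max) / 2
    b_cy = (b.y_min + b.y_max) / 2
    return overlaps_x and a_cy > b_cy


def make_record(image_id: str, a: Box, b: Box) -> str:
    """One JSON Lines record pairing an image with a yes/no spatial question."""
    record = {
        "image": image_id,
        "question": f"Is the {a.label} under the {b.label}?",
        "answer": "yes" if is_under(a, b) else "no",
    }
    return json.dumps(record)


cat = Box("cat", 120, 260, 200, 330)
table = Box("table", 80, 150, 400, 340)
print(make_record("img_0001.jpg", cat, table))
# {"image": "img_0001.jpg", "question": "Is the cat under the table?", "answer": "yes"}
```

A purely 2D rule like this cannot tell “under” from “in front of and lower in the frame”, so in practice it would need help from other cues, such as the detailed image descriptions these datasets rely on, or from filtering.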

Step 2: Train the Model

- Feed the model pairs of images and questions.

- Use plugins or prompts to focus the model’s attention on spatial details.

Step 3: Test and Benchmark

- Evaluate the model using spatial reasoning benchmarks.

- Compare performance on tasks like navigation, object manipulation, and visual question answering.
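For Step 3, a minimal evaluation sketch: score predictions per question category and report how far each category sits above random guessing, since a single headline average can hide categories stuck near chance. The category names, the exact-match scoring, and the `(category, predicted, gold)` input format are assumptions for illustration, and the sample results are invented.

```python
from __future__ import annotations

from collections import defaultdict


def accuracy_by_category(results: list[tuple[str, str, str]]) -> dict[str, float]:
    """results holds (category, predicted, gold) triples; returns accuracy per category."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for category, predicted, gold in results:
        total[category] += 1
        # Case-insensitive exact match; free-form answers would need fuzzier scoring.
        if predicted.strip().lower() == gold.strip().lower():
            correct[category] += 1
    return {c: correct[c] / total[c] for c in total}


def above_chance(acc: dict[str, float], num_choices: dict[str, int]) -> dict[str, float]:
    """Accuracy minus the random-guess baseline (1 / num_choices) for each category."""
    return {c: acc[c] - 1 / num_choices[c] for c in acc}


results = [
    ("left_right", "left", "left"),
    ("left_right", "right", "left"),
    ("left_right", "left", "left"),
    ("left_right", "right", "right"),
    ("which_closer", "chair", "lamp"),
    ("which_closer", "sofa", "sofa"),
]
acc = accuracy_by_category(results)
print(acc)  # {'left_right': 0.75, 'which_closer': 0.5}
print(above_chance(acc, {"left_right": 2, "which_closer": 4}))
# {'left_right': 0.25, 'which_closer': 0.25}
```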

Step 4: Deploy in Real-World Applications

- Integrate the model into robots, apps, or educational tools.

- Continue to monitor and improve performance as new data becomes available.

Practical Advice for Professionals

- Stay Current: Follow the latest research on platforms like arXiv.org and top AI conferences.

- Experiment with Plugins: Try adding spatial reasoning plugins to your existing VLMs to boost performance without retraining from scratch.

- Leverage Synthetic Data: Use or create synthetic datasets focused on spatial reasoning to fill gaps in your training data.

- Benchmark Your Models: Regularly test your models on spatial reasoning benchmarks to identify strengths and weaknesses.

- Think Beyond 3D: Remember that you don’t always need expensive 3D sensors; clever use of 2D data and language can go a long way.

FAQs About Vision-Language AI Models

Q: Can these models really replace 3D sensors in robotics?

A: For some tasks, yes. 3D sensors are still best for precise measurements, but modern VLMs can handle many navigation and object-interaction tasks using only cameras and smart data.

Q: Are these models available for public use?

A: Yes! Many models, datasets, and benchmarks are open-source and available on popular AI and code-sharing platforms.

Q: How accurate are these models?

A: Top models show up to a 49% improvement on spatial reasoning benchmarks, but complex spatial questions remain challenging: on the hardest tasks, average accuracy is still close to random chance.

Q: What careers benefit from this technology?

A: AI researchers, robotics engineers, data scientists, educators, and accessibility specialists all stand to gain from advances in spatially-aware AI.

Q: Where can I learn more?

A: Explore the latest news and resources on AI research forums and reputable technology news outlets.