Cybersecurity researchers are warning that WormGPT is back, and that its new artificial intelligence (AI) variants are more dangerous than before. In 2025, cybercriminals have reworked WormGPT from a niche hacker tool into a powerful, easily accessible weapon that threatens businesses and everyday people alike. What is WormGPT, why has its resurgence caused such concern across the tech community, and, most importantly, what can be done to defend against this next wave of AI-driven cyber threats?

WormGPT Is Back

| Key Point | Detail & Data |
|---|---|
| WormGPT Variants in 2025 | Notable: xzin0vich-WormGPT (Mixtral-powered), keanu-WormGPT (Grok-powered) |
| Capabilities | Unlimited text and code generation, persistent contextual memory, multilingual, user-tailored output |
| User Base | Underground forums & Telegram groups estimated 7,500+ active members; low-cost access (from ₹10,000/year) |
| Some Features | Secure channels, code import/export, crypto payments, no user logs, persistent chat memory |
| Top Risks | Evasive phishing, rapid-custom malware, mass-scale fraud, business email compromise, social engineering |
| Professional Security Advice | Focus on behavioral threat analytics; regular user education; robust authentication protocols |
| Official Resource | Cato Networks: WormGPT AI Variants Threat Report |

WormGPT’s rebirth in 2025 marks a dangerous new era for digital security. By blending AI’s rapid progress with the underworld’s creativity, threat actors now possess unprecedented power. What used to be the realm of skilled hackers is now open to anyone with a small budget, a cryptocurrency wallet, and an internet connection. Your best defense isn’t panic—it’s preparedness: embrace new security technologies, train your people well, and keep learning as the threat landscape evolves.

What Exactly Is WormGPT — And Why Is It Threatening?

WormGPT began in 2023 as a “blackhat” AI: a chatbot reportedly built on the open-source GPT-J language model and marketed as an alternative to mainstream assistants like ChatGPT, but with no safety features or ethical boundaries. This allowed cybercriminals to harness AI for creating highly convincing phishing emails, malicious code, business scams, and more.

In 2025, the game has changed dramatically. Cybercriminals now hijack advanced, mainstream language models and retool them into WormGPT variants that are smarter, faster, and more adaptable. The most dangerous examples—xzin0vich-WormGPT and keanu-WormGPT—use top-tier AI engines (Mixtral and Grok, respectively), giving attackers:

- Full control over the content, language, and tone of their attacks

- Persistent “memory” of ongoing chats for complex scams

- Customization for multiple targets across languages and regions

- Stealth and privacy: No logs, crypto-only payment, secure access channels

Why is this so worrisome? Because these new WormGPTs erase the line between professional hackers and ordinary people—making sophisticated cyberattacks accessible to almost anyone, at very low cost.

Deep Dive: How WormGPT Variants Operate (Step by Step)

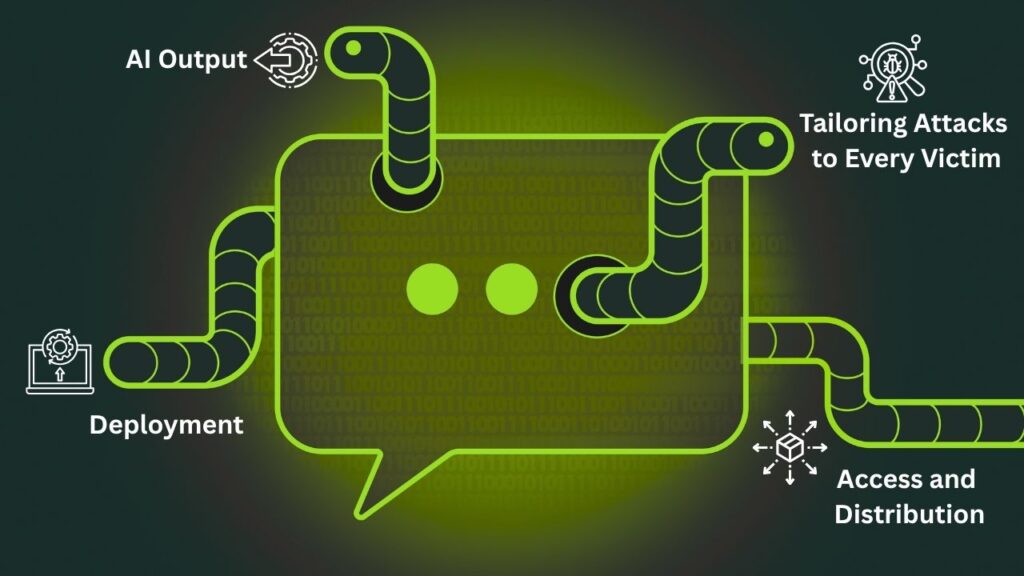

1. Access & Distribution: Fast, Easy, and Anonymous

- Where are they sold? On secret Telegram channels, darknet forums, and invite-only chat groups.

- Who can buy them? Anyone willing to pay (often via cryptocurrency)—no coding expertise needed.

- How much do they cost? Subscriptions start under ₹10,000/year, with lifetime deals under $300.

2. Tailoring Attacks to Every Victim

- Attackers input specific goals: “Write a phishing email to a tech CEO,” “Build ransomware,” “Impersonate a school principal.”

- Zero ethical limits: These chatbots will generate anything, no matter how harmful.

- Language and style: Choices range from professional to friendly to urgent, and nearly every major language is offered.

- Context-aware: The AI remembers the entire chat session, allowing step-by-step planning (e.g., for multi-stage social engineering attacks).

3. AI Output: Fast, Flawless, and Unique

- Polymorphic text and code: Each generation is new—no two attacks look the same, defeating pattern-matching defenses.

- Fully formed scripts and emails: Even beginners get high-quality results, ready to copy and use immediately.

- Export/import tools: Advanced users can automate workflows or generate bulk content for massive campaigns.

4. Deployment: Global and Scalable

- Mass attacks: In minutes, thousands of scam emails or phishing sites can be launched.

- Ecosystem support: Private “help desk” groups offer guidance, templates, or updates, making attacks even easier for newcomers.

Why Are Today’s WormGPT Variants So Dangerous?

Super-Realistic Phishing & Social Engineering

The latest WormGPTs use advanced AI to generate messages that not only sound human but also fit the context closely, mimicking companies, colleagues, or even family members with ease. Grammar mistakes and awkward wording, once the giveaway of a scam, have largely vanished.

Example:

A finance team receives an urgent message, seemingly from their CEO, with flawless details about an “emergency payment.” Even experienced staff can’t easily tell it’s fake if they only check the sender’s display name.

Automatic, Adaptive Malware Generation

Traditional virus detection tools rely on “fingerprints”—known patterns. WormGPTs create one-of-a-kind malware every time. This “polymorphism” means even up-to-date antivirus tools can miss new threats, at least initially.

Example:

WormGPT is instructed to generate ransomware. The code is unique, written differently every time, and easily customized for different operating systems or ransom messages.
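
To see why exact-match fingerprints are brittle, here is a minimal sketch that uses harmless text in place of a real sample. The `known_bad` set and the `is_flagged` helper are hypothetical names chosen for illustration; real antivirus engines use much richer signatures and heuristics than a single hash.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest, standing in for a file 'fingerprint'."""
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder content standing in for a previously catalogued sample.
original = b"print('hello')\n"
# Functionally equivalent variant: only a comment line was added.
variant = b"# reworded\nprint('hello')\n"

# Hypothetical signature database holding only the original's fingerprint.
known_bad = {fingerprint(original)}


def is_flagged(data: bytes) -> bool:
    """Exact-match lookup: flags only byte-identical content."""
    return fingerprint(data) in known_bad


print(is_flagged(original))  # True
print(is_flagged(variant))   # False
```

Any change to the bytes produces a different digest, so a defense that relies only on known fingerprints misses every reworded variant. This is the gap that behavior-based detection is meant to close.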

Lowering the Technical Barriers

In the past, orchestrating major cyberattacks required extensive programming knowledge. Now, almost anyone with money and basic internet skills can become a threat actor, simply by prompting a chatbot.

Speed, Scale, and Anonymity

Mass attacks that once took weeks to coordinate now happen in minutes. No logs and cryptocurrency-only payments mean law enforcement faces immense challenges tracking these new cybercriminals.

Professional Analysis: Why the Cybersecurity Community Is Alarmed

Threat researchers highlight four main concerns:

- The accessibility of WormGPT variants greatly enlarges the pool of potential attackers, because no technical skill is required.

- AI-driven automation enables attacks at a scale that was previously impractical: personalized, region-specific, and fluent in the target’s native language.

- Evasion is built in: unique outputs can slip past traditional spam filters and antivirus tools, weakening signature-based protection.

- Fraud and scam campaigns, both individual and large-scale (like business email compromise), are more convincing and potentially more profitable than before.

The Verdict:

The rise of AI kits like WormGPT isn’t just an evolution; it’s a revolution in cybercrime.

How to Defend Against WormGPT and AI-Driven Threats: Authoritative Guidance

1. Prioritize Behavioral Threat Detection

- Invest in modern threat detection systems that flag unusual activity (such as atypical login patterns, rapid mail sending, or odd internal communications), rather than relying only on blocked keywords or signatures.
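
As one illustration of a behavioral signal, the sketch below flags an account that sends unusually many messages inside a sliding time window. The `SendRateMonitor` class, its thresholds, and the example address are assumptions made for this example, not part of any specific product, and the code assumes timestamps arrive in non-decreasing order.

```python
from collections import defaultdict, deque


class SendRateMonitor:
    """Flag accounts that send more than `max_messages` within `window_seconds`."""

    def __init__(self, max_messages: int = 20, window_seconds: float = 60.0):
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        # account -> timestamps of sends still inside the window
        self._events = defaultdict(deque)

    def record(self, account: str, timestamp: float) -> bool:
        """Record one send; return True if the account now exceeds the threshold."""
        events = self._events[account]
        events.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_messages


monitor = SendRateMonitor(max_messages=3, window_seconds=10.0)
alerts = [monitor.record("alice@example.com", t) for t in (0, 1, 2, 3, 30)]
print(alerts)  # [False, False, False, True, False]
```

The fourth send trips the alert because four messages fall within ten seconds. The fifth does not, since the earlier sends have aged out of the window. A production system would combine many such signals and tune thresholds per user or role.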

2. Upgrade Email Authentication

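- Deploy the email authentication standards SPF, DKIM, and DMARC. SPF checks that the sending server is authorized for the domain, DKIM verifies a cryptographic signature on the message, and DMARC requires that at least one of them passes and aligns with the visible From domain, while telling receivers how to handle failures. Together they help your organization spot impersonators before harm is done.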
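
Receiving mail servers typically record the outcome of these checks in an `Authentication-Results` header. The sketch below reads those verdicts with Python's standard `email` package. The function names, the sample message, and the simple regular expression are assumptions for illustration. The header should be trusted only when your own mail gateway added it, because a sender can forge headers of their own.

```python
import re
from email import message_from_string

# Matches verdicts such as "spf=pass", "dkim=fail", "dmarc=none".
RESULT_RE = re.compile(r"\b(spf|dkim|dmarc)=([a-z]+)", re.IGNORECASE)


def auth_results(raw_message: str) -> dict:
    """Collect the first SPF, DKIM, and DMARC verdict found in the headers."""
    msg = message_from_string(raw_message)
    results = {}
    for header in msg.get_all("Authentication-Results", []):
        for method, verdict in RESULT_RE.findall(header):
            results.setdefault(method.lower(), verdict.lower())
    return results


def passes_authentication(raw_message: str) -> bool:
    """Treat a message as authenticated only if DMARC passed."""
    return auth_results(raw_message).get("dmarc") == "pass"


# Hypothetical message: SPF and DKIM pass for the mailer's own domain,
# but DMARC fails because that domain does not align with the From domain.
sample = (
    "Authentication-Results: mx.example.net;"
    " spf=pass smtp.mailfrom=mailer.example.org;"
    " dkim=pass header.d=mailer.example.org;"
    " dmarc=fail header.from=example.com\n"
    "From: CEO <ceo@example.com>\n"
    "Subject: Urgent payment\n"
    "\n"
    "Please wire the funds today.\n"
)

print(auth_results(sample))
print(passes_authentication(sample))  # False
```

The sample shows why DMARC matters: SPF and DKIM can both pass for a domain the attacker controls, and only the alignment check reveals that the visible From address belongs to someone else.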

3. Continuous User Education

- Run regular training sessions for staff and students, including young users, on recognizing abnormal messages and requests.

- Simulate phishing attacks to build “muscle memory” for spotting suspicious communications.

4. Stay Informed via Reputable Threat Intelligence

- Monitor developments in cybersecurity from established threat analysts and vendors.

- Subscribe to official reports (like the Cato Networks Threat Report) for real-world case studies and guidance.

5. Security Best Practices for All

- Enforce multi-factor authentication (MFA) on all sensitive accounts.

- Keep software and devices up to date—patching old vulnerabilities is crucial.

- Use enterprise “endpoint detection and response” (EDR) to identify odd behaviors at the device level.
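
For readers curious how one common second factor works, the sketch below implements time-based one-time passwords (TOTP, RFC 6238) on top of HOTP (RFC 4226) using only the standard library. The function names and the `verify` helper are assumptions for illustration. A real deployment should use a maintained library and should also handle clock drift, rate limiting, and secret storage.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over the counter, then dynamic truncation."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP with the counter derived from the current time."""
    return hotp(secret, int(unix_time // step), digits)


def verify(secret: bytes, submitted: str, unix_time: float) -> bool:
    """Compare the submitted code to the expected one in constant time."""
    return hmac.compare_digest(totp(secret, unix_time), submitted)


# Shared secret from the RFC test vectors (never hard-code real secrets).
secret = b"12345678901234567890"
print(totp(secret, 59))              # 287082
print(verify(secret, "287082", 59))  # True
print(verify(secret, "000000", 59))  # False
```

Because the code changes every 30 seconds and depends on a secret the attacker does not hold, a phished password alone is not enough to log in. Codes can still be relayed in real time, which is why phishing-resistant factors such as hardware security keys are stronger still.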

Recognizing WormGPT-Based Attacks: Simple Signs Everyone Can Spot

- Unusual language, context, or requests (“Email me that password—quick!” or “Wire funds here now!”).

- Mismatched sender addresses—the display name might say “Boss,” but the address is odd.

- Links or downloads—never click unless 100% sure; impersonation links can be visually identical to bank or corporate sites.

- Too-perfect grammar and tone: Modern scams are polished, but context can give them away.
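
The mismatched-sender check in particular is easy to automate. Below is a minimal sketch that parses a `From` header with the standard library and warns when the address does not belong to a trusted domain. The `sender_warnings` function and the `example-corp.com` domain are hypothetical, and an exact-match allowlist is only one of several possible policies.

```python
from email.utils import parseaddr


def sender_warnings(from_header: str, trusted_domains: set) -> list:
    """Return warnings for a From header whose address is not in a trusted domain."""
    display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    warnings = []
    if domain not in trusted_domains:
        shown = display_name or "(no display name)"
        warnings.append(
            f"'{shown}' is sending from untrusted domain: {domain or 'missing'}"
        )
    return warnings


trusted = {"example-corp.com"}  # hypothetical company domain

# Display name looks right, but the domain swaps the letter "l" for the digit "1".
print(sender_warnings("Boss <boss@examp1e-corp.com>", trusted))
# Legitimate sender: no warnings.
print(sender_warnings("Boss <boss@example-corp.com>", trusted))  # []
```

The display name is free text the sender chooses, so only the address itself is compared against the allowlist. Lookalike domains that differ by a single character are caught because the match must be exact.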

FAQs About the Return of WormGPT

Q1: Is it illegal to use WormGPT or similar tools?

Yes. Creating, selling, or using these tools for real-world attacks is a criminal offense in most jurisdictions and can carry severe penalties.

Q2: Can traditional security software defeat WormGPT-based attacks?

Legacy antivirus and spam filters alone aren’t enough. Because these AIs constantly alter their output, signature-based tools fall behind. Modern, AI-driven security and behavior-based analytics are much more effective.

Q3: Who should be worried—businesses or individuals?

Both. Organizations are a prime target for profit-motivated attacks, but individuals (even kids and the elderly) can be targeted and harmed through realistic social engineering.

Q4: How can I spot a phishing email that might be AI-generated?

Look for context clues: urgent and unexpected requests, vague greetings, tiny address differences, or pressure to act fast. If in doubt, confirm using another method (like a phone call).

Q5: Are even experts at risk?

Yes. AI-generated attacks are now sophisticated and realistic enough that even experienced security professionals can be fooled.